
Maybe integrating mobile robots into everyday life isn’t a good idea

What might it mean for people when stronger and speedier robots are part of our daily routines?

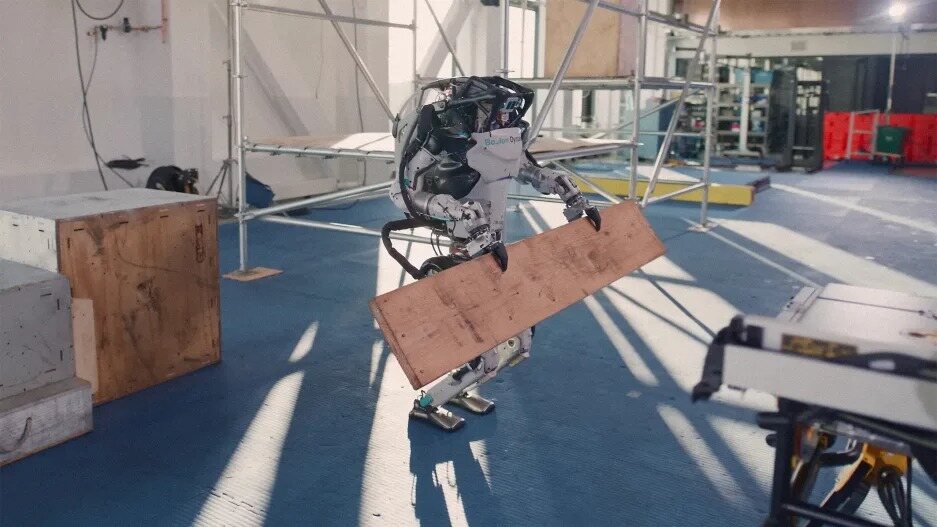

Boston Dynamics’s Atlas robot turns 10 years old this year. In 2023, Atlas, described on the company’s website as a platform “designed to push the limits of whole-body mobility,” is still a research project, but it is off its tether, flexing its steel, and showing tremendous strength and dexterity in the lab. In a demo released last month, the robot shows off its “grip capabilities” by fetching a tool bag and delivering it to a (human) construction worker standing on a second-story platform. To reach the worker, the robot first fashions itself a bridge, using a plank of wood to cross a chasm in the scaffolding. Once the task is complete, Atlas jumps back onto a small platform on the ground and busts a move with some somersaults.

Seeing such dexterity and agility in a robot is a kind of kinetic uncanny valley: the robot moves swiftly, and with raw power, in a new context. But what might it mean for the rest of us when more manually dexterous, strong, and speedy robots are off their tethers, out of their cages, and part of the fabric of daily life? Sure, the robot in the demo looks precision-controlled, but what if it isn’t, or isn’t always?

In the past, strong robots were built for a single task and kept in manufacturing plants or other industrial settings. I recall touring the Toyota factory in Nagoya, Japan, not too many years ago and watching giant robots weld auto frames. Robots like these are immobile and are kept behind cages for safety.

Taking robots out of their cages received careful consideration several years ago, too. When Robonaut (NASA’s astronaut assistant) was deployed to aid astronauts, its robotic torso was the subject of a talk at the Jet Propulsion Laboratory in Pasadena, where scientists and engineers explained the precautions they’d taken to pad Robonaut with wrapping that could cushion any unforeseen collisions with humans.

So far, at least, that kind of precaution hasn’t been evident with Atlas. During the demo, I found myself squirming and wondering: What if the robot missed, tossed the tool bag too far, and hit the construction worker, or accidentally somersaulted into someone? Atlas seemed fine in the demo, but there was no one else around. What happens when a robot (or robots) like that, perhaps even one produced by a rival of Boston Dynamics, is immersed in a situation with more than one person?

Any free-form walking mobile robot assistant has algorithmic roots. It will be programmed and controlled by computer code written by people, with help from AI and machine learning. This means the robot’s decisions will be deduced using artificial logic, which is not the same as human logic and does not by itself create true cooperation. For anyone to cooperate, each party has to forfeit what they might want to do at a given time to meet and work with others who have also forfeited what they want to do in that same moment. All parties, in other words, forfeit at least some individual agency for the sake of group cooperation. Because these robots are not currently shown in a group context, it isn’t clear how much they will be designed for cooperation, or whether we humans will be perpetually yielding to robots that weren’t programmed to consider the choices humans might make, or to adapt to us in real time.

Consider the issues we already have with websites, prepaid ticket vending machines, or any other automated machine we might interact with. Now make it mobile and powerful, yet still limited in how well it can communicate, and thus cooperate. In all likelihood, there will be mistakes. Despite all the fun people are having with chatbots like ChatGPT, we’ve already seen these tools produce a number of fallacies and untruths. Now imagine similar flubs being deduced as control decisions and carried out in the actions of a strong, untethered, mobile robot.

Even in military applications, where there are established procedures and processes, unexpected and unforeseen events still occur, and an inability to respond in equally unexpected ways can be fatal. Imagine hundreds of these robots working as orderlies in a hospital, all trying to navigate around each other and around us, or on a large construction site with hundreds of workers and potentially thousands of tasks and processes. It is hard to see how these mobile robots could operate in such settings without regularly causing accidents or crashing into each other. They would be interacting not just with one person but with many other robots, machines, autonomous vehicles, and so on, all while the people around them, or working with them, are also trying to complete their own tasks.

When these strong mobile robots are deployed in workplaces and in educational, industrial, military, or healthcare settings, we won’t have an easy way to communicate with them. We’ll have to modify our behavior to cooperate with them, or suffer the consequences. Coexisting with such robots will be yet another autonomous curveball that people may have to adjust to in the future. For now, Atlas is strictly a research project between Boston Dynamics and DARPA, and there aren’t any plans to deploy it to a construction site, or anywhere else, near you.

But in time it might be, along with others. That prospect brings challenges for both the robots and the rest of us. We’ll need to consider how these robots are programmed, how we will interact with them (or not), and what they might do for us, or to us. It makes sense to start the discussion now, before regulations are drawn up by . . . a chatbot.