
Adobe is diving—carefully!—into generative AI

The creativity giant’s new Firefly imagery generator is akin to Dall-E 2 and Midjourney—but aims to steer clear of some of their controversies.

Dall-E 2. Stable Diffusion. Midjourney. These tools all use generative AI to create images based on any text description you can dream up. They’re astounding. But the stuff they churn out can be more fascinatingly weird than wonderful. They’re also provoking ire (and, in the case of Stable Diffusion and Midjourney, legal pushback) from artists who don’t like the idea of AI taking their jobs, being trained on their work, and even mimicking their style.

Enter Adobe, the eternal behemoth of digital-imagery software. Like everyone else in the business of computing, it can hardly choose to avoid the generative AI boom. But as a company whose whole reputation rests on helping creative people produce high-quality results, it also has more to lose than a startup. An Adobe image generator must be capable of rendering imagery usable in a professional context. And if the underlying technology is legally or ethically questionable, it could damage the company’s relationship with its core customers.

Today, at its Adobe Summit conference in Las Vegas, the company is revealing Firefly, its first major piece of functionality based on generative AI. Debuting as a beta web-based service, it will also be integrated into Photoshop, Illustrator, and Adobe Express (and, eventually, appear in all relevant Adobe products).

Like other examples of AI appearing under Adobe’s “Sensei” brand, Firefly is based on home-grown technology. I haven’t yet had the opportunity to try it for myself. But I did get a demo from Adobe CTO for digital media Ely Greenfield, who explained the company’s approach to the technology, which emphasizes thoughtfulness during a period when other AI titans are moving fast, breaking things, and mopping up afterward.

“We’ve been talking a lot to our customers, everyone from the creative pro to the enterprise to the creative communicator, about what they think about [generative AI],” says Greenfield. “And we think it can be incredibly empowering for them.”

To teach Firefly to create pictures, Adobe curated a training set based on its own vast repository of stock imagery as well as public-domain work and other material it knew it had the legal right to ingest. Along with allaying concerns about rights issues and preventing other companies’ brands from showing up in generated images, this process helps Firefly come up with visuals that are professional rather than just entertainingly bizarre. Even so, “it’s still a bit of a rolling-the-dice game,” says Greenfield. “Sometimes you get good stuff, sometimes you don’t. And that’s true with Firefly as well. Yes, we can generate beautiful high-quality content because of what we’re training on, but you’ll get the occasional extra finger or limb.”

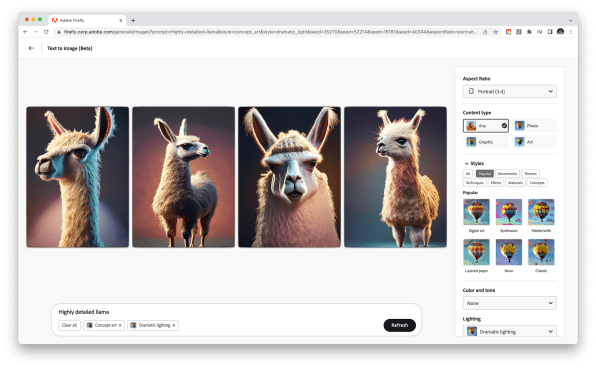

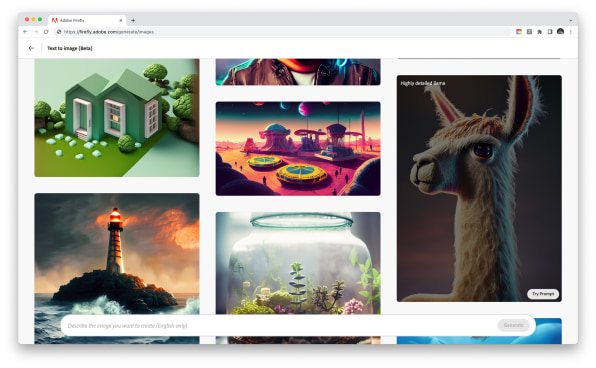

While typing stream-of-consciousness prompts into existing tools such as Dall-E is loads of fun, Adobe gave Firefly an interface that emphasizes workaday productivity. Along with typing in a free-form request, such as “highly detailed llama,” you can click on thumbnails to specify elements, such as the desired content type (Photo, Graphic, Art), style (Synthwave, Palette Knife, Layered Paper, and beyond), and lighting (such as “dramatic”). There’s also a typography option that lets you request items such as “The letter N made from green and red moss.”

Firefly in its beta form could manage to be more ingratiating than its existing competition, but it won’t address every concern artists have on day one. Adobe says it’s working on ways to pay creators whose images are leveraged by Firefly, including contributors to Adobe Stock. It’s also introducing a “do not train” tag that artists can embed in their digital work’s metadata. That could be a step toward reassuring artists who don’t want their creativity sucked up into algorithms, especially if it’s adopted and honored by everyone else who’s training AI.

Firefly is the single most obvious piece of generative AI functionality that Adobe could build. It’s also just a starting point. “This is the first model that we’re delivering,” says Greenfield. “It will be the first of many in the family.” Already in the works: applications of the tech for video, 3D, and (since the company is a major player in marketing technology) ad copy. Even if Adobe isn’t one of the first tech giants that spring to mind when you think about AI’s transformative potential, the long-term impact on its sprawling portfolio of offerings could be just as profound.