
This plug-in stops employees from leaking sensitive data to AI chatbots

Patented.ai says that when employees enter company data into a chatbot, the data can then be used to train the large language model that powers the chatbot.

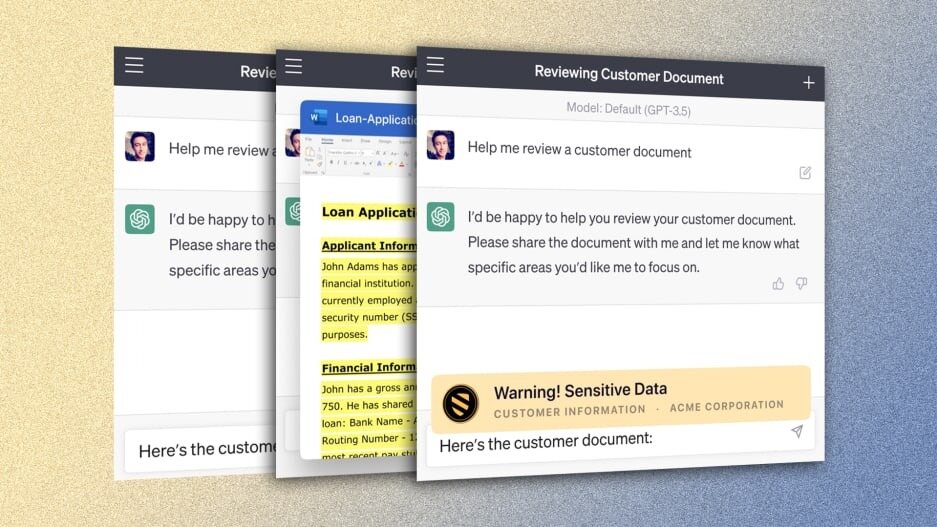

San Francisco-based startup Patented.ai has released a plug-in, called LLM Shield, designed to warn a company’s employees when they’re about to share sensitive or proprietary information with an AI chatbot such as OpenAI’s ChatGPT or Google’s Bard.

The company says that when employees enter company data into a chatbot, the data can then be used to train the large language model (LLM) that powers the chatbot. What’s more, it says, the sharing of that data can serve as a signal to those who build the training data sets to search for even more data at that same place on the network.

“AI is incredibly powerful and companies shouldn’t have to ban access,” Wayne Chang, the serial entrepreneur and investor who founded Patented.ai, said in a statement. “With visibility and control, companies should have more confidence with their employees and their use of LLMs.”

LLM Shield is powered by an AI model designed to recognize all kinds of sensitive data, from trade secrets and personally identifiable information to HIPAA-protected health data and military secrets. A Patented.ai spokesperson said the model will power a range of products that protect companies’ IP across a variety of industries. Because of recent demand prompted, in part, by stories of leaks at companies like Samsung, the company accelerated the development of LLM Shield.

The plug-in, which can run inside a chatbot window, shows an alert to an employee who has just entered sensitive information in a chatbot’s text window.
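Patented.ai has not published how its model works, so the following is only a rough sketch of the general idea of checking a prompt before it is sent. It swaps the company’s AI model for a few simple regular expressions, and every name in it (`PATTERNS`, `find_sensitive`, `warn_before_sending`) is hypothetical and chosen for illustration.

```python
import re
from typing import List, Optional

# Hypothetical patterns, for illustration only. This is NOT Patented.ai's
# detector: a real product would use a trained model and far broader coverage.
PATTERNS = {
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidentiality marking": re.compile(
        r"\b(?:confidential|trade secret|internal only)\b", re.IGNORECASE
    ),
}


def find_sensitive(prompt: str) -> List[str]:
    """Return the label of every pattern that matches the prompt text."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(prompt)]


def warn_before_sending(prompt: str) -> Optional[str]:
    """Build a warning message, or return None when nothing was flagged."""
    hits = find_sensitive(prompt)
    if not hits:
        return None
    return "Warning: this prompt may contain " + ", ".join(hits) + "."


if __name__ == "__main__":
    # An alert-style check an employee might see before a prompt is submitted.
    print(warn_before_sending("Summarize this CONFIDENTIAL memo for jane.doe@example.com"))
```

A pattern list like this misses anything it was not written for, such as source code or unreleased product plans, which is one reason a vendor might rely on a trained model instead.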

Patented.ai says LLM Shield is available today, and that it intends to roll out new types of security products powered by its AI model throughout this year, including a version of the plug-in for personal use.

Generative AI models will very likely find many useful and time-saving applications within businesses large and small, but many companies are increasingly concerned about how sensitive or private information could be used by the models, the inner workings of which are still not fully understood. Bank of America, Goldman Sachs, Citigroup Inc., Deutsche Bank AG, and Wells Fargo have all banned ChatGPT to minimize the chance of leaks.

Separately, on Thursday, Avivah Litan, an analyst with consulting firm Gartner, said, “Employees can easily expose sensitive and proprietary enterprise data when interacting with generative AI chatbot solutions.”

She continued: “There is a pressing need for a new class of AI trust-, risk-, and security-management tools to manage data and process flows between users and companies who host generative-AI foundation models.”