
Google’s I/O announcements reveal a sprint to catch up with OpenAI/Microsoft in generative AI

Google announced a flurry of AI enhancements to its productivity suite and cloud platform, but many feel quite familiar.

Late last year, as OpenAI’s ChatGPT was astonishing much of the public with its amazing conversational and improvisational skills, Google was reportedly in panic mode.

The tech giant, which declared itself an “AI company” back in 2017, suddenly seemed to be ceding its AI leadership role to a much smaller upstart that had bet big on generative AI models.

Google went into overdrive in early 2023 to wrest back some of its AI mojo. Google researchers learned to think about releasing actual AI products instead of just publishing research papers. It raced to put generative AI features into existing products and brand-new ones.

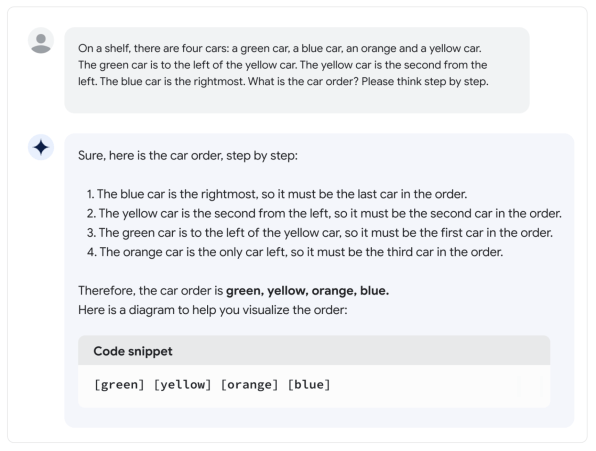

Today, at the company’s annual I/O event, we saw the fruits of that effort. Google unveiled its newest and best large language model, PaLM 2. The new model is multilingual: it was trained on 100 human languages. It knows coding too. It was trained on 20 programming languages, including Python, JavaScript, and Fortran, so it’s ready to auto-generate code for developers working on a diverse set of project types. It was also trained on a massive amount of scientific and mathematical text to give it “reasoning” abilities, Google says, and it can show the user the steps it took to arrive at a given output.

PaLM 2 is also faster and more efficient in terms of the compute power it needs to run. And it comes in different sizes to suit the hardware it runs on. The largest PaLM 2 model, called Unicorn, was built to run in data centers, while the smallest, called Gecko, is small enough to run on a high-end mobile phone.

Google says PaLM 2 is already the brains behind 25 new products and features. “[It’s] obviously important for us to get this in the hands of a lot of people,” says Zoubin Ghahramani, VP of Google DeepMind, who leads the research team that built the PaLM 2 models. “Along that same line, we’ve actually trained PaLM 2 deeper and longer on a lot of data.”

BARD GETS SMARTER

Importantly, PaLM 2 brings new powers to Google’s Bard assistant, which so far has failed to measure up to the ChatGPT chatbot it was meant to rival. PaLM 2’s multilingual powers will show up immediately in Bard, which starting today is available in Japanese and Korean.

The big news, however, is that Google is opening up Bard to everyone. Starting next week, Google is removing the waitlist for Bard, and opening access to people in 180 countries.

Bard will also soon be multimodal. That is, users can input an image as a prompt (via Google Lens, perhaps), and Bard will be able to integrate imagery into its responses.

Since people often begin generating ideas within Bard and then want to continue working on them in Gmail or Docs, Google has now made it easy to export content from Bard to those apps with a click of a button.

Also on the roadmap are “extensions,” Bard’s answer to OpenAI’s “plugins” for ChatGPT. They offer a way to access the functionality and data of other apps from within the Bard environment. For instance, there will be extensions for Google apps like Docs, Drive, and Gmail. Most interestingly, though, there will also be extensions from other companies. The first one, Google says, will be Adobe Firefly, which will let Bard users generate original images from text prompts directly within the chatbot.

PRODUCTIVITY FEATURES IN WORKSPACE

Google has been hurrying to add generative AI features to its productivity suite, Workspace, to rival those in Microsoft 365. In March, the company announced that it was making a modest set of AI features available to a group of “trusted users,” including “help me write” features within Gmail and Docs.

Now that feature set has widened significantly, powered by PaLM 2. Many of the features may look familiar, since Microsoft has already built many of them into its Microsoft 365 productivity apps such as Word and Excel. Google has also come up with a new brand for the AI features. Where Microsoft gives us an AI “copilot” to accompany us while working in 365, Google gives us something called “Duet AI.”

In Sheets, a Duet sidebar now appears at the right of the interface, where you can type a description of the spreadsheet you want and the Duet tool designs it for you. From there, you can modify it to fit your needs.

The same thing works in Slides. You describe your deck design to Duet, and presto, you have a first draft to work from. You can also now generate images for your slides using text descriptions.

Google has also brought the “help me write” feature to mobile devices, so users will be able to compose Docs and Gmail messages using AI on their phones.

AI-POWERED SEARCH, A FIRST DRAFT

Part of what gave Google such a fright when ChatGPT came out was that many people immediately thought “why do I need Google’s ad-bloated search engine when I can just ask this chatbot for information?” Since then, Google has been under pressure to bring more generative AI features into Search.

At Google I/O, the company unveiled its answer: a new kind of conversational search powered by the new PaLM 2 large language model. Google is (wisely) debuting the AI search tool within its “Search Labs,” a sort of sandbox where users can try out experimental products and features.

Google segregated the AI search in Labs for two good reasons. The first is that generative AI search is still prone to making errors, misattributing information, or just making stuff up. Google doesn’t want to risk the trust of its millions of search users by immediately building the AI features into its main search experience. The other reason is that Google’s search engine is a multibillion-dollar ad delivery machine, and the company doesn’t want to mess with that cash cow in any way, at least not until it has to. Neither Google nor anyone else knows how to profitably integrate ads into conversational search results. Not yet.

The main piece of Google’s Search Generative Experience (SGE), as it’s called, is an AI-generated “snapshot” area at the top of the results page. So if you type a question like “What’s the best vacation spot for a family with dogs: Bryce Canyon National Park or Zion National Park?” the snapshot will contain a short discussion of the question, including the pros and cons of both parks, and some kind of recommendation. On the right, you’d see a series of articles from reputable sources that “corroborate” what the AI has said. SGE also uses the main Google search engine to help verify its facts.

SGE also helps with shopping “journeys,” as Google calls them. If you ask what kind of electric bike is best for suburban commuting, it’ll return a lineup of bikes that might work for you. You’ll see bike images, reviews, and prices with the listings. You’ll also see a group of possible follow-up questions below the snapshot, which might help you and the AI narrow down or fine-tune the list of bike recommendations. And the AI will remember the questions you ask throughout your “journey” so that you don’t have to constantly start over. When you finally get to a group of promising choices, the AI will show you some local or ecommerce vendors that have the bike in stock.

There are some kinds of searches or questions the AI search engine won’t touch. If the tool determines that there isn’t enough information out on the web to create a snapshot, it will default to showing you regular search results, meaning a list of relevant links. You might get this same result if you ask a question about a subject area Google sees as unsafe–such as racism or terrorism.

TOOLS FOR CLOUD DEVELOPERS

Since PaLM 2 was trained on a wide range of programming languages, Google will soon deliver an expanded set of AI tools to developers. Just as Microsoft has branded its AI coding tools “copilot,” Google has branded its coding tools “Duet AI.” Google has exposed these code generation and debugging tools in a variety of interfaces commonly used by developers across Google Cloud.

Google has also added a chat assistant to various developer environments within Google Cloud, so that developers can ask questions in plain language about coding issues and best practices, how to use certain cloud services or functions, or to fetch detailed implementation plans for their cloud projects.

These Duet AI developer features are so far available to only a small set of developers, but the company says it will be expanding access soon.

MORE AND BETTER CLOUD MODELS

Google, like Microsoft and OpenAI, wants to sell enterprises access to its generative AI models via the cloud, and the company has expanded the variety and power of its cloud models this year.

The company’s text-to-code foundation model, Codey, can be accessed by enterprise developers to build code generation and code completion into their own developer applications.

Enterprise developers can use Google’s Imagen text-to-image model to generate and customize high-quality imagery.

Google also announced Chirp, a speech-to-text model that lets enterprises build voice-enabled apps that transcribe speech in many languages.

A SLOWER, MORE METHODICAL APPROACH

Taken together, Google’s new AI tools seem to track closely with the same consumer-focused, developer-focused, and enterprise-focused tools that have already been announced by OpenAI and Microsoft.

Absent from the Google I/O announcements was a killer generative AI app that nobody else had thought of, or that nobody other than Google could deliver. Google also appears to be holding on to a more cautious and methodical approach to releasing AI tools and making them available to large numbers of users.

“Producing an example of a demo working really well is very different from producing a product that reliably works,” says Ghahramani. “We’ve put a lot of thought and effort into trying to build AI features into our products in a way that people can use them reliably.”

He continues: “I know a lot of people are just talking about AI right now, but if you think about many of our products that are already taken for granted, like translation and speech recognition and the assistant and search ranking and all of these things, there’s AI technology that’s been built into them over the years that people almost don’t notice or remember.”

Still, a new sense of urgency is present within Google’s walls. Two rival AI groups within the company, DeepMind and the Google Brain team from Google Research, have merged into Google DeepMind so that they can combine their brainpower and share compute power, with the goal of building better AI models that might truly challenge OpenAI.

In fact, Google has confirmed a report from The Information that it has a new model in the works, called Gemini, which will rival the impressive power and versatility of OpenAI’s GPT-4.

Harry McCracken contributed reporting to this article.