
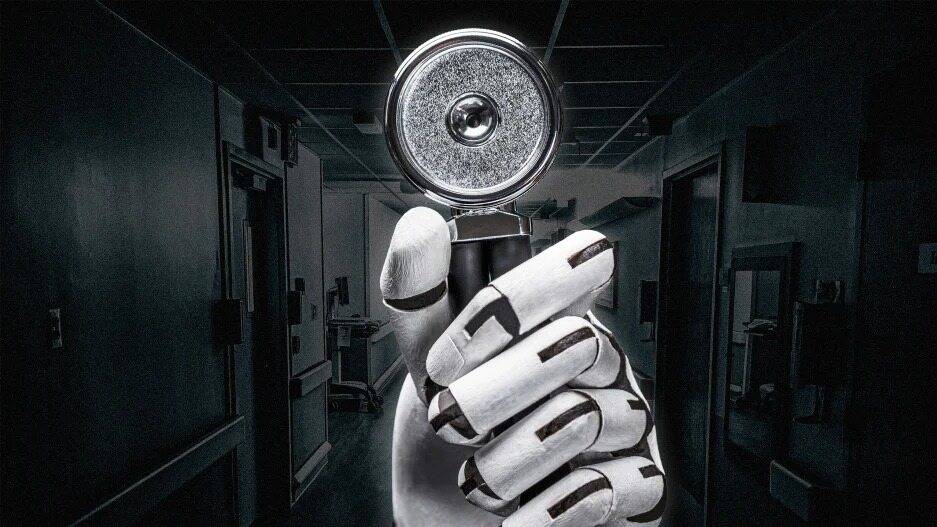

I’m an ER doctor. I think LLMs may shape the future of medicine—for better or worse

While programs like ChatGPT are very exciting for the future of medicine, they also come with some concerning downsides, especially when it comes to the class divide in healthcare.

“So what brings you into the ER on a Friday night?” I ask my latest patient.

“Bad back pain,” the 82-year-old gentleman tells me. “Been acting up lately.”

It’s an interesting reply—especially since this patient told me the very same thing a few days ago.

In the months since I started experimenting with ChatGPT during my shifts as an emergency room physician, I’ve learned that ChatGPT is highly limited and risky as an independent diagnostic tool—but extremely valuable as a tool for helping explain complex medical processes to patients.

In the process, I’ve realized how closely my role as a doctor already aligns with large language models (LLMs), and how it can evolve alongside AI, as long as human engagement remains core to that process. But while programs like ChatGPT are very exciting for the future of medicine, they also come with some concerning downsides, especially when it comes to the class divide in healthcare.

To better explain what I mean, here’s a glimpse at how medicine is actually practiced—and how LLMs can both complement and hinder treatment.

THE HEALTHCARE NEEDS CHATGPT CAN’T QUANTIFY

Most healthcare involves figuring out what someone actually needs, which is often very different from what they say they need. This is in sharp contrast to how consumer demand is usually created and met. If I run a car dealership, for example, and a customer comes in telling me they need a new vehicle, I will try to upsell them on the most expensive option possible. I don’t say, “But do you really need a new car?”

Which brings me back to my elderly patient who came in recently, reporting backache.

“When did this pain start?” I ask him.

“17 years ago.” He then starts talking about his grown-up kids who rarely visit.

After a few more questions, the most likely diagnosis becomes clear: Chronic loneliness.

This is actually a very common condition, especially among senior citizens. They may come to the ER complaining of a physical ailment, but often they’re actually seeking human connection. Other patients come to us complaining of various symptoms, but are really seeking help for stress or anxiety. When basic emotional needs can’t be met anywhere else in our system, or by society at large, the local emergency room is frequently where people turn.

But there’s generally a tension between any patient’s stated complaint and their actual need.

Someone comes in complaining of a cough. Do they need TB testing? Or an X-ray? Is the cough a sign of an autoimmune disorder? Or, in the end, does the patient just hope to get a doctor’s note they can show their employer? Ninety-nine percent of healthcare delivery is figuring out what a patient really and truly needs.

Healthtech startups, especially those hoping to leverage LLM technology, often miss this distinction. Treating health as if it were like any other consumer need is a serious mistake.

This isn’t to say LLMs have no role in the future of medicine. As a physician, my ability to care for a patient hinges on translating what they tell me into a coherent medical narrative, one that aligns with their physical symptoms. In other words, a doctor’s role is in many ways that of a highly trained prompt engineer.

In fact, during recent ER shifts, after entering detailed, carefully written prompts about my patients into ChatGPT, I have been able to uncover possible diagnoses and glean new information. These experiences suggest that LLMs like ChatGPT will eventually be an important tool in our diagnostic tool chest.

But they also heighten my concerns that AI—if poorly implemented—may worsen outcomes for many patients.

THE COMING CLASS DIVIDE OF VERBAL PROMPTS?

Over time, systems will be put in place where a doctor or nurse can feed their dialogues with patients into an LLM, along with the patients’ medical history, and get back a list of all possible diagnoses to review. Since LLMs are so finicky and unreliable, I suspect such a system will need to be integrated with an intermediary AI program similar to GrammarlyGO. (Essentially, an AI to check an AI.) Instead of imposing grammar rules on responses, however, it’ll apply protocols around health treatment and check answers against our vast knowledge base of human health.

I’m excited by this potential future for healthcare, since it’ll enable us to treat far more patients far more effectively at scale. And the more patient data that is entered into a system like this, the more effective it will become.

At the same time, I’m also concerned about how LLMs might worsen existing inequities in our healthcare system. Here’s why:

If you ask ChatGPT-4 about chest pain, saying only, “My chest hurts – what should I do?”, this terse 64-word response comes back:

> These symptoms are very serious and could indicate a life-threatening condition such as a heart attack. **Please seek immediate medical attention. Call your local emergency number immediately or ask someone else to call for you.** It’s crucial to act quickly in these situations. Do not ignore these symptoms and do not attempt to self-diagnose or treat them. Please contact a healthcare professional right now.

Contrast that with this input to ChatGPT:

“I am a 48-year-old male who has had chronic chest pain when I cough from seasonal allergies for the past 6 months. It is not worsened by exertion, movement, or deep respirations. I do not have a fever, light-headedness, or leg swelling. I have tried Zyrtec with some improvement, but am not certain what else to consider. What should I do?”

ChatGPT’s response in that case details, in over 300 words, “five possibilities that you could discuss with your doctor.” It’s an impressively useful output that includes complex diagnoses like gastroesophageal reflux disease and musculoskeletal pain.

The contrast between these two responses illustrates my concern about the rise of LLMs in healthcare.

Education level (and, by extension, economic and social background) strongly shapes how verbose and detailed a patient can or will be about their symptoms. Many cultures around the globe consider complaining about one’s physical health a sign of vanity or a lack of religious faith; people raised within traditional gender roles may also hesitate to fully discuss their symptoms, for various reasons.

As a result, I fear that LLMs will give far more useful, higher-quality answers to patients with more education. To prevent that outcome, we’ll need to work hard to create systems that help patients of all backgrounds construct effective prompts, so that everyone can benefit from the power of LLMs. In a medical context, those prompts are best created through close interactions between a patient and healthcare staff.

One day, I believe, artificial intelligence will help us diagnose, treat, document, and communicate with our patients, with far greater efficiency and accuracy. But a face-to-face relationship that makes a patient feel cared for and listened to is not only essential to the healing process; it’s also necessary for making any AI assistant valuable.

After all, an elderly patient may describe any number of symptoms of his back pain to ChatGPT, but it will take onsite medical staff to discern a lonely man in dire need of human connection.