- | 8:00 am

AI looms large at Davos

AI is a primary topic of conversation at this year’s meeting of leaders and elites in the Swiss Alps.

Welcome to AI Decoded, Fast Company’s weekly LinkedIn newsletter that breaks down the most important news in the world of AI. If a friend or colleague shared this newsletter with you, you can sign up to receive it every week here.

AI IS EVERYWHERE AT DAVOS THIS YEAR

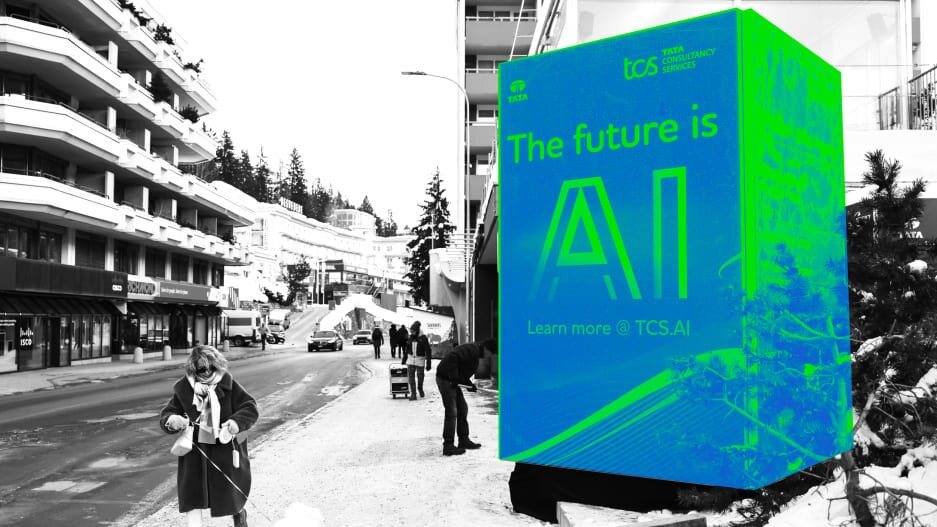

As world leaders and other elites arrived in the small skiing village of Davos, Switzerland, for the World Economic Forum’s annual meeting, they were greeted with display ads and window signs about AI. On Davos’s main drag, the Indian conglomerate Tata erected a pop-up store proclaiming, “The future is AI.” Salesforce and Intel have their own AI messaging plastered over nearby buildings. Down the street is the “AI House,” an ancillary venue hosting a range of panels that feature the likes of OpenAI COO Brad Lightcap and Meta’s Yann LeCun.

Meanwhile, OpenAI CEO Sam Altman and Microsoft CEO Satya Nadella will appear at an event at the main conference later this week called “Generative AI: Steam Engine of the Fourth Industrial Revolution?” Earlier, Cohere CEO Aidan Gomez spoke on the panel “AI: The Great Equalizer?” In all, the conference agenda includes 11 panels about AI and AI governance.

Setting the stage for this week’s discussions was the release of a new report from the International Monetary Fund saying that AI will affect 40% of the world’s jobs, a figure that rises to 60% in the world’s developed economies. The report also finds that the jobs of college graduates and women are most likely to be transformed by AI, but that those same people are most likely to benefit from the technology through increased productivity and wages.

“In most scenarios, AI will likely worsen overall inequality, a troubling trend that policymakers must proactively address to prevent the technology from further stoking social tensions,” wrote IMF managing director Kristalina Georgieva in an accompanying blog post. “It is crucial for countries to establish comprehensive social safety nets and offer retraining programs for vulnerable workers.”

No doubt, the world stage is a good place to discuss the changes AI will likely bring, though if climate change is any indication, the talk is likely to produce a lot more sound bites than action.

Meanwhile, a new report from Oxfam finds that the global billionaire class has seen its wealth grow by 34% (or $3.3 trillion) since 2020, while nearly five billion people around the world have grown poorer.

OPENAI’S PLAN TO CONTROL AI-GENERATED ELECTION MISINFORMATION

OpenAI says it’s taking steps to make sure its AI models and tools aren’t used to misinform or mislead voters during this year’s elections. For instance, its DALL-E image generator is trained to decline requests for creating images of real people, including political candidates. The company says it’s been working to understand how its tools might be used to persuade voters of different ideologies and demographics. For now, OpenAI does not allow the use of its models to:

- Build applications for political campaigning and lobbying

- Create chatbots that pretend to be real people (such as a candidate) or institutions (a local government, for example)

- Develop applications that use disinformation to keep people away from the voting booth

Regarding deepfakes, OpenAI says it plans to begin planting an encrypted code into each DALL-E 3 image showing its origin, creation date, and other data. The company says it is also working on an AI tool that detects images generated by DALL-E, even if an image has been altered to obscure its origin or original purpose. These seem like reasonable steps, but with Super Tuesday just weeks away, the company needs to complete these tools and get them activated.

Regulators aren’t moving much faster. The consumer rights watchdog Public Citizen points out that the Federal Election Commission (FEC) still hasn’t come to a decision three months after closing an open-comment period on whether it should create new campaign-ad rules around AI tools and content. “It’s time, past time, for the FEC to act,” said Public Citizen president Robert Weissman in a statement. “There’s no partisan interest here, it’s just a matter of choosing democracy over fraud and chaos.”

If there is a bright spot here, it’s that state legislatures have moved faster to get anti-deepfake laws on the books. Public Citizen reports that 23 states have now passed or are considering new laws to make the development and distribution of deepfakes a crime.

GENERATIVE AI’S LESSER-KNOWN RISK: SECURITY

As companies hurry to pilot or implement new generative AI, CEOs and CIOs have had plenty to worry about, including the risk of legal exposure due to AI systems hallucinating, violating privacy, or discriminating against classes of people. But it turns out that CEOs are losing the most sleep over the possibility of their AI systems being hacked. For instance, a customer service AI agent could be prompted to spew obnoxious messaging to customers. Or a quality control system could have its training data poisoned so that it can no longer recognize certain kinds of product flaws.

A new survey of CEOs by PwC shows that, among leaders who say their company has already implemented AI systems, 68% say they worry about cyber attacks (66% among leaders who have yet to go live with AI systems). Meanwhile, more than half of CEOs worry that their AI systems will spread misinformation or cause legal problems or reputational harms. Roughly a third of CEOs saw a risk that generative AI systems might show biases regarding certain groups.

In late October, the Biden administration released a set of AI security guidelines, including an initiative to use AI tools to find security vulnerabilities around models, and a directive that the National Institute of Standards and Technology develop ways of running adversarial tests on AI models to gauge their security. A number of AI laws, some of them directly addressing security, have been proposed in Congress, but none seem close to becoming law.