
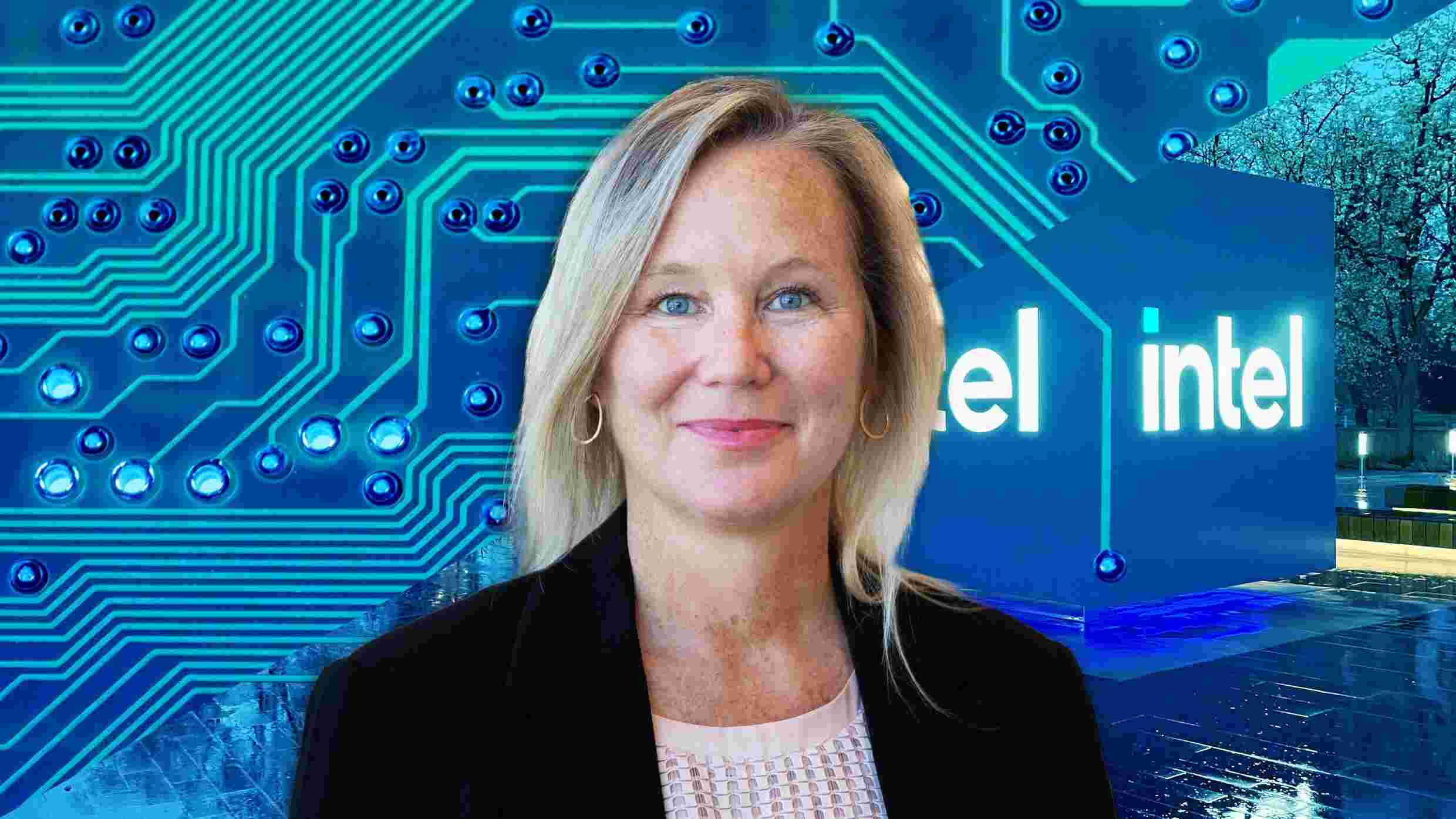

Intel’s chief people officer: Humans need to be at the center of AI

Christy Pambianchi explains how the company is thinking about artificial intelligence and the workforce.

In 1971, a young Silicon Valley startup introduced the first commercially available microprocessor to the world. Intel’s innovation helped fuel the personal computing revolution. Now a tech titan, the company aims to play a role in the artificial intelligence revolution by helping feed the sector’s ravenous hunger for chips.

In 2019, Intel purchased AI chip maker Habana Labs for $2 billion; this year, Intel plans to roll out Gaudi 3, a chip that is purported to be powerful enough for generative AI software and competitive enough to challenge AI chips from rivals like Nvidia and AMD, though some critics say that Intel has a long way to go in the hypercompetitive AI chip race.

From Intel’s so-called AI Inside program, which guides how the company is employing artificial intelligence in its business; to iGPT, Intel’s proprietary large language model that employees are encouraged to use; to a multimillion-dollar AI upskilling and education initiative, Christy Pambianchi, EVP and chief people officer at Intel, says the company is “super excited” to explore how humans can use AI.

Fast Company spoke with Pambianchi about how the company plans to responsibly integrate AI technology within its workforce, how to encourage workers to embrace AI, and how to upskill workers for the AI jobs of the future.

This interview has been lightly edited for length and clarity.

How is Intel applying AI within its workforce?

Obviously we’re working on technologies to enable AI processing with our products. We’ve also created a body of work called AI Inside for our employees to partner together to say, “How could the capability of this computing power and technology apply to us inside the company?” We’ve been front-footed and open about AI. It’s also being championed by our CEO, Pat Gelsinger.

Pat, the head of strategy, myself, the head of finance, and various operating leaders have picked four main areas to focus on at a corporate level. These are the largest areas of work in the company. We’re looking at those and putting teams together to say “Okay, how can the capability of AI transform work in this area?”

Tell us more about AI Inside.

We have 120,000 employees, and almost all of them are technical. Whether it’s our frontline employees making our products or our engineers inventing them, they all want to play, they all want to be in it. So on one level, AI Inside is our four focus areas: manufacturing, software, chip design, and chip validation.

We started to look really objectively at: What are the bodies of work that we do that are either the most repetitive or potentially have the largest workload? One is all of our manufacturing. For example, in our manufacturing environment, we collect a ton of data, but with today’s technical capabilities, we actually don’t keep it all because it’s just not possible to analyze it all.

But now it will be. That’s one main area we’re looking at. Another whole bucket is in software. There’s a lot of work to do in quality control and software validation. If that work were improved or automated a little bit by AI, what then could our software experts do? And then the two other areas we’re focused on [for implementing AI] are chip design and chip validation.

At the same time, AI Inside is our agreement on a set of tools and a repository where ideas can be cataloged so that we have the grassroots efforts going on, but in a way that it can be captured. Teams from around the world can go into the database and see if someone’s already done something. We’re trying to have high engagement, high democratization, and ideation. But if you don’t guide [AI experimentation] well, you could just end up with lots of small cells, so to speak: Someone stood up a knowledge base, or someone stood up a tool here and there. You actually just wind up with a lot of technical debt and no real liftoff. We’re trying to find that right balance.

One of the biggest topics in HR right now is how teams are monitoring and measuring worker performance. Does your team use AI for those functions?

We’re looking primarily for AI in any HR application to be something that helps accelerate and augment our work. We don’t have AI, for example, making hiring decisions or internal job movement or promotion decisions or things like that. We do have in our talent acquisition space some areas where technology helps summarize candidate applications and get them to hiring manager teams for reviews—that analysis and synthesis.

When it comes to using AI for employee monitoring and sensing, we don’t really lean into that. We do have annual employee surveys with open-ended questions where people can write in their own independent answers, and we’re able to leverage technology to summarize those answers to give us key themes. But we don’t monitor employee emails and things such as that.

The area where we’re leaning into AI the most is employee development. We just updated our job architecture, so we have a completely refreshed and updated set of job families and profiles, including skills and experiences. That’s now transparently available to all employees. Eventually it will link to a career hub and learning libraries, and that’s where we want to see AI.

Let’s start to give employees nudges, like “80% of the other incumbents in this job role that you’re in have taken these three classes” or “It’s time to build your career plan” or “Here’s how you could find a mentor.” [AI] could almost be a personal coach. And then similarly, for supervisors and managers and team leaders, we could use AI to nudge them about their responsibilities to curate, develop, and enhance their employees’ experience.

Some companies are leveraging AI to do things like generate performance reviews and things such as that, but we’re not. We’re leaning very heavily into employee development, manager capabilities, and really trying to create a super vibrant internal talent pool where employees can realize their full potential, know all the opportunities that are available, and hopefully leverage this technology to help them.

As a chief people officer, how do you manage workers’ fears about AI?

Almost always over the arc of history, when there’s a new technology, usually the knee-jerk reaction is that it’s going to result in massive job loss. We can see that over the arc of hundreds of years, not just the last 20 years. But that’s usually not the outcome. What is the outcome is work evolves and there are new roles, new capabilities, new things that are enabled by the underlying technology. And so I think that’s what is going to happen with AI and with generative AI.

It’s also an evolution from spreadsheets to databases to machine learning to algorithms. We had AI and now this is generative AI. So to me, generative AI is part of a continuum, versus something that has popped onto the scene like it’s brand-new. It’s really an evolution.

I think the way that we can reduce the fear is by being responsible as employers, and then engaging people in bringing AI to life and being cocreators of how it can help enhance their own performance, job satisfaction, and accomplishments as a company. But I do think that fear will only go away over time as people see that.

How do you think about preparing workers for this “evolution,” and upskilling for AI skills?

We definitely take a really long view on talent. You are probably an expert on the dialogue, concerns, etc. around “Is there a workforce shortage?” And “How do we get more technical workers?” So Intel has, through our foundation, long-standing investments in the K-12 space. And then through the foundation or our university teams, a lot of direct donating into the postsecondary space.

But in particular, our Digital Readiness Program is really focusing on how we get our current and future workforce the right skills and mindsets. We’re collaborating with 27 country governments and 23,000 institutions to train over 4 million people on AI and responsible AI. The way we do that is by providing curriculums and resources to support those governments and institutions in standing up those programs so that they don’t have to use their limited resources to create curricula or think about how to advance the topic.

Then we also have, in that vein, an AI Workforce program that we’ve created which provides community colleges with over 225 hours of preprepared course material that can be used. And then in tandem with the content for the courses, we provide professional training for the school faculty so that the faculty at the institutions then have additional training.

What do you think it means for an employer to be responsible about AI?

Being responsible as an employer, particularly for our employees, is to hire people who are passionate about our purpose and our mission. And then we want them to have a robust career and work for us for life. So part of why we wanted to update the job architecture is so all employees could know of all the jobs we have in the company transparently.

We have a really rich platform for people to be able to learn in the job they’re in, learn skills in other jobs, and continue to get an enhanced education. We encourage people to volunteer and participate in their communities. We know all of those things lead to more overall satisfaction, both at work and in people’s personal lives. And the two are inextricably linked.

At Intel, we love technology and we love to invent technology that can improve the lives of people on the planet. We have a lot of technical people . . . and we do it the right way. Whether that means we’re a responsible steward of the environment, or we want to have open and responsible use of AI, we really believe in this thread of technology for good. And that’s a standard that we hold ourselves to.

Intel has published some guiding principles for responsible use of AI. We’re an early voice in that regard. And I think companies are catching up to that, and I think there’s some commonality across them. But we’ve also used these principles to talk to our employees about AI.

Pat Gelsinger and our leaders are involved in helping at least give input to policy at the national and the international levels about the responsible use of AI. And in the HR community [we are] one of the voices trying to foster and share principles and ideas around the responsible use of AI in human resources. So we’ve just created some general principles around that. One of those principles is that humans should be at the center. AI can be an augmentation, but at the end of the day, humans should still be making the decisions.