
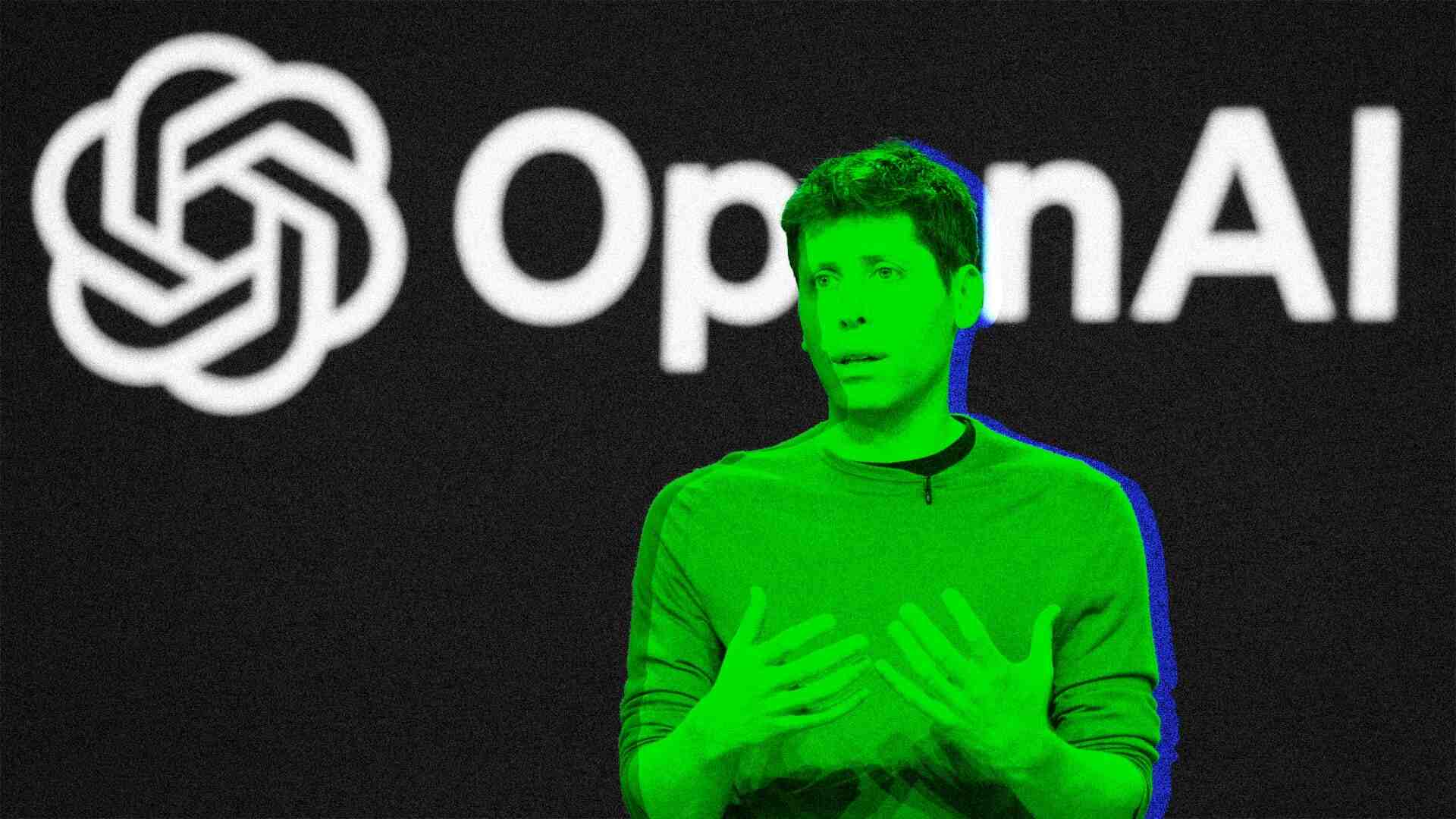

The fog around OpenAI CEO Sam Altman’s 2023 ouster is beginning to clear

New revelations about Altman’s honesty—or lack thereof—matter because the world needs to trust that his company takes AI safety seriously.

Welcome to AI Decoded, Fast Company’s weekly newsletter that breaks down the most important news in the world of AI.

WILL SAM ALTMAN BE HONEST ABOUT SAFETY AS THE STAKES GET HIGHER?

Last week Scarlett Johansson accused OpenAI CEO Sam Altman of ripping off her voice for the “Sky” persona in ChatGPT. The allegation seriously called into question Altman’s honesty. One crisis communications expert I spoke with said OpenAI’s response to the Johansson letter—including a story in the Washington Post that seemed to absolve the company—had a very “orchestrated” look to it.

The question of Altman’s honesty has carried over into this week, mostly because of new information about the reasons for the OpenAI leader’s (somewhat mysterious) firing by the company’s board last November. At the time, the board said only that Altman hadn’t been straight with them. Now, one of the board members who ousted Altman, researcher Helen Toner, has for the first time given concrete examples of how Altman was less than honest with the board. She said during an episode of The TED AI Show that:

- Altman did not inform the board before OpenAI launched ChatGPT to the public in November 2022. Board members found out about it on X (formerly Twitter).

- Altman didn’t inform the board that he owned the OpenAI Startup Fund “even though he was constantly claiming to be an independent board member with no financial interest in the company,” Toner said. The startup fund financed startups building on top of OpenAI models.

- Toner says Altman lied to board members about a research paper she cowrote, in an effort to get her removed from the board. Altman was reportedly angered by the paper’s comparison of OpenAI’s and rival AI company Anthropic’s approaches to safety.

- On several occasions, Toner says, Altman misled the board on the number of “formal safety processes” OpenAI had in place. “It was impossible to know how well those safety processes were working or what might need to change,” Toner said.

“The end effect was that all four of us who fired him came to the conclusion that we just couldn’t believe what Sam was telling us,” Toner said. “That’s a totally unworkable place to be as a board, especially a board that’s supposed to be providing independent oversight over the company, not just helping the CEO to raise more money.”

This all relates to safety, and whether OpenAI will put in the hard work of making sure that its increasingly powerful models and tools can’t be used to cause serious harm. With OpenAI’s foundation models finding their way into the business operations of many organizations, any shortcoming could have far-reaching effects.

HOW BIG COMPANIES ARE USING GENERATIVE AI RIGHT NOW

Big companies, despite some setbacks, continue pushing to integrate generative AI into their workflows. While every company has different needs, it’s possible to identify some general patterns in how enterprises are using generative AI today.

Bret Greenstein, who leads PricewaterhouseCoopers’s generative AI go-to-market team, says AI tools are changing the way coders work within enterprises. Once they start using AI to create requirements and test cases, and optimize code, they don’t want to go back to writing things from scratch. “Once you see it, you can’t unsee it,” he says. PwC announced this week that it’s now a reseller of OpenAI’s ChatGPT Enterprise solution, which includes access to the GPT-4 model, the DALL-E image generation tool, and enhanced security features.

Companies are aggregating huge volumes of customer feedback, Greenstein tells me, then using large language models to find patterns in the data that are addressable and actionable. “Most businesses look for high volumes of documents either coming in or going out—high volumes of labor transcribing, reading, summarizing, and analyzing those kinds of content, whether it’s code or customer feedback or contracts,” he says.

A company in a highly regulated industry may need to constantly assess whether new business ideas, contracts, or customer requests comply with its own policies or with regulations (such as privacy laws). A large language model can be used to read and compare the documents, then create a summary of compliance issues, Greenstein tells me.

“I think this idea of starting from scratch, like a person reading something the first time or typing something from a blank screen, is the old way,” Greenstein says. “The new way is letting AI read it first, let AI draft it first, then from that you apply your judgment and critical thinking and accountability to make it great.”

Big companies think there’s a lot of value in letting tech-savvy employees create custom apps, or custom GPTs, instead of making them rely on highly skilled data scientists to create them. “In the really hard use cases, the really big transformations, you still have experts of data scientists and data engineers, but there are probably 100 times more use cases that are just super users producing something with the knowledge they have and their understanding of the way they work, and helping other people to take advantage of that skill,” Greenstein says.

AI SAFETY RESEARCHER JAN LEIKE LANDS AT ANTHROPIC

Former OpenAI safety researcher Jan Leike has taken a job at rival Anthropic after his high-profile departure from OpenAI. Leike left OpenAI (along with company cofounder Ilya Sutskever) after the “superalignment” safety group he led was disbanded. Leike will lead a similar group at Anthropic. “Superalignment” refers to a line of safety research focused on making the superintelligent AI systems of the near future harmless to humans. Leike is the latest in a growing list of safety researchers who have departed OpenAI, adding to concern that OpenAI is giving short shrift to safety.

“Over the past years, safety culture and processes have taken a backseat to shiny products,” Leike said of OpenAI on X. Anthropic was founded by a group of ex-OpenAI executives and researchers who espouse a more proactive and rigorous approach to AI safety. Anthropic has closed the performance gap with OpenAI in recent years, with its Claude Opus multimodal models challenging OpenAI models on a number of performance benchmarks.