
Apple’s artificial intelligence is firmly rooted in reality

Apple customers already rely on the security of the company’s devices and cloud servers, and adding AI to the mix likely won’t change that calculation.

Welcome to AI Decoded, Fast Company’s weekly newsletter that breaks down the most important news in the world of AI. You can sign up to receive this newsletter every week.

WE FINALLY GET A LOOK AT APPLE’S BIG AI PLAY

The past couple of years of AI have been marked by grandiose claims that the technology could revolutionize the economy (or even destroy the world), along with no shortage of corporate intrigue.

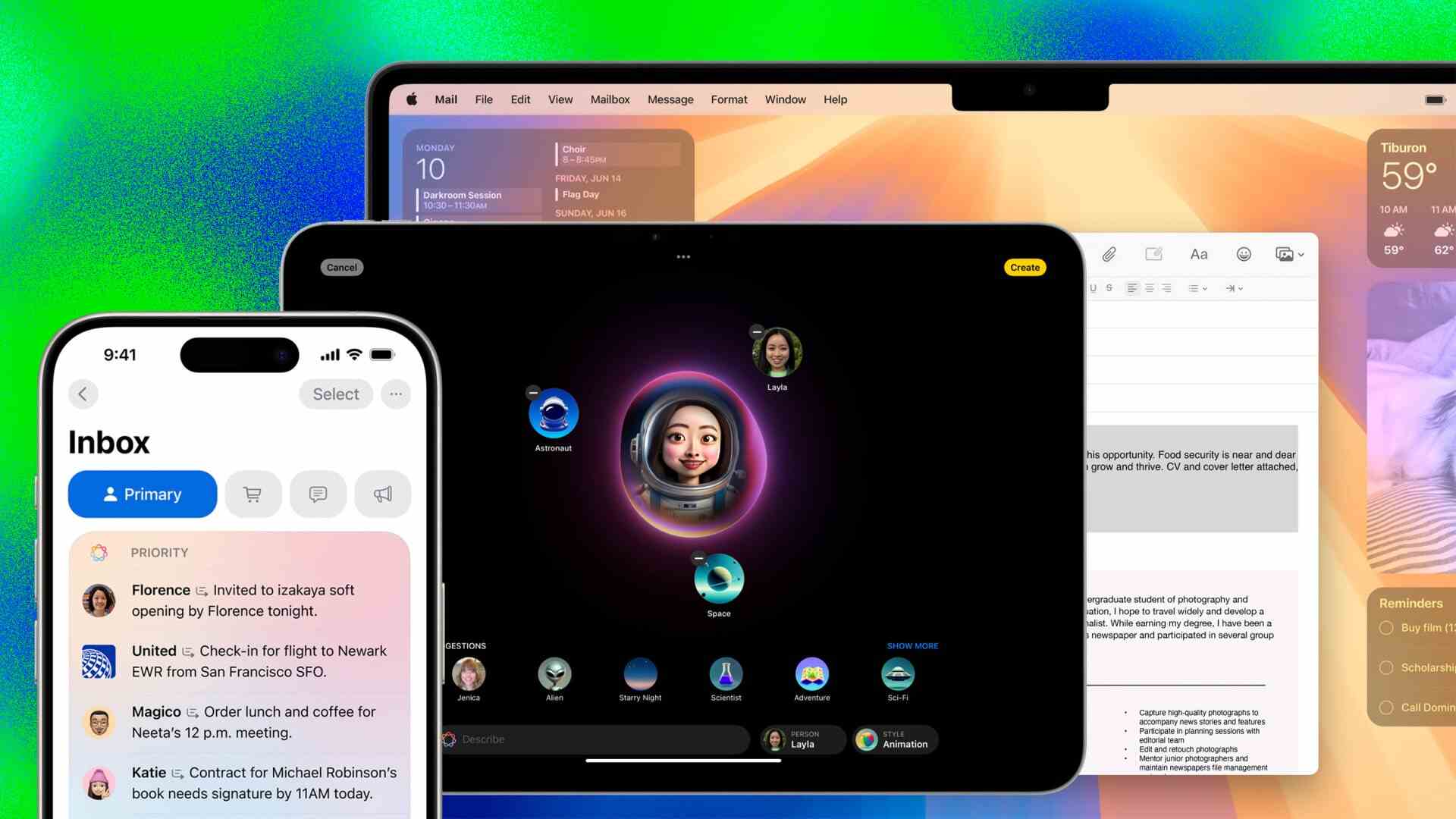

So what’s most notable about Apple’s Monday announcement of new generative AI features may be its understatedness. Apple Intelligence (as AI apparently stands for in Cupertino) tools coming to the Mac, iPhone, and iPad will help draft documents, search for photos, and summarize long email threads—all proven and practical use cases familiar from other AI products. A souped-up Siri will understand more complex commands and queries—“Send the photos from the barbecue on Saturday to Malia” and “When is mom’s flight landing?” were two examples from Apple—and users will be able to create “fun, playful images” and custom emoji to add to their texts and documents.

It’s AI as a practical tool and slightly cringey texting toy, helping Apple customers do the things they already do a little faster and with a bit more flair. There’s no claim the technology will radically reshape your routine—you still have to keep up with your work emails and pick up mom from the airport, and if like many longtime Apple customers you have a creative job or hobby, it’s unlikely to be threatened by “fun, playful” Genmoji.

Apple tends to stand out from the pack when it comes to privacy and security, and indeed the company emphasized that much of its processing will be done on users’ own devices, with other work done in Apple’s secure private cloud. (Users will be able to send some tasks to OpenAI’s ChatGPT, but only if they explicitly choose to do so.) That approach gives Apple customers another reason to upgrade their aging devices, since only new products will have access to many on-device features, but it will also likely help soothe customer concerns about AI probing their most sensitive data, including texts, photos, and emails.

Apple customers already rely on the security of the company’s devices and cloud servers, and adding AI to the mix likely won’t change that calculation, giving Apple a powerful advantage over potential competitors that users would be less likely to trust to slurp up data en masse.

ADOBE’S TOS TUSSLE

Adobe continued to do damage control this week after a mandatory terms-of-service update to Photoshop and Creative Cloud software seemed to give the company new rights to access user content and potentially use it to train artificial intelligence tools like Firefly.

The company quickly attempted to clarify on social media and its corporate blog that it doesn’t train Firefly’s AI on customer content and that it doesn’t claim any ownership of customers’ work. Part of the problem is that, as Adobe VP of products, mobile, and community Scott Belsky explained on X, tech companies do feel compelled by copyright laws to claim broad legal permission to ensure features from search to sharing don’t bring unintended legal liabilities. That’s partly what’s behind periodic scares about social media platforms appearing to claim the rights to user photos and posts.

But there’s also the fact that Adobe software is a de facto industry standard for much of the creative world, which is already on edge about AI copying and competition, leaving users with few practical alternatives if terms get onerous. “It’s not that Adobe necessarily made a mistake with its terms of service,” wrote Tedium’s Ernie Smith. “It’s that goodwill around Adobe was so low that a modest terms change was nearly enough to topple the whole damn thing over.”

Also, consumers have seen other companies treat any data they can get their hands on as fodder for AI model training or lucrative licensing arrangements with AI businesses, with some of those companies pointing to recently changed terms they say make such deals legal.

On Monday, Adobe issued another clarification of its terms, reiterating it had no nefarious intentions and pledging to revise the official terms by next week. “We’ve never trained generative AI on customer content, taken ownership of a customer’s work, or allowed access to customer content beyond legal requirements,” the company said. “Nor were we considering any of those practices as part of the recent Terms of Use update.”

Adobe also explained it doesn’t scan customer data stored locally and promised to release still more information within a few days. It’s unlikely to be the last company forced to tread carefully in updating even what may feel like boilerplate agreements in the age of AI.

AWS AND OTHERS UNVEIL AI CERTIFICATION PROGRAMS

Businesses wanting to ramp up their use of artificial intelligence say they’re willing to pay more to hire and retain employees who know how to use AI. But many don’t yet have internal training in place, and generative AI is still too new to expect many job candidates to have years of experience or a formal degree in the subject.

So it’s unsurprising that Amazon Web Services announced two new AI certifications this week. Exams for “AWS Certified AI Practitioner” and “AWS Certified Machine Learning Engineer-Associate” will become available for registration in August, and relevant courses covering subjects like prompt engineering and mitigating bias are already online. Cloud rival Microsoft already has its own comparable sets of classes and certifications, and Google has its own training and certification option, as the companies see AI as a new driver of growth for their computing businesses and look to get potential customers up to speed on using the technology.

The cloud providers have a clear interest in helping people learn to use AI (especially on their technology platforms), but they’re not alone in offering training and certifications in the subject. Universities and community colleges are increasingly offering both short courses and full degree programs in AI—sometimes also empowered by deals with big tech companies—and not just through computer science departments focused on training engineers.

Business schools are training executives to understand the new technology, and the Sandra Day O’Connor College of Law at Arizona State University announced Tuesday it would offer law students certificates in AI. “This will position them for success in the rapidly changing legal landscape, where AI will play an increasingly important role,” said Stacy Leeds, the Willard H. Pedrick dean and Regents and Foundation professor of law and leadership.

At the very least, such a certification could help catch the eye of a recruiter—or AI bot—sifting through résumés for tech-savvy candidates.