
This AI taught itself to do surgery by watching videos—and it’s ready to operate on humans

The new smart robot developed by Johns Hopkins and Stanford University researchers learned by watching videos of surgeries. Now it can perform procedures with the skill level of a human doctor.

“Imagine that you need to get surgery within a few minutes or you may not survive,” Johns Hopkins University postdoctoral researcher Brian Kim tells me over email. “There happen to be no surgeons around but there is an autonomous surgical robot available that can perform this procedure with a very high probability of success. Would you take the chance?”

It sounds like a B-movie scenario, but it’s now a tangible reality that you may encounter sooner than you think. For the first time in history, Kim and his colleagues managed to teach an artificial intelligence to use a robotic surgery machine to perform precise surgical tasks by making it watch thousands of hours of actual procedures happening in real surgical theaters. The research team says it’s a breakthrough development that crosses a definitive medical frontier and opens the path to a new era in healthcare.

According to their recently published paper, the AI achieved a performance level comparable to that of human surgeons without being explicitly programmed for the tasks. Rather than painstakingly programming a robot to operate, an approach the paper says has always failed in the past, the researchers trained this AI through imitation learning, a branch of artificial intelligence in which the machine observes and replicates human actions. This allowed the AI to learn the complex sequences of actions required to complete surgical tasks by breaking them down into kinematic components: simpler actions, like joint angles, positions, and paths, that are easier to understand, replicate, and adapt during surgery.
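In its simplest form, imitation learning (often called behavior cloning) is ordinary supervised learning: record pairs of what a demonstrator observed and what they did, then fit a function that maps one to the other. The toy sketch below shows only that structure. It is not the researchers' model, which learns from camera images with a far larger network; the one-dimensional "distance to target" observation, the step-size action, and the name `fit_linear_policy` are all hypothetical.

```python
# Toy behavior cloning: learn a policy from (observation, action) pairs
# recorded from a demonstrator, using ordinary least squares.
# Hypothetical setup, not the published system.

def fit_linear_policy(demos):
    """Fit action = w * observation + b to demonstration pairs."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    var = sum((x - mean_x) ** 2 for x, _ in demos)
    w = cov / var
    b = mean_y - w * mean_x
    return lambda obs: w * obs + b

# Hypothetical demonstrations: observation = distance (mm) from tool to target,
# action = step (mm) the human operator took toward it.
demos = [(10.0, 5.0), (6.0, 3.0), (4.0, 2.0), (2.0, 1.0)]
policy = fit_linear_policy(demos)
print(policy(8.0))  # 4.0
```

Real systems replace the straight-line fit with a deep neural network and the scalar observation with video frames; what carries over is the supervised "watch, then imitate" loop.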

How they did it

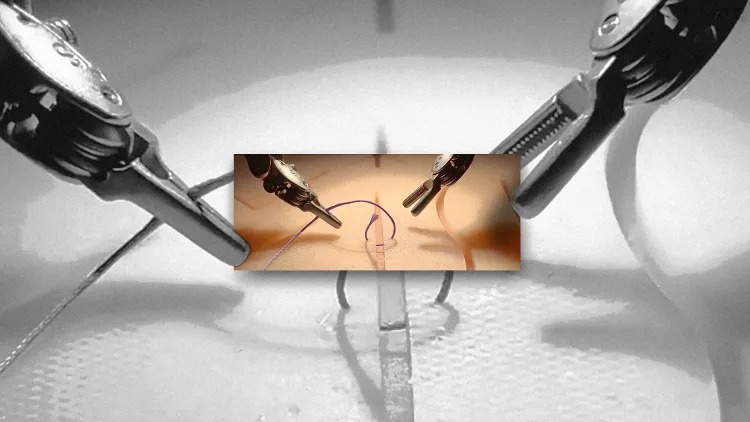

Kim and his colleagues used a da Vinci Surgical System as the hands and eyes for this AI. Before testing the AI on this established robotic platform (currently used by surgeons to conduct precise operations locally and remotely), they ran virtual simulations, which allowed for faster iteration and safety validation before the learned procedures were applied to actual hardware.

“All we need is image input, and then this AI system finds the right action,” Kim says. The da Vinci robots also supplied the training videos: more than 10,000 recordings captured by wrist cameras during human-driven surgeries, which the AI analyzed to learn three surgical tasks: handling and positioning a surgical needle, carefully lifting and manipulating tissue, and suturing. All three are complex tasks that require fine, extremely sensitive control. This large-scale dataset enabled the AI to learn nuanced differences between similar surgical actions, such as the appropriate tension needed to handle tissue without causing damage.

Those training videos are just a very small part of an extensive repository of surgical data. With nearly 7,000 da Vinci robots in use worldwide, there is a vast library of surgical demonstrations to observe and learn from, which the research team is now using to expand the AI’s surgical repertoire for a new, not-yet-published study.

“In our follow-up work, which we will be releasing soon, we study whether these models can work for long-horizon surgical procedures involving unseen anatomical structures,” Kim writes, referring to complex surgical procedures that require adapting to the patient’s condition at any given time, like when operating on a serious internal wound.

During development, the team worked closely with practicing surgeons, who evaluated the model's performance and provided critical feedback (particularly on the subtle handling of tissue) that was incorporated into the learning process.

Finally, to validate the model, they used a separate dataset that was not included in the initial training to create virtual simulations, ensuring the AI could adapt to new and unseen surgical scenarios before testing it in physical procedures. This held-out validation confirmed the robot's ability to generalize rather than merely memorize actions, which is crucial given the number of potential unknowns that may arise in the operating theater.
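The difference between generalizing and memorizing is easy to see in a toy setting. The hypothetical sketch below holds out 20% of some synthetic demonstrations, then compares a "policy" that simply looks up observations it saw in training with one that fits a rule from them. The data, the 20% split, and the helper names are illustrative assumptions, not the team's actual validation protocol.

```python
import random

def split_held_out(examples, held_out_fraction, seed=0):
    """Shuffle once with a fixed seed, then carve off a held-out set."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_held = int(len(shuffled) * held_out_fraction)
    return shuffled[n_held:], shuffled[:n_held]

def success_rate(policy, examples, tol=1e-6):
    """Fraction of examples where the policy reproduces the expert's action."""
    hits = 0
    for obs, expert_action in examples:
        predicted = policy(obs)
        if predicted is not None and abs(predicted - expert_action) <= tol:
            hits += 1
    return hits / len(examples)

# Hypothetical data: the expert's action is half the observed distance.
examples = [(float(x), 0.5 * x) for x in range(1, 101)]
train, held_out = split_held_out(examples, held_out_fraction=0.2)

# A "memorizer" only knows observations it saw during training (None otherwise).
table = dict(train)
memorizer = table.get

# A "generalizer" fits one slope through the origin by least squares.
w = sum(x * y for x, y in train) / sum(x * x for x, _ in train)

def generalizer(obs):
    return w * obs

print(success_rate(memorizer, train), success_rate(memorizer, held_out))  # 1.0 0.0
print(success_rate(generalizer, held_out))  # 1.0
```

Both policies look perfect on the training data; only the held-out set reveals that the lookup table has learned nothing transferable.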

It all worked beautifully. The robot’s model learned these tasks to the level of experienced surgeons. “It’s really magical to have this model where all we do is feed it camera input, and it can predict the robotic movements needed for surgery,” Axel Krieger, assistant professor in mechanical engineering at Johns Hopkins and senior author of the study, says in an emailed statement. “We believe this marks a significant step forward toward a new frontier in medical robotics.”

A breakthrough discovery

One of the keys to this success is the use of relative movements rather than absolute instructions. In the da Vinci system, the robotic arms might not end up exactly where they are intended due to tiny discrepancies in joint movement that accumulate over multiple actions and can eventually lead to significant errors—especially in a sensitive environment like surgery. The team had to find a solution, so rather than depending on those measurements, it trained the model to move based on what it observes in real time while performing the operation.
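Why relative motion helps can be shown with a one-dimensional toy model. It assumes the robot's own kinematic reading of where its tool is drifts a little further from the truth after every move (standing in for the accumulated joint discrepancies described above), while the camera sees the tool's true position. The function name, drift size, and target are hypothetical; the published system predicts motions from images with a learned model, not with this arithmetic.

```python
def final_error(mode, target=50.0, steps=10, drift_per_move=0.3):
    """Return how far (mm) the tool ends up from the target after `steps` moves.

    mode="absolute": each command is a goal position in the robot's own
    kinematic frame, which is off by an accumulated bias.
    mode="relative": each command is a displacement computed from what the
    camera sees, i.e. the tool's true offset from the target.
    """
    true_pos = 0.0
    bias = 0.0  # reported position minus true position
    for _ in range(steps):
        reported_pos = true_pos + bias
        if mode == "absolute":
            command = target - reported_pos  # trusts the biased internal reading
        elif mode == "relative":
            command = target - true_pos      # recomputed from the observed scene
        else:
            raise ValueError(f"unknown mode: {mode}")
        true_pos += command                  # the move itself is executed faithfully
        bias += drift_per_move               # joint error accumulates with every move
    return abs(target - true_pos)

print(round(final_error("absolute"), 3))  # 2.7
print(final_error("relative"))            # 0.0
```

The absolute controller ends each move where its drifting internal reading says the target is, so its error grows with the accumulated drift. The relative controller recomputes the displacement from the observed scene on every step, so drift in the robot's self-reported position never enters the command.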

But the main innovation here is that imitation learning removes the need for manual programming of individual movements. Before this breakthrough, programming a robot to suture required hand-coding every action in detail, an approach that was error-prone and a major limitation in advancing robotic surgery, Kim says.

The development effort and lack of flexibility limited what robots could do and made it extremely difficult to teach them new tasks. Imitation learning, however, allows the robot to adapt quickly to anything it can watch, learning similarly to a surgical student. “[We] only have to collect imitation learning data of different procedures, and we can train a robot to learn it in a couple of days,” Krieger says. “It allows us to accelerate toward the goal of autonomy while reducing medical errors and achieving more precise surgery.”

To quantify how well the AI works, the researchers defined key performance metrics, such as precision in needle placement and consistency in tissue manipulation, and measured them in a set of physical mock surgical environments that included synthetic tissue simulators and surgical dummies. The results left them speechless. “The model is so good at learning things we haven’t taught it,” Krieger says. “For instance, if it drops the needle, it will automatically pick it up and continue.”
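The article does not give the researchers' exact metric definitions. As an illustrative assumption, a needle-placement metric could be the mean distance between planned and actual entry points across trials, with the standard deviation of that distance serving as a simple consistency measure; the same idea could apply to tissue-manipulation measurements. A sketch with made-up coordinates in millimeters:

```python
import math
import statistics

def placement_metrics(planned, actual):
    """Return (mean error, standard deviation) of placement errors in mm.

    Lower mean = more precise placement; lower spread = more consistent trials.
    """
    if len(planned) != len(actual) or len(planned) < 2:
        raise ValueError("need matching lists with at least two trials")
    errors = [math.dist(p, a) for p, a in zip(planned, actual)]
    return statistics.mean(errors), statistics.stdev(errors)

# Hypothetical trials: planned vs. actual needle entry points (x, y) in mm.
planned = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
actual = [(1.0, 0.0), (0.0, 2.0), (3.0, 0.0)]
mean_err, spread = placement_metrics(planned, actual)
print(mean_err, spread)  # 2.0 1.0
```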

Such adaptability matters not only for learning new skills but also for handling unpredictable events in live surgeries, like an artery rupturing or a patient’s vitals changing suddenly. Additionally, the model demonstrated improved time efficiency, reducing the completion time for standard surgical tasks such as suturing by approximately 30%, which is particularly promising for time-critical operations.

The future

The scientists envision a scenario where these robots assist surgeons in high-pressure situations, enhancing their capabilities and minimizing human error. These future AI surgeons will significantly expand the availability of surgical care, making high-quality medical interventions available to a broader population, initially and especially in underdeveloped areas lacking medical infrastructure. Diagnostic AI is already helping make medicine more affordable in those parts of the world, so surgery is the next logical step.

Before all that happens, however, this autonomous learning AI needs to prove that it is reliable in any given situation, “at least as good or better than a human surgeon, statistically speaking,” Kim says. He tells me that one way to ensure safety is through statistics: “If the model is able to perform 1,000 surgeries without any complications, including really hard edge cases, then that might be a decent way to measure its reliability.”
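Kim's benchmark can be made concrete with a standard statistical result (our illustration, not an analysis from the study). If n independent attempts all succeed, the exact one-sided 95% upper confidence bound on the true complication rate is 1 - 0.05^(1/n), which is close to the familiar "rule of three" approximation 3/n. The sketch below assumes every surgery is an independent trial with the same underlying risk, an assumption real caseloads of varying difficulty would not satisfy.

```python
import math

def zero_failure_upper_bound(n_trials, confidence=0.95):
    """Exact one-sided upper bound on the failure rate after n_trials
    independent attempts with zero failures (assumes identical risk per trial)."""
    alpha = 1.0 - confidence
    return 1.0 - alpha ** (1.0 / n_trials)

def trials_needed(max_rate, confidence=0.95):
    """Smallest n such that n straight successes bound the failure rate
    at or below max_rate at the given confidence."""
    alpha = 1.0 - confidence
    return math.ceil(math.log(alpha) / math.log(1.0 - max_rate))

print(f"{zero_failure_upper_bound(1000):.2%}")  # 0.30%
print(3 / 1000)  # rule-of-three approximation: 0.003
```

Under these assumptions, 1,000 complication-free operations would support a complication rate below roughly 0.3%, not zero; demonstrating a rate below 0.1% at the same confidence would take about 3,000.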

Their work shows that if the model has been trained on similar situations, it is able to handle them. “The model is very good at generalizing and interpolating based on data it has been trained on before,” he says. If the model encounters a slightly different organ structure, it should be able to recognize it and interact appropriately, thanks to training designed to generalize across a variety of anatomical and situational challenges, like patients’ anatomical differences, unexpected bleeding, or tissue abnormalities.

Regulations and ethics

There are also ethical and regulatory challenges that need to be addressed before such an AI can be deployed in real surgical environments without human oversight. The leap to autonomous surgical robots introduces new ethical concerns. There’s the issue of accountability: Who is going to be responsible if there’s a problem? The company that made the AI surgeon? The medical professionals supervising it (if there’s any supervision)? There’s also the question of patient consent, which will require educating both the person undergoing the surgery and the people around that person on what these AIs are, what exactly they can do, and what risks are posed in comparison to human surgeons.

Kim admits that right now the future is in a gray area where everyone can merely speculate on what should or will happen. Regulatory authorities will have their hands full, from addressing accountability and ethical concerns when allowing AI surgeons to operate autonomously, to setting standards for obtaining informed consent from patients.

But when choosing between having an emergency, lifesaving procedure performed by an autonomous surgeon or having no treatment because a human surgeon isn’t available (like in a remote location or an underdeveloped area), Kim argues that the better choice is clear. I can easily imagine a near future in which—given statistical proof that AI surgeons operate safely—people start choosing AI robots over their human counterparts.

What’s next?

Beyond the ethical and legal challenges, more work is needed to enable practical implementation. Hospitals will need to invest in infrastructure that supports AI robotic surgery, including physical hardware and technical expertise for operation and maintenance. Additionally, training medical teams to manage the process will be critical. They will need to understand the machine and know when intervention is necessary, eventually transitioning human surgeons from direct surgical tasks to roles focused on supervision and safety.

At the practical level, the researchers envision a phased introduction, beginning with simpler, low-risk surgeries like hernia repairs and gradually advancing to more complex operations. A gradual approach will help validate the robot’s reliability while addressing regulatory and ethical concerns over time, as well as helping the population to trust AI to perform life-critical operations.

“We are still in the early stages of understanding what these machines can truly achieve,” Krieger says. “The ultimate goal is to have fully autonomous surgical systems that are reliable, adaptable, and capable of performing surgeries that currently require a highly trained specialist.”