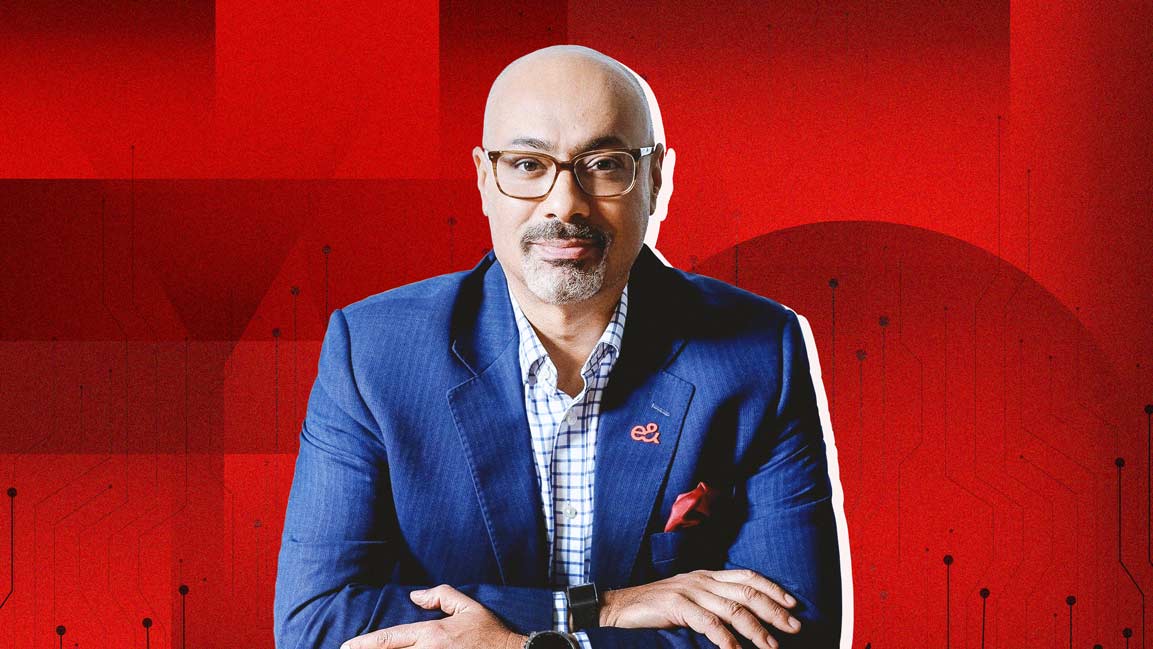

e&’s Hatem Dowidar on the Middle East’s role in AI’s defining decade

Hatem Dowidar, Group CEO of e&, explains why the next decade will decide whether artificial intelligence becomes a shared resource or a tool of inequality.

Whoever controls AI shapes the future. Its influence on economies, national security, and identity is profound. Governments are no longer asking whether AI matters. They are asking who wields it and who benefits.

In the UAE, this conversation centers on sovereignty.

“AI goes beyond efficiency. It is a cornerstone of sovereignty,” says Hatem Dowidar, Group CEO of e&, the Abu Dhabi–based technology group.

The UAE is building its AI capabilities with a focus on sovereignty, digital independence, and global competitiveness. Central to this effort is the UAE Sovereign Launchpad, a collaboration between Amazon Web Services, e&, and the UAE Cybersecurity Council. By combining the scale of a global cloud provider with national oversight, the initiative ensures both security and strategic control. It is expected to contribute US$181 billion to the UAE’s digital economy by 2033, while developing intellectual capital through AI-focused labs and training programs.

Dowidar emphasizes the importance of full ownership in this process. “By owning data, models, and infrastructure, nations and organizations secure their digital future,” he says. “The UAE Sovereign Launchpad embeds AI as a strategic priority to drive independence and global competitiveness, aligning public vision with private innovation to scale solutions that uphold national priorities.”

For the UAE, AI is the new energy. Just as dependence on foreign oil once shaped geopolitics, reliance on external AI providers could leave nations vulnerable. By localizing control over data and infrastructure while engaging in global partnerships, the UAE aims to build resilience.

BUILDING TRUST IN AI

If sovereignty sets the foundation, trust determines whether AI can stand the test of time. Concerns about opaque algorithms and biased outputs dominate headlines, and for Dowidar, trust cannot be an afterthought.

“Trust in AI is born from transparency. If we want people to use these systems, they need to be able to understand them, to see that they’re fair and accountable. You can’t simply bolt on good governance as an afterthought; it must be part of the original design. When we create open and easy-to-understand AI, it gives people the confidence to embrace it. It’s as simple as that.”

In 2024, e& launched a Responsible AI Framework built around eight principles, with risk assessments, governance structures, and oversight by an AI Governance Steering Committee. With board-level oversight, AI is now embedded in the group’s enterprise risk framework. Partnerships with IBM add automated monitoring for bias and compliance, a capability highlighted earlier this year at the World Economic Forum in Davos.

THE HUMAN SIDE OF AI GOVERNANCE

“AI has reached a point where the question is no longer if it works, but how and why,” says Dowidar.

Guiding AI requires a new kind of leadership. “Executives have to set the tone, define the risks, and take full ownership of the outcomes. That’s why at e&, we’ve made AI governance a foundation of our enterprise risk strategy, with leaders driving the conversation instead of just reacting to it.”

This model embeds AI oversight within the company’s Board Risk Committee, treating it as a strategic matter rather than a backroom issue. At Davos 2025, e& and IBM unveiled a joint generative AI governance solution to automate risk management and compliance monitoring, demonstrating that governance must evolve as quickly as the technology.

Dowidar sees this approach as a competitive advantage. “No single player can govern AI alone,” he says. “Public–private partnerships are essential to co-design frameworks that protect societies and allow innovation to flourish.”

GOVERNANCE BY DESIGN

The question is no longer whether AI should be governed, but how to embed governance into its DNA.

“Governance must start from day one,” Dowidar says. “If you wait until a model is built to think about ethics, accountability, and safeguards, it’s already too late. At e&, we weave governance into every stage, from partnering with vendors to sourcing data to developing employee tools and customer-facing systems. It’s part of our design language.”

This “governance by design” approach evaluates vendor partnerships, audits data sources, and reviews system outputs before deployment. By embedding oversight early, the company can anticipate risks, clarify responsibilities, and demonstrate to customers and regulators that it treats AI as a strategic capability with societal impact.

REGULATION, INCLUSION, AND ACCESS

As AI becomes pervasive, questions of regulation, fairness, and accessibility are increasingly urgent.

“AI cannot become a luxury for a select few,” says Dowidar. “We have to treat this as a shared responsibility – governments, regulators, and industries all need to work together. At e&, we’re investing in people, from the boardroom to the front lines. We’re not just democratizing access to technology but empowering everyone, from non-technical staff to women and youth, to be part of this future.”

Initiatives like Citizen X equip employees with AI fluency, while executive training with Emeritus prepares leaders for oversight. The AI Graduate Programme supports Emirati youth, with 62 percent female participation. Partnerships with Microsoft extend skilling programs to small and medium-sized businesses, while AI platforms developed with UNDP and Datalyticx address climate and health challenges across the Arab States.

“AI should be treated like critical infrastructure. When it becomes a fundamental pillar of our society, accessibility is a matter of social justice and economic stability,” Dowidar notes. “That’s why building a responsible and inclusive digital ecosystem is a strategic investment.”

e& has pledged US$6 billion to expand connectivity across 16 countries through the ITU’s Partner2Connect coalition.

SHAPING A RESPONSIBLE AI FUTURE

The next decade in the Middle East will be defined by whether the region leads in AI rather than simply adopts it. Countries like the UAE are embedding AI into national strategies, education, and institutions to build a sustainable ecosystem that serves current and future generations. Initiatives such as integrating AI into the national curriculum and establishing institutions like MBZUAI demonstrate a commitment to ensuring AI is both a tool for today’s innovators and a foundation for future growth.

“The last thing we should do is simply consume AI. The Middle East’s ambition is far greater than that. Over the next decade, AI governance in our region will be defined by three things: trust, sovereignty, and inclusion,” Dowidar says.

Organizations must go beyond innovation and focus on governance, leadership, and inclusion to ensure AI benefits society.

“If we get governance, leadership, and inclusion right, the Middle East can lead the world in responsible AI. The challenge is immense, but so is the opportunity. The next decade will define whether AI becomes a tool for everyone or a privilege for a few,” Dowidar adds.