Leaders from Box, Meta, and LinkedIn on how AI is reshaping the future of work

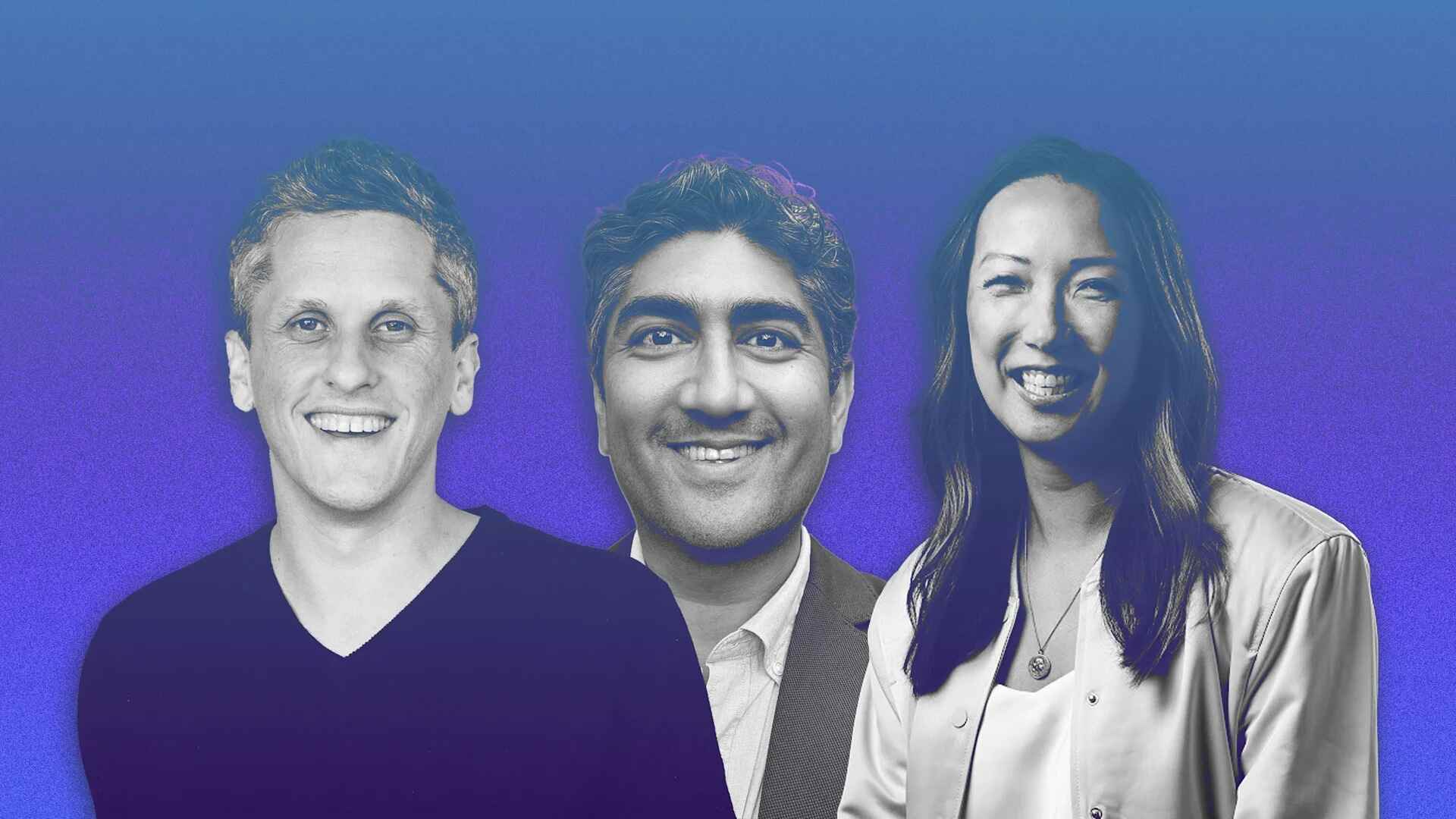

Box CEO Aaron Levie, LinkedIn chief economic opportunity officer Aneesh Raman, and Meta head of business AI Clara Shih share insider perspectives on AI at work.

AI is radically changing the future of the workplace—from redefining jobs to fueling the rise of so-called “workslop.” Live onstage at the Masters of Scale Summit in San Francisco, Box CEO Aaron Levie, LinkedIn chief economic opportunity officer Aneesh Raman, and Meta head of business AI Clara Shih share their insider perspectives on AI optimism, uncertainty, and navigating this unprecedented era.

This is an abridged transcript of an interview from Rapid Response, hosted by former Fast Company editor-in-chief Bob Safian and recorded live at the 2025 Masters of Scale Summit in San Francisco. From the team behind the Masters of Scale podcast, Rapid Response features candid conversations with today’s top business leaders navigating real-time challenges.

Aneesh, there’s a real debate about the impact of AI on work. Some say it will be positive, and some say it will be negative. You’ve said it will be spectacular. Why?

Raman: I’m at LinkedIn. We are, if nothing else, a platform of people. We go where the species goes. So for two years, I have thought about human intelligence as my core focus. . . . For humans, work has kind of sucked since the industrial age. We have turned ourselves into efficiency machines. Whether you’re at the assembly line or sending emails, it’s more, better, faster. More, better, faster. . . . That isn’t who humans are. AI is going to out-efficiency us. Robots are going to out-efficiency us.

That’s okay. We became the apex species—not because we were the most efficient, but because we were the most imaginative and the most innovative. . . . AI is going to force us finally to broaden our view of human intelligence. I’m pessimistic about our state of being ready for that because it’s entirely new systems of education, employment, and entrepreneurship. But we’re going to have to fix that, and that’s great, I think.

Clara, we’ve heard this term that’s become very popular: “workslop.” And AI-generated work is creating more work. It’s like volume over quality. . . . Is workslop just a phase for AI? Is it like a bug that we’re going to fix, or is this a feature and something that we’re always going to have to be vigilant about when we’re in a world where so much can be created so easily?

Shih: I think there’s always been workslop. I’ve been responsible for some workslop, especially earlier in my career. I think certainly that AI makes it easier to create lots of workslop, just like any new technology. I can imagine the very first spreadsheets, when they were invented, people weren’t sure how to use them. The features probably didn’t include checking the formulas, and so there were probably some really bad, incorrect spreadsheets. The same thing is happening now.

Raman: Like any tool, use it. Do not misuse it. Do not overuse it. There’s already lots of research. MIT has great research with brain scans that if you overuse AI, you’ll deplete your ability to grow critical thinking skills. AI helps individuals get started, but if everyone’s using it across a team, sameness creeps in. So it’s all about how you use it.

Aaron, you founded Box. You know how important and distinctive a culture can be in an organization. Each company is different. All of our cultures—each one is different. When we add AI agents to our work, do we need to orient them like we do new employees? If we all use off-the-shelf AI, are we going to end up with off-the-shelf culture?

Levie: Certainly. And by the way, I’m actually fine with lots of workslop because all I see is lots of files that are going to get generated.

That’s good for you.

Levie: I think everybody here is familiar with context engineering. It’s sort of a simple analogy—which is if you have an employee off the street, a superintelligent person, who doesn’t know what kind of job they’re in. They just appeared. And you’re, like, “Okay, today you’re a lawyer and the next day you’re a marketer and the next day you’re a coder.” That’s kind of what an AI model is. And so you have to give it the context necessary to be able to perform its tasks.

And so maybe, actually, ironically, if anything, you’ll have to get more context than the person would. It’s actually very easy for an employee to pick up the general cultural sort of norms and work practices, because they can just look over at one other person and say, “Oh, I see the way that you just collaborated over there.” And so we’re more of a collaborative culture versus we just make really quick decisions and then move forward. An AI agent again doesn’t know, “Did I just join SpaceX, or did I join Patagonia?” I’m assuming those are two different ends of the cultural spectrum. So you’re going to have to tell the agent effectively, like, “Who are you right now? And here are the norms in our organization, and here is the context about the business process that you’re involved in.”

Clara, I talked to one CEO last week who said that he’s being pressured to adopt AI in areas that he’s not sure are actually beneficial for the business. But he feels like, “Oh, I’m getting this push from all different parts of the organization, investors, and the board.” How do leaders strike a balance between “I got to be in this” versus “It’s not really showing any measurable impact yet”?

Shih: I see this all the time from various leaders that I meet with. I think it’s first being hands-on and really getting in there and understanding the capabilities. Because I think with that judgment, with that firsthand experience, only then can leaders really know, “Okay, I want to apply it here but not here.” Another really great success formula is splitting up the team, having people focus on immediate use cases. What can I unlock today that will show me ROI this quarter, next quarter, versus what are the bigger bets where I just see the secular trend, and we have to skate to where the puck is going?

Levie: Here’s one pitfall that a lot of existing organizations will fall into, like us included. When I walk around and try and talk about what kind of processes we can bring automation to, I think there is this idea that we have this sort of end-state utopian view of AI can do anything and can automate anything. The reality is that if you drop AI into today’s business process, it’s going to actually do very little, and you have to actually re-engineer your workflow and you have to re-engineer your process. I think a lot of times, unfortunately, you’ll see people try and drop AI into a process where, even with the best automation, you’re going to get a 10% gain or whatnot of that workflow.

It’s not world-changing.

Levie: It’s not world-changing, but it’s because you didn’t think about re-engineering the workflow for AI. And we thought AI would work how we do. It turns out it might be the case that we have to work how AI does, and we have to be actually in service of the agent to make it most productive—as opposed to this unfortunate reality where we probably thought it would make us productive.

It’s a little terrifying. Your job is to make the AI more productive?

Levie: Totally. Well, so there was some off-site 15 years ago where one of our engineering managers told me about the inverted pyramid. His job is to enable all the people below him technically in a hierarchy, but to be as productive as possible. And it was sort of this inversion of, like, he works for them to make them as effective as possible. And the reality is that that’s kind of what we’re going to be doing with AI agents for quite some time, because we’re going to be working to make them effective. . . . And if you look at the five- and 10- and 20-person startups that are AI-native fully, they don’t have a single business process. That’s basically what they’re doing. They’re working to support agents to be supereffective, and that’s just a totally different way to work.

Raman: Make them more productive to make you more impactful.

Shih: Yeah. Help you help me.

Raman: I think we have to be affirmatively pro-human. It’s hard to state how radical it is.