
Apple Intelligence sure looks lame, but it shows how Apple can still win AI

Apple AI doesn’t need to be the smartest in the room. It just needs to drown out all the other conversations.

I want to tell you about a future. A future where, just by talking to your phone, you can book appointments, set timers, play music, check the price of a stock, and find out the weather.

These are examples of Apple’s new AI capabilities, announced this week at the Worldwide Developers Conference.

These are also examples of Apple’s new AI capabilities, announced in 2011 with the introduction of Siri.

Oh, how far we’ve come!

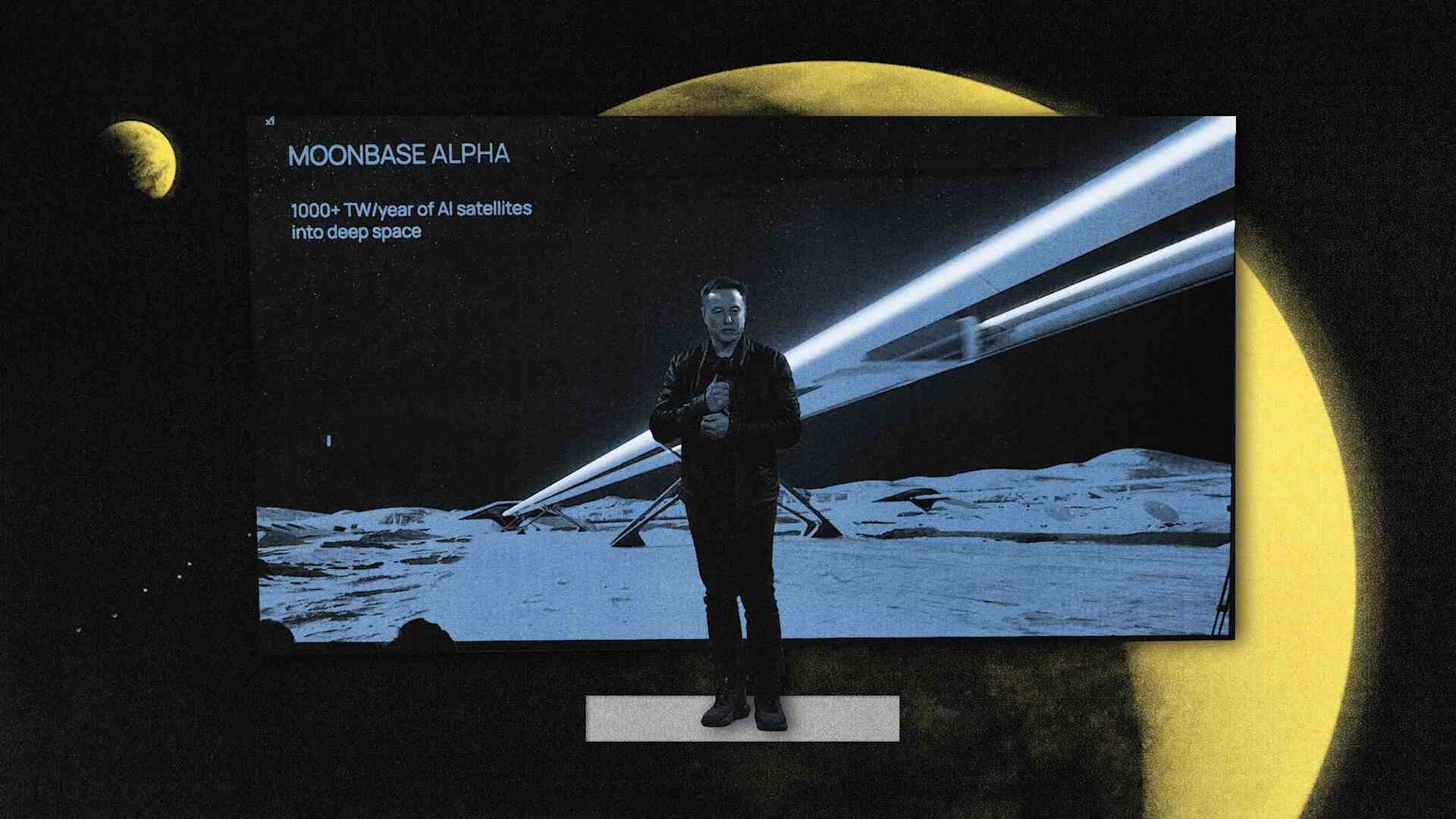

It’s hardly a secret that Apple has spent the past few years falling behind in the AI race. While Microsoft invested $10 billion in OpenAI to fuel an AI renaissance, Apple was wearing Vision Pro blinders. According to Bloomberg, it only accelerated spending on its own AI research late last year, earmarking a billion dollars a year for the investment (which sounds like a lot of money, until you realize that’s just 1/383rd of Apple’s total revenue, and about 1/100th of its profits).

Apple is behind, and it’s jogging briskly to catch up. It’s not only trailing OpenAI (which will provide an unspecified amount of support to Apple’s AI features via a new partnership); if you look at what Apple has actually shipped, it’s also behind Samsung, which has teamed closely with Google for features on its Galaxy phones, like circling anything on your screen to search it and translating calls in real time (the latter of which Apple will debut later this year).

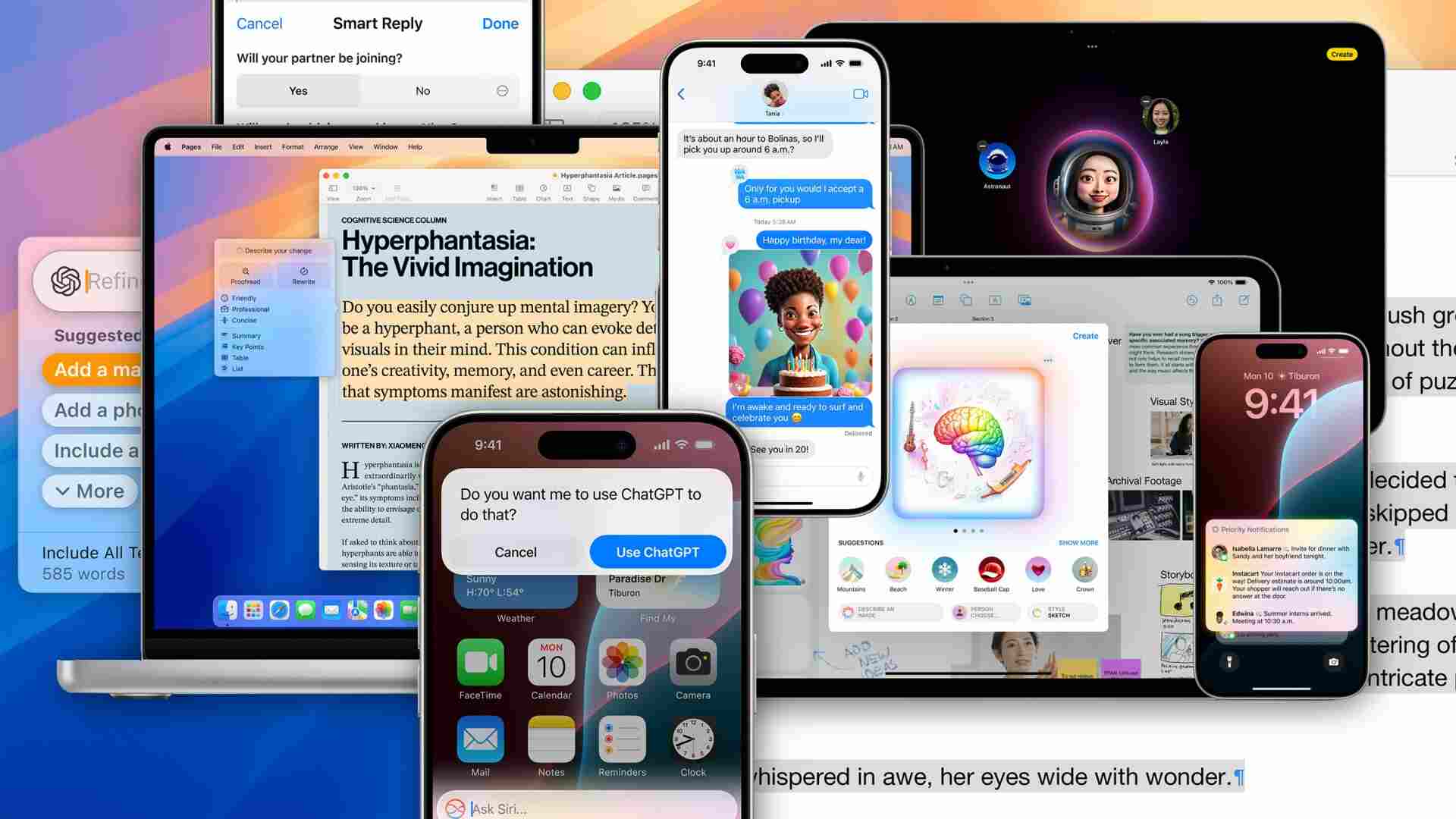

Aside from the pile of Siri 2011 features, Apple dedicated much of the presentation to showing off some bare-bones integrated generative AI tools, ranging from texting friends generated images in Messages to writing emails for you in Mail. It also demonstrated a series of experiments with the Apple Pencil, which could smooth your handwriting or complete math equations as you drew them. These are UX curiosities, for sure, but they’re the sort of tools that seem built to demonstrate tech more than solve actual problems.

APPLE’S AI ACE

However, as Craig Federighi, Apple senior vice president of software engineering, explained on stage, Apple isn’t interested in your typical approach to AI. The company wants to build “a personal intelligence model” on your phone that “draws on personal context.” So what if it doesn’t generate the most photorealistic images on command? The implication is that since Apple understands you better than any other AI platform can, it will outperform other platforms in the long run.

Apple really only offered one example of what it meant by this, and it was as underwhelming as it was revealing. Kelsey Peterson, director of machine learning and AI at Apple, walked through a demo in the prerecorded keynote.

Peterson asked, “Siri, when is my mom’s flight landing?” and Siri cross-referenced flight details found in email, then tracked the flight online. When she asked, “What’s our lunch plan?” Siri found the spot her mom had sent in a text. And when she finished the conversation with, “How long will it take us to get there from the airport?” a map popped up with traffic data.

Make no mistake: There’s nothing all that impressive happening here when it comes to LLM technology. These are straightforward questions asked with very specific language that have perfectly clear answers. (Apple did nothing to synthesize or suggest anything special from this query—like “remember to buy your mom lilies, her favorite, but don’t go to Flower Mart because they were wilted last time.”) However, what is impressive is Apple’s reach.

Apple can effortlessly sift through your email and Messages (and, eventually, various other apps via specialized APIs that Apple announced) to find the answer that it needs. The company has access to an incredible amount of information on you; when handed over to an LLM, it can jog your memory almost instantly about anything.

A MASSIVE DATA SET

This reach is Apple’s advantage in the great AI race, in the United States at least. Microsoft recently demonstrated that it could record every moment of your computer screen to serve as an AI memory, and while that (extremely creepy) idea could be powerful for work contexts, perhaps, it still doesn’t reach the most intimate computer in the world: the phone in your pocket. Apple’s moat around Messages is particularly powerful in the U.S. Apple owns nearly 60% of the U.S. smartphone market, and iPhone users vastly prefer their blue bubble. 85% of teens in the U.S. are on Messages today. And texting has been the preferred method of communication since 2014.

Apple doesn’t need to have the best LLM to own AI. It just needs to own the thing AI references. And your years of personal message history are an incredible repository of information.

Apple is so close to its users that it presented AI as a feature without even sweating over whether we would consent. And to be fair, no one seems to be asking, How the heck can these weird, unknown AI systems be analyzing my private texts?!? Instead, we’re blindly confident that Apple will use our info for our benefit. Whereas every Apple competitor would need to beg for this data, Apple simply gets the benefit of the doubt.

ACCESS IS POWER

As long as Apple commands this channel to your data (let’s ignore allegations of anti-competitive practices for a moment), it also insulates itself from relying on any particular AI provider all that much. AI assistants will often juggle several models at the same time, basically asking questions to different AI systems in tandem to get you an answer, or pointing simpler questions to AIs that are cheaper to query because they require less processing power.

For now, Apple has inked a partnership with OpenAI to use ChatGPT for at least some of its intelligence offerings. But longer term? There’s nothing to say that Apple couldn’t get its own models running, or license LLM power from competitors instead, to offer its “Apple Intelligence” (the company’s cheeky rebrand for AI) without anyone caring what’s actually running under the hood.

Who can challenge Apple in this space? Any promise of dedicated hardware has flopped this year, as Humane and Rabbit have both shipped disappointing products. The Samsung Galaxy is an interesting counterpoint to consider with the right partnerships; Google has already teased how its Bard AI could work within Google Messages (used on Samsung phones). But Samsung won’t ever see inside popular third-party messaging platforms like WhatsApp, which feature protected messages.

So long as Apple can own the phone—and particularly our phone connected to its proprietary text-messaging service—it holds the real estate of modern AI. It will see a slightly different, and perhaps deeper, part of our social life than any other product or platform.

And if Apple can’t figure out something more powerful and essential to do with all that than pulling up some flight information, well, then maybe Apple Intelligence isn’t quite as intelligent as the company would lead you to believe.