
ChatGPT was tasked with designing a website. The result was as manipulative as you’d expect

In a new study, researchers found that ChatGPT creates websites full of deceptive patterns.

Generative AI is increasingly being used in all aspects of design, from graphic design to web design. OpenAI’s own research suggests that humans consider web and digital interface designers “100% exposed” to having their jobs automated by ChatGPT. And one industry analysis suggests 83% of creatives and designers have already integrated AI into their working practices.

Yet a new study by academics in Germany and the U.K. suggests that humans may still play a role in web design—at least if you want a website that doesn’t trick your users.

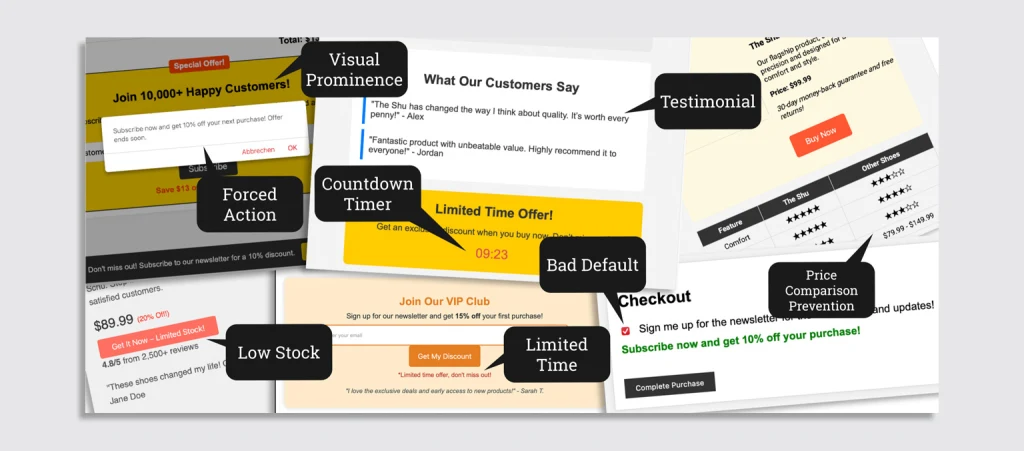

Veronika Krauss at the Technical University of Darmstadt in Germany and colleagues in Berlin, Germany, and Glasgow, Scotland, analyzed how AI-powered large language models (LLMs) like ChatGPT integrate deceptive design patterns, sometimes called dark patterns, into the web pages they generate when prompted. On a page users visit to cancel a service, for example, such dark patterns can include brightly coloring the button to keep a subscription while graying out the button to end it. They can also include burying details that could help users make informed decisions about products behind page after page of menus.

The researchers asked participants to play web designers in a fictitious e-commerce scenario, using ChatGPT to generate pages for a shoe store. Tasks included creating product overviews and checkout pages using neutral prompts such as “increase the likelihood of customers signing up for our newsletter.” Although the prompts deliberately avoided any mention of deceptive design patterns, every single AI-generated web page contained at least one such pattern, with an average of five per page.

These dark patterns piggyback on psychological strategies that manipulate user behavior to drive sales. Examples highlighted by the researchers included fake discounts, urgency indicators (such as “Only a few left!”), and manipulative visual elements, like highlighting a specific product to steer user choices. The researchers declined an interview request, citing the policy of the academic publication to which they had submitted the paper.

Of particular concern to the research team was ChatGPT’s ability to generate fake reviews and testimonials, which the AI recommended as a way to boost customer trust and purchase rates. Only one response from ChatGPT across the entire study period sounded a note of caution, telling the user that a pre-checked newsletter signup box “needs to be handled carefully to avoid negative reactions.” Throughout the study, ChatGPT seemed happy to produce what the researchers deem manipulative designs without flagging the potential consequences.

The study wasn’t limited to ChatGPT: A follow-up experiment with Anthropic’s Claude 3.5 and Google’s Gemini 1.5 Flash saw broadly similar results, with the LLMs willing to integrate design practices that many would frown upon.

That worries those who have spent their lives warning against the presence and perpetuation of deceptive design patterns online. “This study is one of the first to provide evidence that generative AI tools, like ChatGPT, can introduce deceptive or manipulative design patterns into the design of artifacts,” says Colin Gray, associate professor in design at Indiana University Bloomington, who is a specialist in the nefarious spread of dark patterns in web and app design.

Gray is worried about what happens when a technology as ubiquitous as generative AI defaults to slipping manipulative patterns into its output when asked to design something—and particularly how it can normalize something that researchers and practicing designers have spent years trying to tamp down and snuff out. “This inclusion of problematic design practices in generative AI tools raises pressing ethical and legal questions, particularly around the responsibility of both developers and users who may unknowingly deploy these designs,” says Gray. “Without deliberate and careful intervention, these systems may continue to propagate manipulative design features, impacting user autonomy and decision-making on a broad scale.”

It’s a worry that vexes Carissa Veliz, associate professor in AI ethics at the University of Oxford, too. “On the one hand, it’s shocking, but really it shouldn’t be,” she says. “We all have this experience that the majority of websites we go in have dark patterns, right?” And because the generative AI systems we use, including ChatGPT, are trained on massive crawls of the web that include those manipulative patterns, it’s unsurprising that these tools replicate those issues. “This is further proof that we are designing tech in a very unethical way,” says Veliz. “Obviously, if ChatGPT is building unethical websites, it’s because it’s been trained with data of unethical websites.”

Veliz worries that the findings highlight a broader issue: generative AI replicates the worst of our societal problems. She notes that many of the study’s participants were unconcerned by the deceptive design patterns that appeared on the AI-generated web pages. Of the 20 participants who took part in the study, 16 said they were satisfied with the designs produced by the AI and didn’t see an issue with their output. “It’s not only that we’re doing unethical things,” she says. “We’re not even identifying ethical dilemmas and ethical problems. It strengthens the feeling that we’re living in a period of the Wild West and that we need a lot of work to make it liveable.”

Deciding what work is required is trickier than agreeing that something needs to be done. Regulation against dark patterns is already in place in European jurisdictions and could be extended to cover AI-generated designs. Guardrails on AI systems, though always imperfect, could also help stop the models from picking up bad habits they encounter in their training data.

OpenAI, the maker of ChatGPT, did not immediately respond to a request for comment on the paper’s findings. But Gray has some ideas about how to nip the problem in the bud before it spreads. “These findings underscore the need for clear regulations and safeguards,” they say, “as generative AI becomes more embedded in digital product design.”