
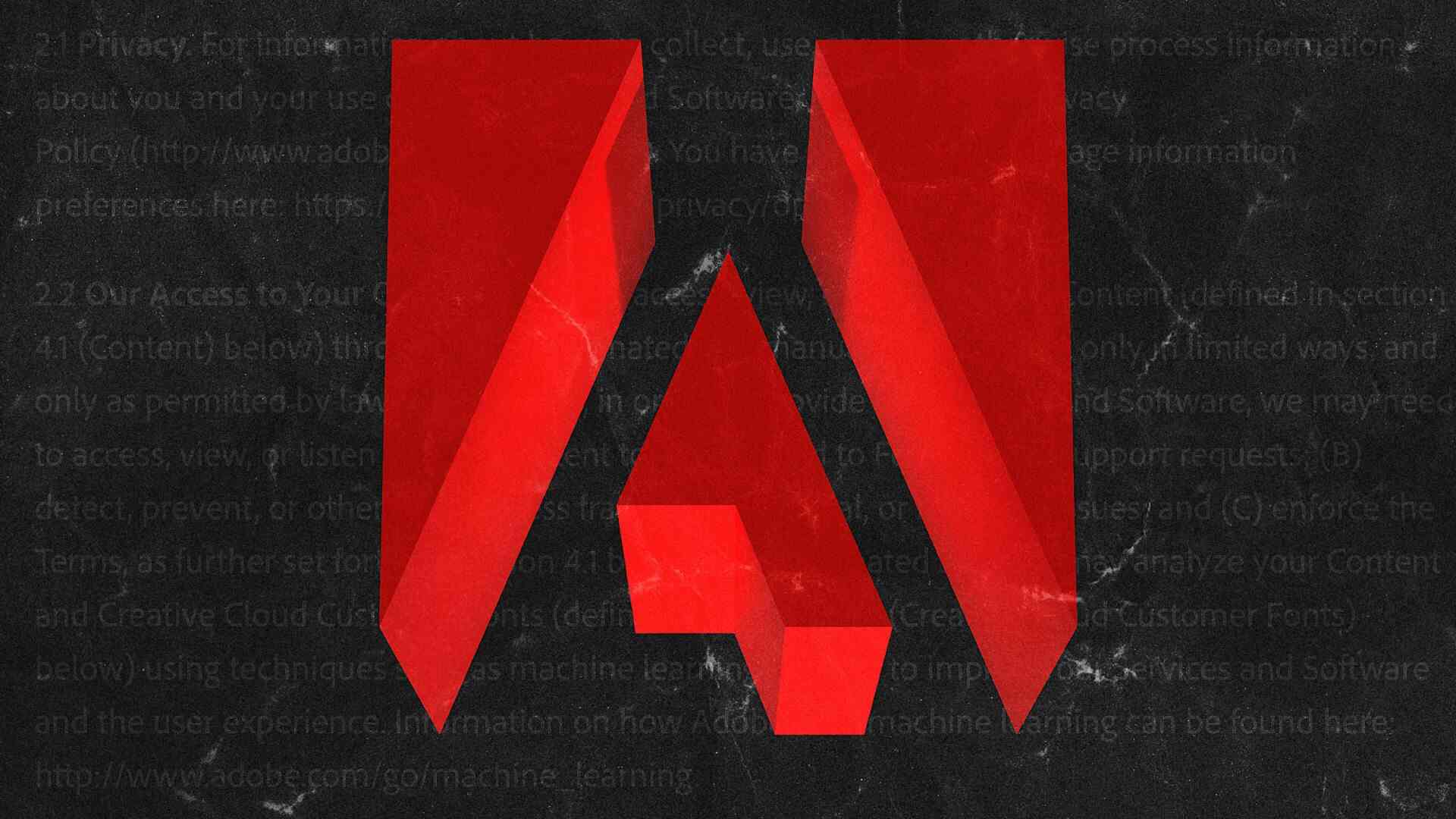

Creatives are right to be fed up with Adobe and every other tech company right now

The generative AI boom has eroded trust between creatives and Silicon Valley. Adobe’s latest misstep adds more fuel to the fire.

Adobe is in hot water once again. Yesterday, a dialog box popped up on the screens of users all around the world, informing them of updated my-way-or-the-highway terms of use that couldn’t be appealed or disagreed with if they wanted to keep using Photoshop or Adobe’s Creative Cloud suite of tools. Key to this dialog was the phrase: “Clarified that we may access your content through both automated and manual methods, such as for content review.”

The user who posted the screenshot on X dug into the updated TOS and was outraged: “This is pure offensive insanity. Adobe is claiming royalty-free rights to copy and make new content out of customers’ assets. Adobe is claiming that they can then sub-license customer assets to other companies. They describe a ‘reasonable’ use-case, but describe no limitations.”

Not long after, anger from Adobe users erupted across the internet with the force of a supernova.

The shockwave later reached Reddit, where people wondered why Adobe wanted to spy on their work. Others wondered about how this applied to people working on NDA material, from film crews to lawyers to doctors.

In a blog post the company published last night, Adobe denies it is spying on users in categorical terms. Earlier in the day, Scott Belsky—VP of Products, Mobile and Community at Adobe and cocreator of the creative portfolio platform Behance—tried to do some damage control, replying to the onslaught of outrage from Adobe users.

Belsky quickly clarified that Adobe would not use user-generated content to train its Firefly AI model. He also claimed that most technology companies that operate cloud-based software require some form of the language Adobe used in its updated TOS. If you want to share your work with a preview link, if you want to edit files in the cloud, if you want to access Adobe’s cloud-based features, for example . . . well, digital services don’t come free. There’s a cost to doing business, and “limited” access, Belsky says, is just part of the fee.

Still, Belsky admitted that the language was unfortunate. Adobe only allows you to opt out of some content analysis that includes machine learning “to develop and improve our products and services.”

TIRED OF BEING JERKED AROUND

Despite assurances, it’s still unclear what the TOS will mean for users in the long run, and those users aren’t pleased. As one Fast Company photo editor said in Slack when the news broke: “I think Adobe slipped this in like a raptor testing the fences. And they got shocked… this time.”

That seems to be the pervasive sentiment coming from the creative community right now. Trust is broken. Creatives are fed up. They’re fed up with tech companies jerking them around. Fed up with broken promises. Fed up with updated TOS.

And Adobe is only the most recent example. OpenAI has been sued for scraping content from others. Midjourney and Stability, too. Shutterstock licensed users’ material to OpenAI for DALL-E training using a clause they buried in updated TOS. And Meta just recently decided to screw everyone over by unilaterally declaring it was going to use your images and videos to train its generative AI (unless you lived in the EU, in which case you could opt out via a purposefully deceptive form).

Who could blame people for not trusting Adobe’s intentions? As an Adobe user, I share the feeling 100%. Despite the company’s claims that Firefly is the responsible AI in the room, the model was still partially trained on Midjourney AI images and can create the same racist garbage that other generative AIs do.

I reached out to Adobe with some questions that required very straightforward answers: “Why have you changed the terms now? What was exactly the reason for this change? Why use this equivocal language to begin with if you claim you didn’t really mean what people understood according to their official response?”

Adobe replied with this, the same legalese from their official blog post:

“Access is needed for Adobe applications and services to perform the functions they are designed and used for (such as opening and editing files for the user or creating thumbnails or a preview for sharing). Access is needed to deliver some of our most innovative cloud-based features such as Photoshop Neural Filters, Liquid Mode or Remove Background. You can read more information, including how users can control how their content may be used. For content processed or stored on Adobe servers, Adobe may use technologies and other processes, including escalation for manual (human) review, to screen for certain types of illegal content (such as child sexual abuse material), or other abusive content or behavior (for example, patterns of activity that indicate spam or phishing).”

There was one last question in my email: “Can Adobe assure its customers that it will never ever change terms like Instagram/Meta did to train your AI?”

There was no answer to that one.

You can draw your own conclusions, but it’s time for tech companies to stop screwing around for their own benefit, listen to the users who pay them, and act in a transparent way. Their time is up.