This simple icon makes it easy to spot AI-generated content

The majority of Americans don’t trust AI. These three design concepts from Artefact could help reduce anxiety and engender agency in the AI age.

In the six months since OpenAI let ChatGPT loose onto the world, “the discourse” around generative AI has become omnipresent—and borderline incoherent. Forget whether artificial intelligence will remake the economy or kill us all; no one can agree on what it even is: an alien intelligence, a calculator, that Plinko game from The Price Is Right? But while experts joust over metaphors, three-quarters of Americans are fretting over something much more practical: Can we trust AI?

For Artefact, a Seattle-based studio founded to “define the future through responsible design,” this is the question that actually matters. “Am I understanding what’s actually in front of me? Is it a real person, is it a machine?” says Matthew Jordan, Artefact’s chief creative officer.

Visual stunts like the swagged-out Pope have already introduced a new vernacular of images “cocreated” with AI. But what’s funny in a viral meme seems less so in political attack ads. If the hypesters are right, and AI is coming for every kind of information we encounter—from our work emails and Slack messages to the ads we see on our commutes and the entertainment we consume when we get home—how important is it that we know exactly how much of that information was created with AI? And how can designers make it easier for us to trust the information we’re getting?

Artefact believes that building trust around generative AI boils down to three principles: transparency, integrity, and agency. The first two—showing who you are (transparency) and meaning what you say (integrity)—are familiar enough concepts from human relationships and institutions. But agency is important precisely because AI isn’t human.

Even though generative AI (especially chatbots powered by the latest large language models) can convincingly mimic some of the psychological cues that human beings consider trustworthy, that same mimicry can quickly become unsettling if there’s no way to predict or steer the system’s behavior. Agency is all about making the opportunities to steer the system clear to users. “They want to maintain control over their experience,” says Maximillian West, Artefact’s design director.

At Co.Design’s request, Artefact translated these principles into three scenarios that envision what more approachable encounters with AI could look like. “We didn’t want to make the judgment that AI[-generated] content is bad,” West says. “We were interested in the idea of giving users more affordances to have that sense of control, because that feeling of trust is not being garnered in the same way it is with a human.”

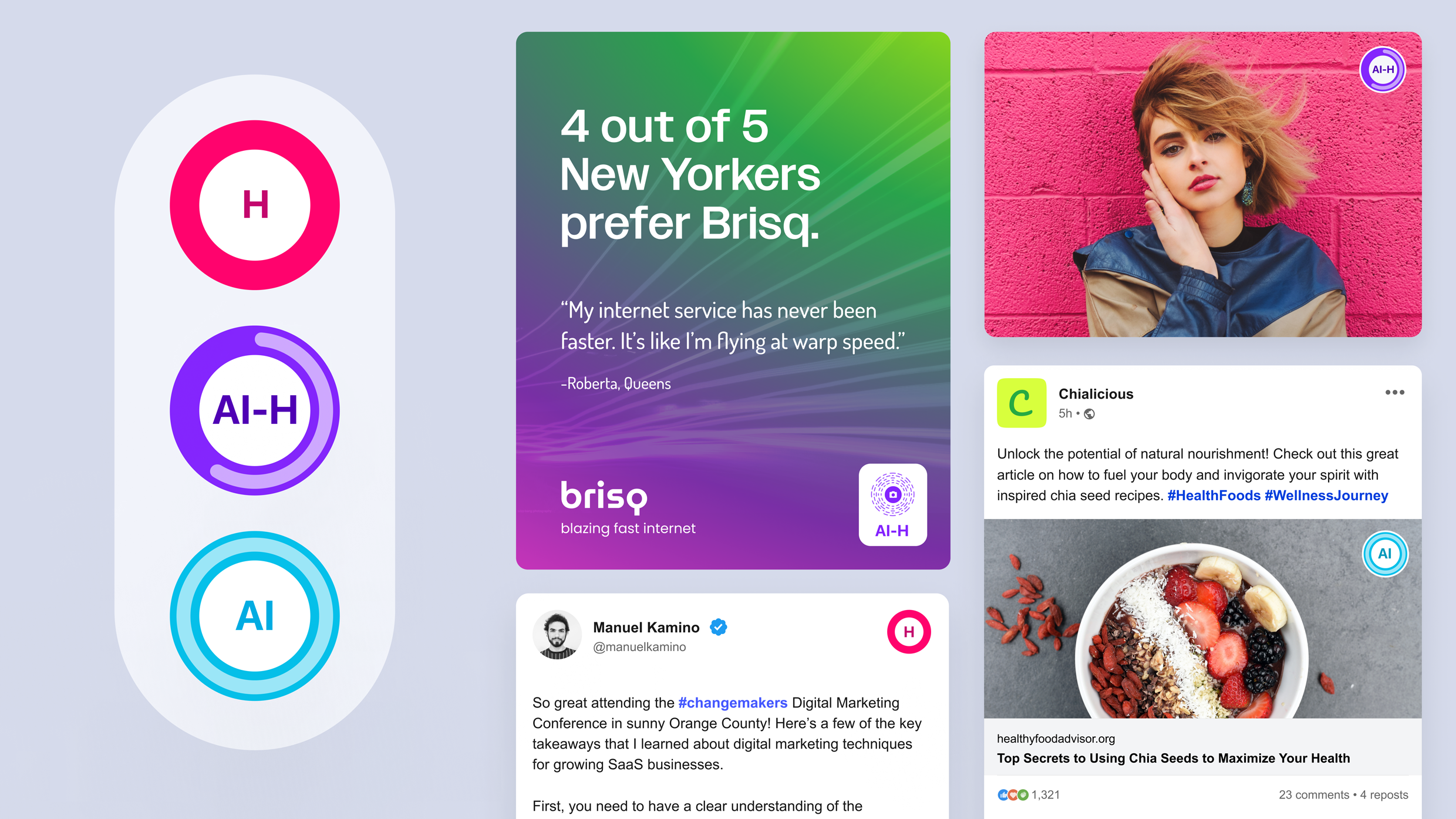

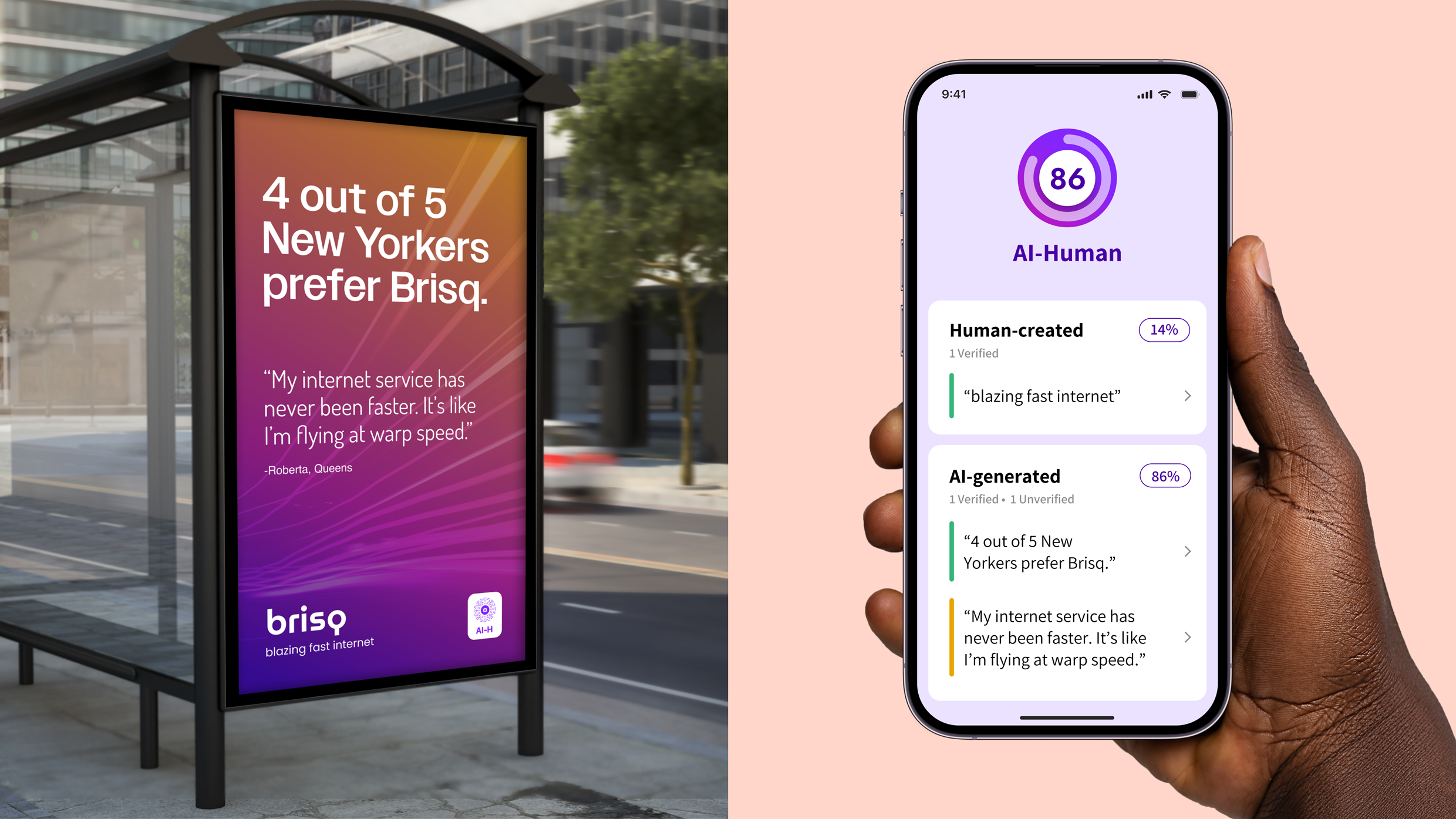

The first step toward trusting generative AI is knowing when it’s there. Inspired by TV content ratings and nutrition labels, Artefact designed a standardized badge system designating three no-nonsense categories: H (100% human generated), AI (100% machine generated), and AI-H (contains both human- and machine-generated content). A ring inside the AI-H badge visually depicts the ratio of human- versus machine-made content, while a soothing blue hue—“a color of trust,” according to West—takes over the badge as it gets closer to 100% AI.

The circular shape was chosen to seem authoritative but not pushy. “It doesn’t have to feel as forceful as a big square. It’s like a little tool tip in the corner that expands on the details of that content,” West explains. On a news article or social media feed, clicking or tapping the badge would reveal more information; in physical media like outdoor advertisements, the badge could be scannable like a QR code.

“Providing immediate transparency about generative content in a familiar and accessible way—that’s this simple grading system,” West says. “It’s really driven by this principle of ‘inform at a glance’ first, and then allowing the user to dig in progressively to see those details.”
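
To make the three categories concrete, here is a minimal Python sketch of how a badge could be chosen from the share of machine-generated content. The `Badge` class, the `badge_for` function, and the idea of expressing that share as a single fraction are assumptions for illustration; Artefact's concept does not say how the ratio would be measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Badge:
    label: str            # "H", "AI", or "AI-H"
    machine_share: float  # assumed fraction of machine-generated content, 0.0 to 1.0


def badge_for(machine_share: float) -> Badge:
    """Map a machine-generated share to one of the three badge categories."""
    if not 0.0 <= machine_share <= 1.0:
        raise ValueError("machine_share must be between 0.0 and 1.0")
    if machine_share == 0.0:
        label = "H"      # 100% human generated
    elif machine_share == 1.0:
        label = "AI"     # 100% machine generated
    else:
        label = "AI-H"   # mixed; the ring would depict the ratio
    return Badge(label, machine_share)


for share in (0.0, 0.35, 1.0):
    badge = badge_for(share)
    print(f"{badge.label}: {badge.machine_share:.0%} machine generated")
```

Anything strictly between 0% and 100% lands in AI-H, which is where the ring inside the badge would carry the extra detail.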

Detecting the mere presence of AI is one thing. But given the technology’s propensity for fabricating falsehoods—“hallucinating,” in the industry’s cutesy parlance—how should the content itself be judged? It’s a question with serious stakes, especially for users seeking medical or financial advice from companies increasingly eager to AI-ify their services.

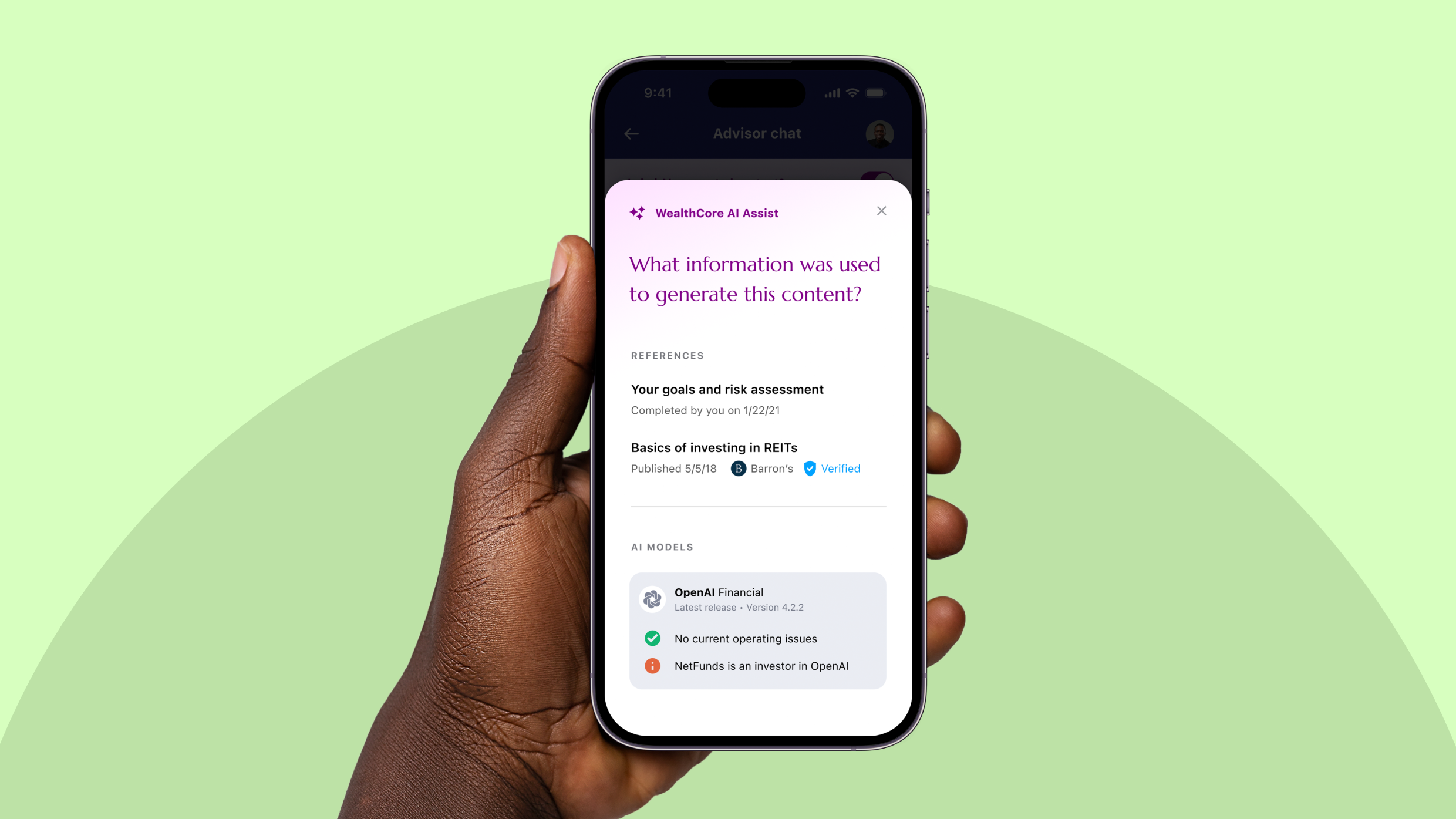

Artefact imagined a personal-finance app in which chats with a human adviser are commingled with AI-generated recommendations. “My assumption is that everything that I hear is actually coming from my adviser’s mouth, but the adviser might be using this generative tool as part of a back-end process,” West explains.

Extending the AI badges’ theme of progressive disclosure, Artefact designed a nested series of prompts that invite users to pop the hood of the app’s AI. A toggle at the top of the screen highlights machine-generated text, separating it from the human commentary. From there, a user can drill down into the provenance and processes behind the AI content: Verified information sources, language-model specifications, and even potential conflicts of interest are neatly laid out.

“We’re advocating [for] understanding how the sausage was made,” West says. “If industry is going to integrate these tools in backstage processes that are not visible to the end user, we want to balance the scales a bit more.”
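
One way to picture the toggle and the drill-down is as data attached to each piece of text in the chat. The Python sketch below is hypothetical: the `Provenance` and `Segment` classes, their field names, the sample values, and the bracket-style highlighting are illustrative assumptions, not part of Artefact's design or any real app.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Provenance:
    """Hypothetical record behind one piece of machine-generated text."""
    sources: List[str]  # information sources the output drew on
    model: str          # language-model specification
    conflicts_of_interest: List[str] = field(default_factory=list)


@dataclass
class Segment:
    text: str
    provenance: Optional[Provenance] = None  # None means a human wrote it


def render(segments: List[Segment], highlight_ai: bool) -> str:
    """Join segments, marking machine-generated ones when the toggle is on."""
    parts = []
    for seg in segments:
        if highlight_ai and seg.provenance is not None:
            parts.append(f"[AI] {seg.text} [/AI]")
        else:
            parts.append(seg.text)
    return " ".join(parts)


chat = [
    Segment("Thanks for sending your goals."),
    Segment(
        "Consider moving 10% into an index fund.",
        Provenance(
            sources=["example fund fact sheet"],  # placeholder source
            model="example-model-v1",             # placeholder model name
            conflicts_of_interest=["firm earns fees on this fund"],
        ),
    ),
]

print(render(chat, highlight_ai=False))
print(render(chat, highlight_ai=True))
```

With the toggle off, the two messages read as one voice; with it on, the machine-generated recommendation is set apart, and its `provenance` record holds what a user would see after drilling down.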

Two things about digital life stress people out: There’s too much information, and too much of it is bullshit. AI amplifies both. But what if there were a noise-canceling filter for machine-generated content?

People already expect this level of control over other sorts of digital noise, like mobile notifications. Artefact envisioned an interface much like the Settings pane in iOS, but for app-specific AI preferences. Like seeing whacked-out Midjourney art in your social feed but don’t want to let fake news photos into your work Slack? No sweat: Just flip a few toggles.

“Every time I interact with content [that may be machine-generated], I don’t want to have to be clicking different buttons in that context,” West says. “We think that industry could potentially explore how to control that at the OS level.”
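
As a rough illustration of what such toggles might control, the Python sketch below filters incoming items by badge category using per-app settings. The app names, the `Item` type, the `visible` function, and the default of showing everything for apps with no settings are all assumptions made for this example.

```python
from typing import Dict, List, NamedTuple, Set

ALL_BADGES = {"H", "AI-H", "AI"}


class Item(NamedTuple):
    app: str    # which app the content arrives in
    badge: str  # "H", "AI-H", or "AI"
    title: str


# Hypothetical per-app toggles: the badge categories the user allows.
preferences: Dict[str, Set[str]] = {
    "social_feed": {"H", "AI-H", "AI"},  # generated art is welcome here
    "work_chat": {"H"},                  # human-made content only
}


def visible(items: List[Item], prefs: Dict[str, Set[str]]) -> List[Item]:
    """Keep items whose badge is allowed for their app (assumed default: allow all)."""
    return [item for item in items if item.badge in prefs.get(item.app, ALL_BADGES)]


incoming = [
    Item("social_feed", "AI", "surreal generated artwork"),
    Item("work_chat", "AI", "generated news photo"),
    Item("work_chat", "H", "photo from a coworker"),
]

for item in visible(incoming, preferences):
    print(f"{item.app}: {item.title}")
```

Keeping the settings in one table, rather than inside each app, mirrors the OS-level control West describes: the choice is made once and applied wherever content shows up.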

Combining these design patterns could make our encounters with generative AI feel utterly mundane—which is exactly how they should feel when trust is established. Artefact imagined this Slack interaction, in which a coworker unintentionally shares a questionable photo:

The AI-H badge labels it at a glance (transparency); an “About this image” button provides a way to dig deeper (integrity); finally, app- and OS-level filters parse the image’s metadata to generate a “provenance tree” that lets the user judge the content for herself (agency). No fuss, no muss.

Of course, none of these designs have a chance of being implemented without the necessary technical and regulatory infrastructure in place. If there’s no Food and Drug Administration, Federal Communications Commission, or even Motion Picture Association of America for generative AI, the badges have no authority. If businesses see no upside in creating trackable metadata for their AI-generated content, “learn more” provenance drilldowns can’t happen. And without both of these elements, OS-level preference panes will have nothing to control.

But that’s why Artefact calls these designs “provocations.”

As Jordan puts it: “I think of it as not quite a wake-up call, but just a very clear, tangible, actionable thing. So instead of talking about ‘trust’ more abstractly or idealistically, it’s actually making a thing that organizations, businesses, and maybe the government can start to look at. Up the ladder is where the change happens. But if we can really nail what it looks like as an experience that they, as people, are familiar with—that’s where it starts.”