
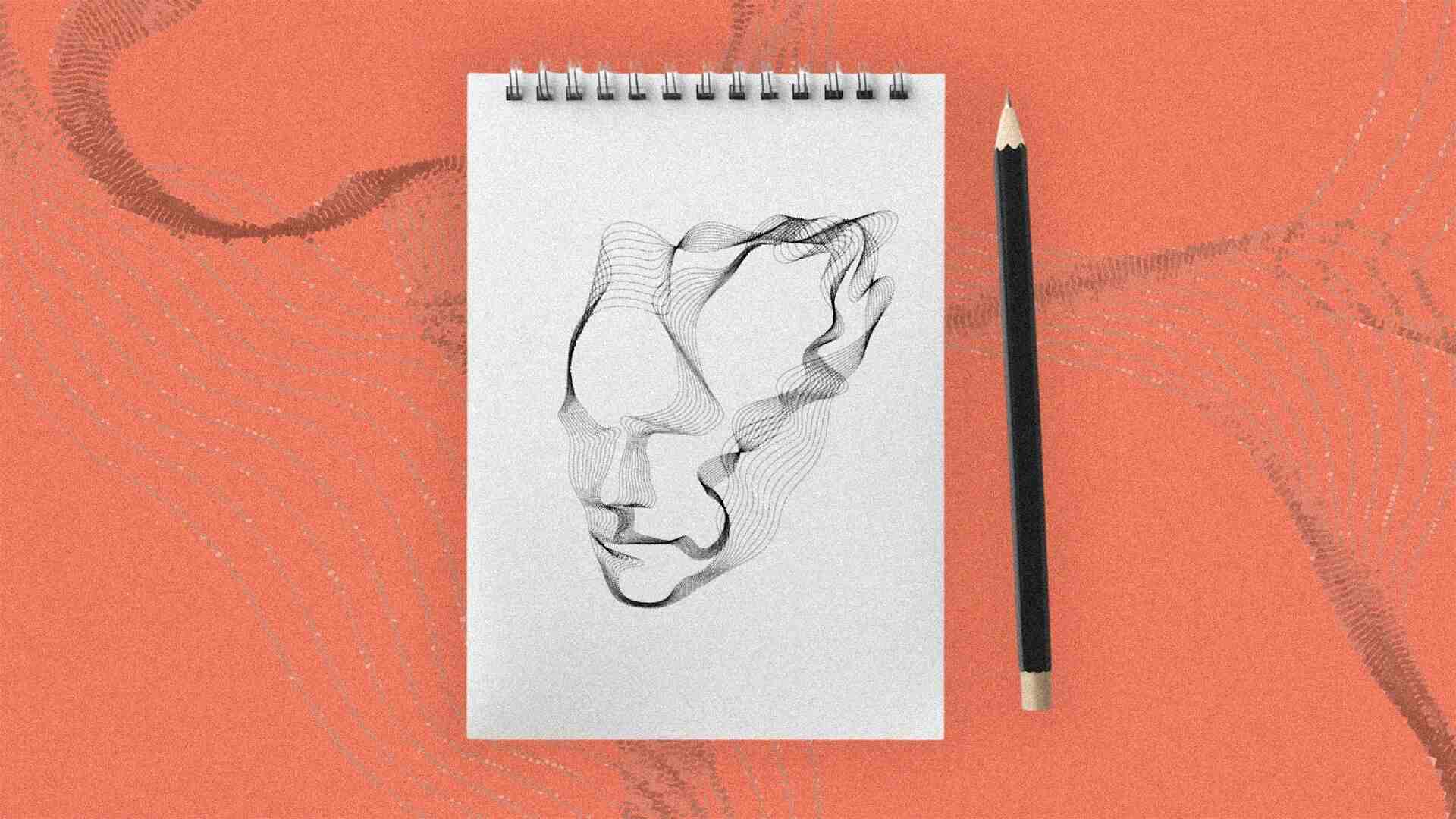

3 factors that must be considered to build AI we can trust

Trust is key to any effective technology implementation. If people don’t trust it, they’re not going to use it. This challenge comes up again and again, especially with AI. We have mountains of data to train systems on, but volume alone is not enough: a system that users trust demands thoughtful use of that data to produce meaningful results and, therefore, trustworthy AI.

An example of how this shows up in the real world is the case of seatbelts. Seatbelts are safer for men than women. Why? Data bias. When seatbelts were initially designed and safety tested, the test dummies were modeled on the average body dimensions of men who served in the U.S. Army in the 1960s. Could this existing data be used to create AI that predicts injury risks as accurately for women as for men? Unlikely. But does that mean such an AI can’t be trusted at all?

All systems have weaknesses. AI struggles more than traditional software because its performance depends on data quality, the properties of the population the data was drawn from, and the ability of a machine-learning algorithm to learn a function. You need to study how those factors affect the problem you are solving so that you can build in failsafe mechanisms that warn users when the AI becomes unreliable.

In the seatbelt example, this means acknowledging that because of the original data set that was used, today’s seatbelts are safer for men than women. That is no reason to stop using them altogether. It means better data is needed to make them safer for everyone.
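One simple form of the failsafe mechanism described above is a check that warns when an input falls outside the range the system was trained on. The sketch below is only an illustration: `TrainingRange`, `predict_with_warning`, `toy_risk_model`, and the height values are all hypothetical and do not come from any real seatbelt data set.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class TrainingRange:
    """Smallest and largest value of one feature seen during training."""
    low: float
    high: float

    @classmethod
    def from_samples(cls, samples: Sequence[float]) -> "TrainingRange":
        return cls(low=min(samples), high=max(samples))

    def covers(self, value: float) -> bool:
        return self.low <= value <= self.high


def predict_with_warning(
    model: Callable[[float], float],
    value: float,
    training_range: TrainingRange,
) -> Tuple[float, bool]:
    """Return the prediction plus a flag saying whether the input was in range."""
    return model(value), training_range.covers(value)


# Hypothetical training population (body heights in cm), for illustration only.
training_heights_cm = [168.0, 172.0, 175.0, 180.0, 185.0]
height_range = TrainingRange.from_samples(training_heights_cm)


def toy_risk_model(height_cm: float) -> float:
    """Placeholder for a trained injury-risk model; always returns 0.1."""
    return 0.1


for height in (178.0, 158.0):
    risk, reliable = predict_with_warning(toy_risk_model, height, height_range)
    if not reliable:
        print(f"Warning: {height} cm is outside the training range; "
              "treat this prediction with caution.")
```

A range check like this does not make the model more accurate. It only tells the user when the model is being asked about a case it has never seen.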

Now let’s compare this example with a multibillion-dollar chip factory. The world is currently faced with a massive chip shortage, so it’s imperative that systems run as efficiently as possible. When the AI-powered manufacturing system correctly interprets data, it maximizes production yield and throughput, getting chips where they’re needed, when they’re needed. Failing to interpret the data correctly can result in costly delays.

The need for meaningful AI becomes even more important as we zoom in. Suppose you supply equipment to a chipmaker. To minimize repair time, you want to predict as early as possible when equipment parts will fail. If you can predict a failure early, you can schedule the swap during a periodic maintenance slot. If you can’t, the failure could cause an unscheduled equipment outage, which could cost millions per day.

To prevent those issues, you build a deep learning model to classify defects based on part pictures. But because you don’t have enough pictures of part failures, you use data augmentation techniques like rotation and flipping. This provides more data, which could mean better accuracy, but not necessarily a trusted result. Yet.

To create truly meaningful AI, you need more than just high data volumes. An expert must verify that the data is meaningful in the real world. In this case, creating more data from flipped and rotated images is not meaningful because the augmented pictures don’t reflect real-life failure points on the equipment. The AI could still be especially useful for locating suspicious areas and isolating patterns, but not for making production decisions.
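To make the augmentation problem concrete, here is a minimal sketch using NumPy. The 3x3 "part picture" and its single defect pixel are hypothetical stand-ins for real images. The point is only to show how flipping and rotating move a defect to positions where a real part might never fail.

```python
import numpy as np


def augment(image: np.ndarray) -> list:
    """Return the original image plus flipped and rotated copies."""
    return [
        image,
        np.fliplr(image),      # mirror left to right
        np.flipud(image),      # mirror top to bottom
        np.rot90(image, k=1),  # rotate 90 degrees counterclockwise
        np.rot90(image, k=2),  # rotate 180 degrees
        np.rot90(image, k=3),  # rotate 270 degrees counterclockwise
    ]


# Hypothetical part picture: one bright defect pixel in the top-left corner.
part = np.zeros((3, 3), dtype=np.uint8)
part[0, 0] = 255

variants = augment(part)

# (row, column) of the defect pixel in each variant.
defect_positions = [
    tuple(int(i) for i in np.argwhere(v == 255)[0]) for v in variants
]
print(defect_positions)
```

One real defect in the top-left corner becomes training examples with defects in all four corners. Whether those extra positions correspond to plausible failure points is exactly the question a domain expert has to answer.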

3 CRITICAL AREAS THAT IMPACT AI

Ultimately, the best way to understand where the weaknesses lie in your data set is to test it, test it again, and keep testing it. AI requires many iterations and confrontations with the real world. Each confrontation can expose different flaws and allow the AI to interact with scenarios that can’t be predicted.

When you first put an AI solution to market, the key to making sure that it is trustworthy is to look critically at three areas.

Remove bias in your data population: When your technology is making decisions for humans, it’s critical that the AI is evaluating each case objectively. While companies are becoming more aware of the racial and gender bias that can be unintentionally built into algorithms, there are some forms of bias that may be more difficult to track. In particular, analysts need to continuously evaluate results for things like income and education bias.
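One way analysts might start such an evaluation is to compare accuracy across groups. The sketch below assumes each record carries a group label, a prediction, and the true outcome. The group names and records are made up for illustration, and accuracy is only one of several metrics that could be compared this way.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        hits[group] += int(predicted == actual)
    return {group: hits[group] / totals[group] for group in totals}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation records, for illustration only.
records = [
    ("low_income", 1, 1), ("low_income", 0, 1),
    ("low_income", 0, 0), ("low_income", 1, 0),
    ("high_income", 1, 1), ("high_income", 0, 0),
    ("high_income", 1, 1), ("high_income", 0, 1),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap does not prove the model is biased, but it is a signal to look more closely at how each group is represented in the training data.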

Control for drift: Particularly for predictive maintenance technology, drift can be a major factor influencing your results. Analysts need to pay close attention to data sets, not just in the first few weeks or months of execution, but over the first few years to ensure that systems are truly spotting abnormalities and not getting clouded by benign deviations in the data. For example, the AI should be spotting signs of unexpected equipment failure, not just normal wear and tear.
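A basic drift check compares a recent window of sensor readings with a baseline period. The sketch below uses a z-score on the window mean. The readings, the function names, and the threshold of 3.0 are all assumptions chosen for illustration, not a recommended configuration.

```python
import math
import statistics


def drift_score(baseline, recent):
    """Z-score of the recent window's mean relative to the baseline."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    standard_error = sigma / math.sqrt(len(recent))
    return (statistics.mean(recent) - mu) / standard_error


def has_drifted(baseline, recent, threshold=3.0):
    """Flag the window when its mean is far from the baseline mean."""
    return abs(drift_score(baseline, recent)) > threshold


# Hypothetical sensor readings, for illustration only.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]
stable_window = [10.1, 9.9, 10.0, 10.2]
shifted_window = [10.6, 10.8, 10.7, 10.9]

print(has_drifted(baseline, stable_window))
print(has_drifted(baseline, shifted_window))
```

A flag from a check like this only says the data has moved. Deciding whether the shift is normal wear and tear or a sign of unexpected failure still takes an analyst who knows the equipment.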

Check explainability: Humans have a hard time trusting what they can’t explain. Trustworthy AI has to be able to provide an explanation for every step in its decision-making process. There are many existing frameworks for this, and they should be included as standard in all solutions. However, it’s also critical to keep humans in the loop to check the AI’s work. If an explanation doesn’t make sense or feels biased, it’s a sign to take a deeper look at the data going in.

AI is only as trustworthy as the data you train it with. The more accurately that data represents the real world, the more trustworthy the AI will be. But mathematically capturing the world isn’t enough. You would still be missing basic human judgment and common sense. Explainability is the biggest challenge experts need to solve in the coming years, and it is key to helping humans trust and adopt AI.

Arnaud Hubaux PhD is a product cluster manager at ASML.

Ingelou Stol is a former journalist and works for High Tech Campus Eindhoven, including the AI Innovation Center, a European knowledge platform for AI developments, founded by ASML, Philips, NXP, Signify and High Tech Campus. Stol is also the founder of Fe+male Tech Heroes.