
AI is changing recruitment in the Middle East. But can bias be eliminated?

In a region that has a multicultural workforce, experts discuss if AI technology reduces bias in the hiring process or adds to it

Have you noticed something baffling in the Middle East job scene? There are record-level job openings, but many still apply for dozens of jobs, even in sought-after fields like software development and digital marketing, and get no interview call. At the same time, companies complain they can’t find the right talent.

Job seekers with the necessary experience worry they’re being unfairly weeded out.

“This was the first time in my life where I was sending out resumes, and there were no calls for an interview,” said Sofia Ahmed, 45, a software developer in Dubai, who sent out her resume more than 50 times for jobs she said she was qualified for. No job offer materialized. Now, she wonders if she was being automatically excluded in some way.

Are AI-powered tools to be blamed?

“Algorithmic tools are just that, tools. We need the human element to assess an individual’s corporate culture beyond what an algorithm can screen for at scale,” says Nancy Gleason, Associate Professor of Practice of Political Science and Director of the Hilary Ballon Center for Teaching and Learning at NYUAD.

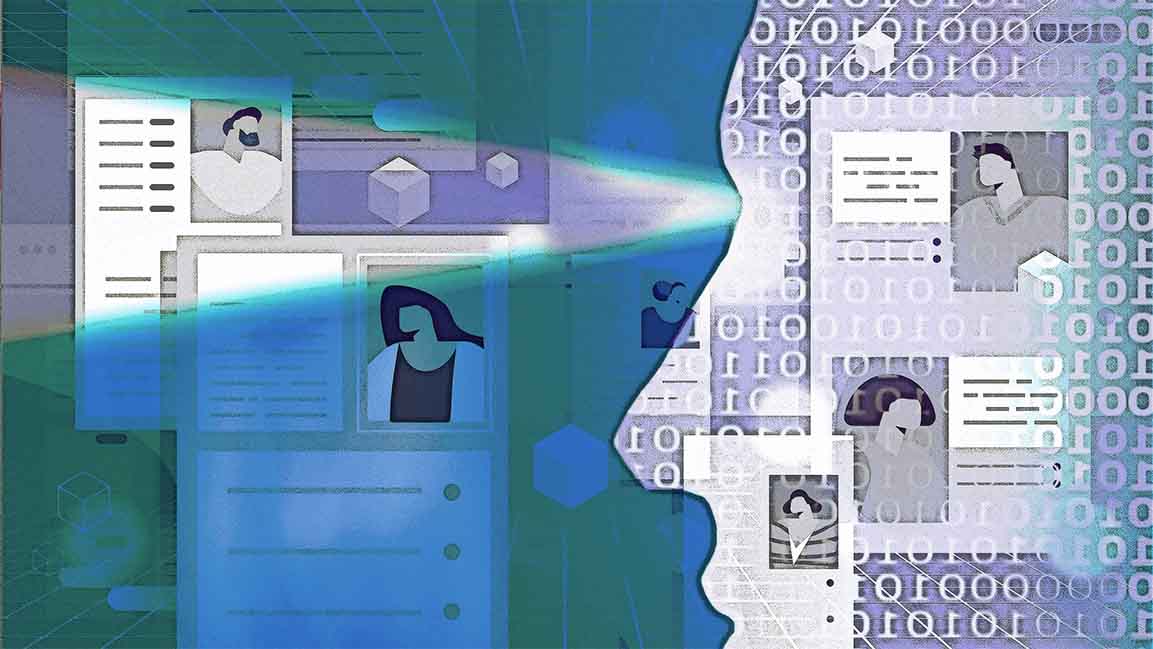

Studies have shown that some AI tools discriminate and use criteria unrelated to work. Even so, AI algorithms are now used extensively in recruitment, and companies use AI for at least one part of the recruiting and hiring process.

It is estimated at least half of all resumes submitted for jobs are read by algorithms.

“AI in hiring is gaining popularity in the Middle East, offering advantages like efficiency and data-driven decision-making, and helping recruiters find the best match of candidates for the job,” says Chaitanya Peddi, Co-founder of Darwinbox, an end-to-end HR technology solution.

So, in a region that has a multicultural workforce, will AI technology reduce bias in the hiring process or add to it?

AI-enabled software will likely change the biases in hiring processes but not reduce them, says Gleason. “AI-enabled platforms create new ways of measuring skills and competencies beyond the credentialing and experience metrics we use today.”

What we value in employees in the future will likely have bias, but it will be around competencies, Gleason says. “We need to monitor closely what new biases arise and prevent them from setting us back on ensuring multicultural workforces exist and thrive.”

According to Peddi, technology is critical in surfacing and mitigating bias in the hiring process. “Darwinbox’s analytics framework and bias detection help organizations identify and rectify bias at every stage of the hiring funnel, starting with ensuring inclusive JDs, surfacing inequitable representation in hiring channels, and guidance to hiring managers fostering a fair hiring process that aligns with the region’s multicultural workforce dynamics.”

DEMOCRATIZE THE HIRING PROCESS

Some say AI tools democratize the hiring process. If a company is drowning in applications, AI helps analyze them and assess every candidate the same way.

Marc Ellis, an HR and recruitment company, is set to introduce AIVI, an AI-powered virtual interview platform that offers candidates real-time feedback. The company claims the platform has already started garnering interest from job aspirants and big companies.

“The assessment will be based on the interview questions, which will be around experience, skills, and knowledge, the candidate’s response in comparison with the skills expected in the job description, and information provided by the employer. So there will be no room for bias,” says Aws Ismail, Director at Marc Ellis.

The platform is for the first round of interviews, which is the most time-consuming due to many applicants, adds Ismail.

Ismail says the company’s AI-powered platform uses the exact data it is provided with. “If the job description and the CV uploaded are accurate, the questions and assessments generated will be exactly as per the information provided, and therefore, it can’t reject qualified candidates.”

Research has found that, on average, a hiring manager spends at least 4 to 5 hours per week conducting interviews, equivalent to nearly three days of work per month. Although algorithmic tools are efficient and save costs for companies, they can still reject qualified candidates. They are not foolproof.

During the testing phase, Ismail says the platform encountered challenges in picking up the candidate’s voice and brought back some inaccurate results. “Having noticed this, the AI engine is getting trained to recognize different accents and dialects to ensure better results going forward. This will only get better in time.”

Although AI-backed tools are designed to prioritize candidates based on how their skills align with the skills specified in the job description, and streamline the recruitment team’s efforts by objectively ranking candidates, Peddi says, “AI-backed tools work most effectively when used in conjunction with human judgment.”

“They should be seen as partners that augment and enhance the decision-making process rather than replace it, ensuring a balanced and comprehensive evaluation of candidates’ qualifications and potential,” he adds.

REGULATION OF AI IS ESSENTIAL

As many begin to use AI in the hiring process, experts say regulating its use is crucial for ensuring fairness, transparency, data privacy, accountability, and ethical use. “These regulations are essential to prevent biases, protect candidates’ data, and maintain ethical standards in hiring practices, fostering trust in AI technology and its responsible application in the recruitment process,” says Peddi.

In the medium term, Gleason says, regulating the use of AI-enabled recruiting tools will be difficult to implement due to the geographic spread of companies and the proliferation of different platforms, tools, and websites that offer services in this area.

“Ideally, firms will set their standards for themselves quickly and develop mechanisms for constant monitoring to avoid oppressive algorithms that run counter to the values, mission, and vision,” she adds.

According to Ismail, regulation can be as simple as storing data from each interview in a form accessible to both employers and candidates, which would provide clarity should any queries arise about the interview result.

“The beauty about AI is that it is always learning and evolving, and therefore, the quality of the interviews will only get better and better, and therefore, it will effectively regulate itself,” Ismail adds.