- | 12:00 pm

Internet pioneer Vint Cerf: As AI becomes part of online life, we must embrace truth and accountability

A so-called father of the internet, Cerf says he worries about AI’s tendency toward misinformation.

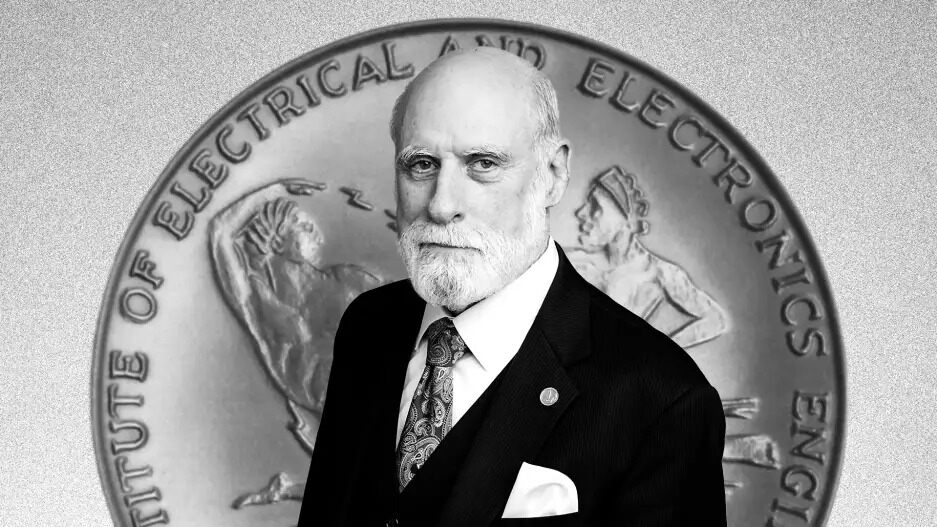

Vint Cerf, widely known as one of the “Fathers of the Internet,” will be presented next month with the Institute of Electrical and Electronics Engineers’ prestigious Medal of Honor. But awards are nothing new for Cerf, the codesigner (with Robert Kahn) of the TCP/IP protocols that enable the movement of all kinds of information over the internet.

Cerf’s latest accolade, which will be presented during IEEE’s Vision, Innovation, and Challenges Summit in Atlanta, comes at a time in the internet’s growth that is at once hopeful and perilous. While the internet has become a core part of personal and business life, it has also become a major platform for degrading truth and dispensing harm in both the digital and physical realms.

Fast Company chatted with Cerf, who now works as vice president and chief internet evangelist at Google, about the threats that generative AI and associated problems may pose to the internet and its users. His thoughts are transcribed below, lightly edited for length and clarity.

ON MISINFORMATION IN THE 21ST CENTURY

The availability of information, its rapid accessibility, of course, is vital to an informed society.

So the point I want to make here is that the internet and the World Wide Web and the indexing services like Google Search have all become means by which information is discoverable and accessible. And the problem . . . is that some of the information isn’t reliable. That’s also true for books and newspapers and magazines and television and so on. All of that is potentially hazardous information. It’s just that the World Wide Web has made it stunningly possible to find nuggets of information, good or bad, in an enormous pile of material. It is a massively facilitating capability. But now it puts a burden on those of us using the system because we have to recognize that the information is going to be highly varied in quality.

We try very hard at Google to serve up answers that have reliable information in them.

You may recall the story behind PageRank. Larry Page and Sergey Brin were graduate students at Stanford when they came up with this clever idea that says the pages that are pointed to by lots of other pages must be more important than the other ones. So why don’t we bring an order to them that way? And now we have a couple of hundred or more indicators that help us figure out how to rank-order the 10 million hits that we got. Because you can’t look at all 10 million at once. So this tool is very important, but it is not perfect.
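The core idea Cerf describes, that a page matters more when many other pages point to it, can be sketched as a simplified, textbook-style PageRank computed by power iteration. This is only an illustration under stated assumptions: the four-page "web" is hypothetical, the damping factor of 0.85 is the value commonly used in descriptions of the original algorithm, and Google’s actual ranking today combines hundreds of signals rather than this formula alone.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration (illustrative sketch only).

    links maps each page to the list of pages it links to.
    """
    # Collect every page, including ones that only appear as link targets.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal importance

    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump" term).
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its current rank, split evenly, to the pages it points to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: every other page points to "hub".
web = {
    "a": ["hub"],
    "b": ["hub", "a"],
    "c": ["hub"],
    "hub": ["a"],
}
scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Running it ranks "hub" first because three pages point to it, which is exactly the intuition Page and Brin started from; the ranks always sum to 1, so they read as shares of attention rather than absolute scores.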

ON AI CHATBOTS

I’m beginning to think that we need more and more indicia of provenance of the material that we serve up. And this is especially true when we start getting off into the chatbot space because AI chatbots are like salad shooters.

AI chatbots ingest information willy-nilly—huge amounts of stuff of varying quality. And even if it’s all factual, here’s what I discovered by my own little experiment. I decided to get one of these things to write an obituary for me. It tended to conflate all kinds of factual things incorrectly. So, for instance, it would attribute to me stuff that other people did and it attributed to other people the stuff I did. And I remember looking at this thinking, Well, how did that happen?

Imagine that facts are like vegetables and you’re going to make a salad. How do you do that? Well, you get this little thing called the salad shooter, right? You stick the carrots and the turnips and the lettuce in there. And it chops it all up into little pieces and puts it in a big bowl, which you then toss some more, and then you hand it out.

So what’s the analogy here? Suppose you take factual sentences and chop them all up into these little phrases, which are called tokens in the training systems. And then when [the AI] responds to your prompting, it just pulls up all these bits and pieces of factual stuff and it puts them together in a grammatically credible way. But that’s all it does—it’s grammatically credible, but that’s the only credibility it’s got. The result is that you can produce very convincing, very authoritative-sounding text that’s totally wrong. But because of the way the weighting system of the neural networks is struck, it looks very believable, especially if you don’t know what the facts are.
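The "salad shooter" effect can be reproduced with a deliberately crude stand-in for a language model: a bigram table that only remembers which word followed which. Real chatbots use neural networks over learned subword tokens, not a lookup table like this, so treat the sketch below as an analogy in code, with hypothetical function names. Fed two true sentences, it produces grammatical recombinations that swap who did what, much like the obituary Cerf describes.

```python
from collections import defaultdict


def build_bigrams(sentences):
    """Record which words can start a sentence and which word can follow each word."""
    following = defaultdict(set)
    starts = set()
    for sentence in sentences:
        words = sentence.split()
        starts.add(words[0])
        for current, nxt in zip(words, words[1:]):
            following[current].add(nxt)
        following[words[-1]].add("<END>")  # mark a place where a sentence may stop
    return starts, following


def all_outputs(starts, following, max_len=10):
    """Enumerate every word sequence the bigram table can produce."""
    results = []

    def walk(path):
        if len(path) > max_len:  # guard against endless loops in cyclic tables
            return
        for nxt in sorted(following[path[-1]]):
            if nxt == "<END>":
                results.append(" ".join(path))
            else:
                walk(path + [nxt])

    for start in sorted(starts):
        walk([start])
    return results


# Two true statements go into the "salad shooter".
facts = [
    "Cerf codesigned the TCP/IP protocols",
    "Berners-Lee invented the World Wide Web",
]
starts, following = build_bigrams(facts)
outputs = all_outputs(starts, following)
for sentence in outputs:
    label = "true " if sentence in facts else "FALSE"
    print(label, sentence)
```

Because both sentences share the word "the", the table cannot tell which continuation belongs to which subject, so half of its fluent outputs are false. Every individual step was seen in true input; only the stitching is wrong.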

A SUPEREGO FOR AI

This is a long way of saying that finding out some way of associating provenance with assertions that are made in the chatbot world will be a very important thing to invent for those of us who are absorbing the stuff. Because even if it’s all factual, which isn’t the case, you run into this problem. And I have seen some attempts to control this.

Remember Freud’s theory. There was the id, the ego, and the superego. The superego, like the prefrontal cortex, is supposed to exercise executive functions to keep you from doing the things you otherwise would. So I’m beginning to suspect, although I don’t know for a fact, that people are starting to combine these neural networks. You’ve got the one down here, which is like the chatbot thing; it generates God knows what all. And then there is this executive function saying, “What are you doing?” And sometimes it will just stop it and say, “I’m not talking to you anymore,” or “We’re not talking about that subject anymore.”

It’s almost amusing, but it’s also important. You and I as consumers of these various tools now have a burden. The price we pay for having instant access to all this information is to think critically about it. And we need to give people tools, provenance being one of them.

ASSOCIATING INPUTS WITH OUTPUTS

I think this is a technical goal in part, and that’s to find a way to associate the output of these systems with their input. And at the moment the salad shooter thing destroys a lot of the connections of provenance because there is ambiguity as to the sources of the information. So this is an interesting challenge. I don’t pretend to have a technical answer yet, but figuring out a way for the system to correctly associate that, with references, would be good. And I don’t think we know quite how to do the binding of the output. There [isn’t] any ability to select what the origins were of these [outputs].
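Cerf is clear that nobody yet knows how to bind a real chatbot’s output to its sources. In a toy model, though, the idea is easy to see: if each word-to-word transition keeps a label for the document it came from, a generated sentence can be checked against those labels. The sketch below assumes a simple bigram lookup table, hypothetical source IDs ("doc-1", "doc-2"), and hypothetical helper names; it works only because this model is a table, which is precisely the property large neural networks lack.

```python
from collections import defaultdict


def build_table(sources):
    """Map each (word, next_word) transition to the IDs of the sources it came from."""
    table = defaultdict(set)
    for source_id, text in sources.items():
        words = text.split()
        for current, nxt in zip(words, words[1:]):
            table[(current, nxt)].add(source_id)
    return table


def provenance(sentence, table):
    """For each step in a sentence, list which sources support that step."""
    words = sentence.split()
    return [
        (current, nxt, sorted(table.get((current, nxt), set())))
        for current, nxt in zip(words, words[1:])
    ]


def single_source(sentence, table):
    """True only if one source supports every step, i.e. nothing was stitched together."""
    steps = provenance(sentence, table)
    if not steps:
        return False
    common = set(steps[0][2])
    for _, _, ids in steps[1:]:
        common &= set(ids)
    return bool(common)


# Hypothetical source documents.
sources = {
    "doc-1": "Cerf codesigned the TCP/IP protocols",
    "doc-2": "Berners-Lee invented the World Wide Web",
}
table = build_table(sources)
for sentence in ["Cerf codesigned the TCP/IP protocols",
                 "Cerf codesigned the World Wide Web"]:
    verdict = "single source" if single_source(sentence, table) else "MIXED"
    print(sentence, "->", verdict)
    for step in provenance(sentence, table):
        print("   ", step)
```

The conflated sentence is flagged as mixed because its early steps trace to doc-1 and its later steps to doc-2. Keeping those labels through training and generation in a real system is the open problem Cerf is pointing at.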

ON IDENTITY AND ACCOUNTABILITY

One of the things I keep talking about, to myself and others and anyone who will listen, is accountability and agency. Just generally I think we need to find ways of holding parties accountable for what they do and say in the internet environment. And those parties could be individuals or companies or even countries. That means identifiability is important. And that leads into some tough questions about anonymity and pseudonymity and protecting people’s privacy, but at the same time holding them responsible for what they do.

I need to give you another analogy. I do believe in anonymity, or at least pseudonymity, but a license plate has this interesting characteristic. It’s just gobbledygook on the license plate, but some people, police in particular, have the authority to penetrate the anonymity and ask who owns the car. But they only get to do that because they have the authorization to do that. So we need to have a similar kind of penetration of pseudonymity at need and with adequate protections. In our case at Google, for example, that means a court order before we are willing to reveal any information.

A SOCIAL CONTRACT FOR DIGITAL LIFE

This is not a new problem. Our civilizations have had this problem forever. If you remember the social contract in Rousseau, he’s basically saying citizens give up a certain amount of freedom in exchange for protection and a safe society. That is, my freedom of action [to swing my fist] stops 3 microinches from your nose. And that’s an important concept.

So I think we’re struggling now in this online space to figure out how to instantiate those options. And on the agency side, the same argument holds. We have to provide to individuals and organizations and countries the means by which to protect themselves from harm in this online environment—from being misled, from being defrauded, etcetera, etcetera. How the hell are we going to do this? How do we preserve people’s rights and freedoms while at the same time building guardrails so that people are not harmed by this environment?