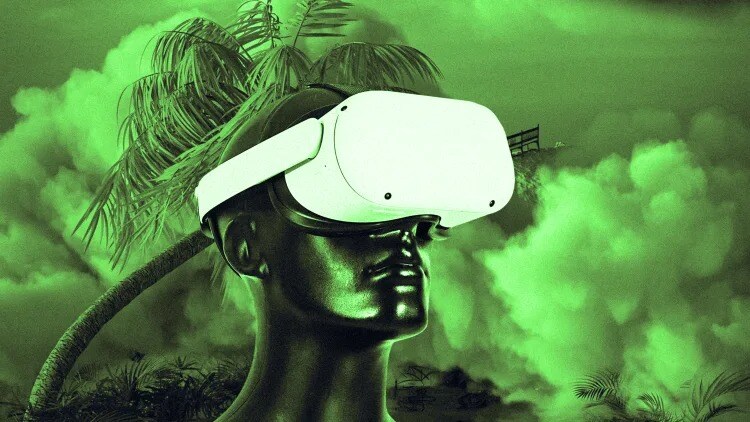

Meta is adding AI to its Quest headsets. That could be a sign of what’s to come

The social giant has been planning AI-powered mixed reality headsets for years. The latest Llama brings it closer to that goal.

META’S AI MIXED REALITY VISION IS COMING TOGETHER

Meta says it will put its Meta AI assistant in its Quest mixed reality headsets starting this August. On the Quest 3, the AI will be able to look at real-world objects via the headset's passthrough video (i.e., a live view of the outside world that's broadcast onto the device's screen) and provide information about them. That means you could watch a video of a Palm Springs resort inside the headset and ask the AI for good vacation outfits, or hold up a pair of shorts in passthrough view and ask the AI what color shirt would match.

Behind this magic is Meta's open-source Llama model. The AI functions on the Quest 3 will have to be powered by a new, multimodal Llama model, one that can use computer vision to process and reason about images. (The model powering the Quest is different from the models Meta announced on Tuesday.) The Meta AI assistant is already offered on the Ray-Ban Meta smart glasses, but only as audio.

The Quest 3 announcement marks the social media giant's next step toward its yearslong goal of making an AI assistant a central feature of its mixed reality headsets and glasses. Meta Reality Labs chief scientist Michael Abrash told me in 2020 that Meta's head-worn devices would one day collect all kinds of information about the user's habits, tastes, and relationships, all from the device's cameras, sensors, and microphones. All that data, Abrash said, could be fed into powerful AI models that could then make deep inferences about what information you might need or what activities you might want to engage in.

“It’s like . . . a friend sitting on your shoulder that can see your life from your own egocentric view and help you,” Abrash said at the time. “[It] sees your life from your perspective, so all of a sudden it can know the things that you would know if you were trying to help yourself.”

Meta still has a lot of work to do to make head-worn computers smaller, lighter, and more fashionable. The company also has to invent new chips that are small enough and powerful enough to run AI models. Still, Abrash’s vision for AI-powered mixed reality seems closer than ever.

WHERE WOULD KAMALA HARRIS STAND ON AI REGULATIONS?

Silicon Valley is just now coming to grips with what a Kamala Harris administration might look like, including where she might stand on regulating AI. The assumption is that Harris would continue promoting the main themes of Biden's executive order on AI. The executive order directs federal agencies to develop usage guidelines for AI, but it also roughs out some directives for regulating private U.S. companies that develop the largest frontier models. Those companies, such as OpenAI, Anthropic, Meta, Google, and Microsoft, would have to periodically report their AI safety practices to the government.

Some in the tech industry have complained that such requirements would slow down progress in AI research. Others say that the government's definition of a "frontier" model will soon capture the work of smaller AI companies, which would then face an undue burden in complying with the regulations. Harris, whom Biden originally tapped to lead the White House's talks with AI companies, has called the either/or of regulation versus progress a "false choice."

Still, Harris seems to believe that AI may pose serious dangers to the U.S. In a November 2023 speech, she warned that AI could "endanger the very existence of humanity." She also told tech execs such as Microsoft's Satya Nadella, OpenAI's Sam Altman, and Alphabet's Sundar Pichai that they have a "moral" imperative to make sure AI is safe.

ANDURIL WON A CONTRACT TO DEVELOP AUTONOMOUS FIGHTER JETS

The Air Force is operating with fewer pilots than at any other point in its history, yet it must always stay prepared for combat. Enter the Air Force's Collaborative Combat Aircraft (CCA) initiative, which envisions U.S. fighter planes flanked by one or more unmanned, autonomous aircraft. An F-35 pilot, for example, might send an autonomous drone ahead to gather reconnaissance information (using specialized sensors and cameras) and report back. This allows the $110 million F-35 to hang back in safer airspace. Another, munitions-laden drone might be used to strike a target so that the F-35's position isn't revealed.

Anduril, the defense tech company founded and funded by Palmer Luckey, was one of two companies selected by the Air Force to build prototypes of the drones. (General Atomics was the other.) Anduril's Fury drone looks something like a gray shark with wings. It can fly at near Mach 1 and withstand 9 Gs of stress. The Furies are controlled by an AI platform called Lattice, which is shared between the fighter jet and support personnel on the ground or at sea.

Since the point of CCA is to use drones as proxies for human-piloted aircraft, the machines must be cheap enough to be expendable in some situations. To keep costs down, Anduril stresses that it uses off-the-shelf components whenever possible when building its equipment, along with existing digital design and testing tools.

The Pentagon is still working on a clear definition of "autonomy" for AI drones of all kinds. The CCA drones may never reach full autonomy, because of a law requiring that they always respond to a human operator "within the kill chain." But the Air Force has identified numerous use cases that don't require full autonomy. Many in defense tech circles view the CCA program as a turning point in the way the Pentagon sees, and buys, AI.