8:00 am

OpenAI’s new o1 models push AI to PhD-level intelligence

The new models can methodically reason through complex tasks, recognize their mistakes, and employ novel problem-solving strategies.

On Thursday, OpenAI introduced OpenAI o1, a new series of large language models that the company says is designed to solve difficult problems and work through complex tasks.

The models were trained to spend more time on tasks than other AI models, thinking through problems much as a human might. They can “refine their thinking process, try different strategies, and recognize their mistakes,” OpenAI says in a press release. According to the company, the models perform similarly to PhD students on challenging physics, chemistry, and biology problems.

The o1 models scored 83% on a qualifying exam for the International Mathematical Olympiad, OpenAI says, while the company's earlier GPT-4o model correctly solved only 13% of the problems.

OpenAI offered some specific use cases. Healthcare researchers could use the o1 models to annotate cell-sequencing data, physicists could use them to generate the complicated mathematical formulas needed for quantum optics, and developers could use them to build and execute multistep workflows. The models also perform well at math and coding.

Within OpenAI, the o1 models were first codenamed “Q*” (pronounced “Q-star”), then “Strawberry.”

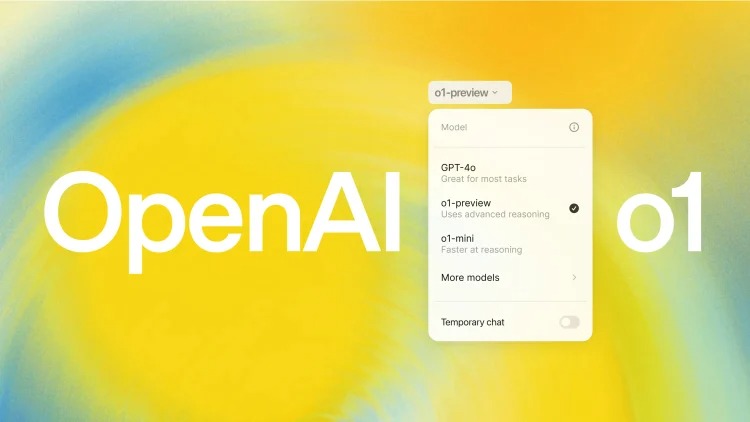

OpenAI says it’s taking a slow and cautious approach to releasing the new models, starting with “early previews” of two models in the series. People with ChatGPT Plus or Team accounts can access “o1-preview” by choosing it from a drop-down menu within the chatbot. They can also choose “o1-mini,” which OpenAI says is faster and particularly good at STEM questions.

Developers and researchers can access the models within ChatGPT and via an application programming interface.

OpenAI says the new models won’t initially be able to access the internet, and users won’t be able to upload images or files to them. The company says it has strengthened the safety features around the models and has informed federal authorities about their increased capabilities.