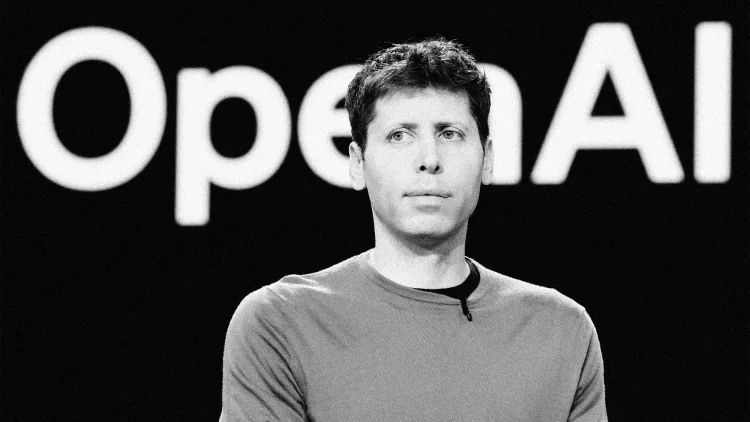

The problem with Sam Altman’s vision for a ‘shared prosperity’ from AI

The OpenAI CEO published a blog post this week heralding the coming of an AI-driven abundance for all, but not everyone’s buying it.

Sam Altman may be right that a bright AI future is coming, but the benefits may not be evenly distributed.

In a rare post on his personal blog on Monday, the OpenAI CEO heralded the arrival of an AI-induced “Intelligence Age.” We’re on the verge of this new prosperity because “deep learning worked,” he writes. OpenAI, in his telling, proved that if you train large language models with more and more computing power, you get predictably smarter AI.

According to Altman’s vision, we’ll all have our own team of virtual experts, personalized tutors for any subject, personalized healthcare valets, and the ability to create any kind of software we can imagine. “With these new abilities, we can have shared prosperity to a degree that seems unimaginable today,” he writes. And most amazing of all: This utopia can start in just “a few thousand days.”

Sure, AI might accelerate human progress for the greater good. But it seems more likely to simply concentrate unprecedented intelligence in the hands of the few who have the resources and skill sets to apply it.

Altman, for his part, suggests that ensuring even distribution is just a matter of producing enough computing power, computer chips, and energy. “If we don’t build enough infrastructure, AI will be a very limited resource that wars get fought over and that becomes mostly a tool for rich people,” he writes.

But as several people have pointed out on X, OpenAI itself is a purveyor of “closed AI.” That is, the company doesn’t open-source its models, so other developers can’t modify or customize them for specific use cases or host them within their own domains for data security. That’s partly because building huge frontier models is extremely costly, and the company and its investors have an interest in making sure that the research is protected as intellectual property.

People in the open-source community, meanwhile, believe that AI models can be made better, safer, and more equitable when their raw materials (the models, parameters, code, training data, and so on) are put in the hands of many people throughout the ecosystem. Many of the AI models in production over the coming decades will indeed be open-source, but most of today’s largest and highest-performing frontier models are closed. Altman, as a member of OpenAI’s board, was the driving force behind tightly controlling access to the models and focusing the company on monetizing them. That approach doesn’t square with the company’s original “AI for all” ethos.

“I’m a firm believer that closed source would do net [sic] more harm than good overall, in the long term,” Hugging Face engineer Vaibhav Srivastav posted on X. “Concentration of intelligence is not the way forward!”

More likely, the benefits of the Intelligence Age will gradually “trickle down” from well-monied corporations to the rest of us. Large tech companies developing and selling AI will benefit, infrastructure companies such as Nvidia will benefit, and large corporations of all kinds will benefit by replacing human information workers with less expensive AI. A broad elevation of our standard of living may be a macro effect that comes much later.