
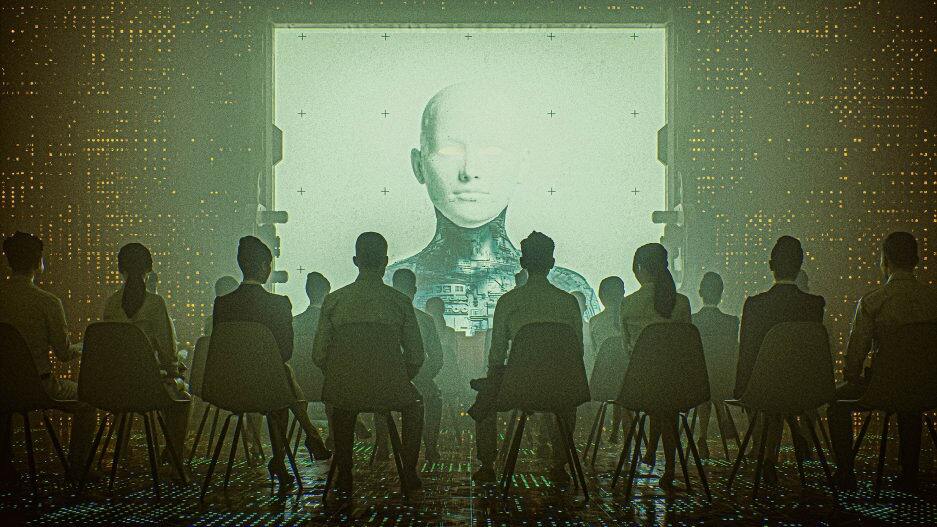

Your next job interview could be with a bot

Employers say AI makes hiring more efficient and less painful for workers, but critics caution that bias risk remains.

If you soon find yourself on the job hunt, there’s a growing chance you’ll be sitting down with a bot for an interview.

AI is increasingly involved in the application process at companies ranging from fast food restaurants to software startups, helping to screen résumés, manage scheduling, and conduct interviews. Employers say the technology is a near necessity in a competitive landscape where job postings can see hundreds or even thousands of applicants who are expecting responses at internet speed.

In the case of retail and restaurant jobs, leaving applicants with a good post-application impression—regardless of whether they ultimately get the position—is particularly important, because those potential hires are also potential customers, says Barb Hyman, founder and CEO of the Australian startup Sapia.ai.

“They’re going to reject hundreds of thousands, maybe millions, but they don’t want to lose them,” she says, “and they want them to feel that they got a fair go.”

Sapia’s core offering is an AI chatbot that conducts brief, first-round job interviews through a text interface, which Hyman says can be lower-stress and more convenient for job seekers. Applicants give short answers, generally 50 to 150 words, to questions about their skills and experiences. The AI system maps those answers to particular personality traits, like humility, critical thinking, and communication skills, and assigns applicants a personality profile (“Change is something that excites you rather than threatens you,” was one insight I received during a demo session). Meanwhile, employers get a shortlist of matching candidates.

“I didn’t want to have a huge team of people just doing really mundane screening of entry level roles,” says Rose Phillips, head of partner resources at Starbucks Australia, which has been using Sapia to hire for in-store positions since June. “The other reason was to try and improve the quality of applicants that we were referring to our store managers, [since] they don’t have a huge amount of time to spend on recruitment.”

Sapia’s competitors offer AI for any number of stages of the hiring process. HireVue, for example, uses AI to assist in recorded video interviews, while Moonhub’s AI scours the internet for potential job candidates whom traditional recruiters might otherwise miss. Other companies use AI in more limited ways, like offering chatbots that can collect basic factual information from applicants and answer questions about the application process.

AI hiring companies generally claim that in addition to being efficient, their software is trained to avoid bias based on race, gender, and other protected classes. But critics caution that AI can pick up the existing prejudices of the corporate world. An experimental AI recruiting system at Amazon several years ago was famously abandoned after reportedly discriminating against women based on keywords more common in women’s résumés, and stories of AI bias in other domains are almost too numerous to list. Simply teach an AI system to predict who will fare well in an already biased system, and you’ll likely build a bigoted bot.

“While AI can be designed to mitigate bias, I don’t even know if that’s the norm—I would say that’s not the norm, necessarily,” says Frida Polli, the cofounder and former CEO of Pymetrics, who last year coauthored a paper on using AI to fight hiring bias. “And so we do have to be wary of the fact that AI can also mirror and magnify human bias.” (Polli is no longer affiliated with Pymetrics, and the company didn’t respond to inquiries from Fast Company.)

In the United States, assessing hiring tools for bias typically includes looking at whether they produce a “disparate impact” on particular groups, like certain genders or races. An Equal Employment Opportunity Commission (EEOC) guideline known as the four-fifths rule holds that a job-related assessment should be considered suspect if the passing rate of one group is less than 80% of the passing rate of the best-performing group.
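To make the arithmetic concrete, here is a minimal Python sketch of the four-fifths comparison described above. The function name `four_fifths_check`, the group labels, and the applicant counts are all hypothetical, chosen only for illustration; they are not EEOC data or any vendor's method.

```python
def four_fifths_check(outcomes, threshold=0.8):
    """Compare each group's passing rate with the best-performing group's rate.

    `outcomes` maps a group label to a (passed, assessed) pair of counts.
    A group is flagged when its passing rate is less than `threshold`
    (80% by default) of the highest passing rate.
    """
    rates = {}
    for group, (passed, assessed) in outcomes.items():
        if assessed <= 0:
            raise ValueError(f"group {group!r} has no assessed applicants")
        rates[group] = passed / assessed

    best_rate = max(rates.values())
    if best_rate == 0:
        raise ValueError("no group has any passing applicants")

    return {
        group: {
            "rate": rate,
            "ratio": rate / best_rate,
            "flagged": rate / best_rate < threshold,
        }
        for group, rate in rates.items()
    }


# Hypothetical counts: 48 of 80 applicants pass in one group, 12 of 30 in another.
example = four_fifths_check({"group_a": (48, 80), "group_b": (12, 30)})
```

With these made-up numbers, the first group passes at 60% and the second at 40%. The second group's rate is about 67% of the first's, which falls below the 80% line, so the assessment would be considered suspect under the rule.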

But scholars have long said the four-fifths threshold, which has been in place since the 1970s, feels more or less arbitrary, and as Center for Democracy and Technology attorney Matt Scherer points out, it’s possible for an AI screening system to pass the disparate impact test in ways that aren’t actually useful. That could mean shortlisting roughly equal numbers of people from different races or genders while still doing a poor job of evaluating candidates other than, say, white men.

“They’ll come up with a tool that selects or scores people at roughly the same rate across demographic groups,” says Scherer. “The problem is that that approach doesn’t necessarily get you, for example, the best women candidates if you’re in a traditionally male-dominated job. Instead, what it’ll give you is female candidates who share the most traits with male candidates.”

Federal law generally doesn’t require companies to disclose AI systems they’re using, or AI hiring vendors to share information about how their systems work or what sort of antibias testing they’ve done. That means it’s often difficult for job applicants to know much about systems in use at particular companies and how they make decisions. In some cases, where AI is used to review résumés or recorded video interviews, applicants may not even know it’s being used at all.

A New York City law that attempts to remedy that, known as Local Law 144, went into effect last year, but experts say so far the impact has been limited. The law requires NYC employers who use an AI tool that “substantially assists or replaces discretionary decision-making” in employment decisions to commission an annual independent bias audit, publish the results, and notify local job applicants that the tool is in use. But a study published in January identified only 18 employers that posted audit reports, which the study authors say may be due to a loophole enabling employers to determine whether AI is sufficiently involved in decision-making to trigger the law’s requirements.

And since the law doesn’t create a central repository of audit reports, it’s effectively impossible to compile a list of employers creating them under the law other than by checking their websites one by one.

Even auditors can have difficulty accessing details needed to understand how well AI systems work, according to a forthcoming follow-up study. That’s because the audit requirement doesn’t apply to the AI companies that operate the systems and have data about how they work, only to the NYC employers who use their services, perhaps because they’re more cleanly under City Council jurisdiction. “Really almost nowhere else in the digital economy do we put regulatory obligations on the end user,” says Jacob Metcalf, a researcher at Data & Society and one of the authors of both studies.

AI companies would point out that they do take steps to ensure applicants are comfortable with the experience. Sapia shares its personality profiles, video interview firms often let applicants rehearse and reshoot video until they’re comfortable with it, and HireVue in 2021 announced it had eliminated a controversial feature that visually analyzed applicant video. The feature made job seekers self-conscious about facial movements and wary of discrimination based on attributes like skin color, says Lindsey Zuloaga, chief data scientist at HireVue, and the company found it added little useful data on top of analyzing what applicants were saying.

“At some point, we decided the natural language processing piece is just getting so much more powerful,” says Zuloaga. “Let’s just drop the rest of it.”

But a Pew Research Center survey released last year found many Americans are generally wary of AI hiring tech, with 71% opposed to AI making a final hiring decision and 41% opposed to AI reviewing job applications at all. Some 66% of U.S. adults say they wouldn’t want to apply to a job with an employer using AI “to help in hiring decisions,” according to Pew, with some respondents concerned about bias and the general limitations of AI to understand a job candidate the way a human would.

Of course, dissatisfaction with the job application process didn’t begin with AI. Job seekers have for years complained about hiring bias, impersonal application processes, recruiters overly reliant on mechanistic buzzword matching, and unsettling personality tests. Indeed, says Scherer, part of the reason it can be hard to automate employment decisions is that unlike other applications of machine learning like email filtering, where people generally agree which messages are spam, there’s nothing like a universal consensus about how to evaluate workers or what makes a good employee. “There isn’t usually massive agreement across lots of different managers and recruiters on exactly how good or bad each employee is at their job,” he says.

And despite optimism among some of the Pew survey participants about human understanding, bias by human hiring staff is well-documented. Hiring staff have a tendency to look for candidates who look like themselves, and people’s gut instincts are often more flawed than they realize. Oft-cited research from the hiring site Ladders suggests human recruiters screening résumés often spend only six or seven seconds per candidate, which Polli says makes skewed decision-making essentially inevitable without the aid of an automated system carefully designed to fight bias.

“It’s not to say that the human mind can never make unbiased decisions,” says Polli. “It’s just not going to do it in this standard résumé review process that is six seconds long.”

In theory, that could be addressed by more rigorous human screening, with trained evaluators carefully weighing each applicant’s pluses and minuses. Scherer suggests human evaluation could be coupled with AI tools limited to providing candidates with basic information, verifying they meet minimum job requirements, and perhaps suggesting candidates that might be worthy of a second look.

But at the moment, when employers looking to ramp up staffing seem naturally wary of assigning the workers they do have to time-consuming candidate screening, dealing with AI seems increasingly inevitable for people applying to many kinds of jobs.