
How ‘I, Robot’ eerily predicted the current dangers of AI

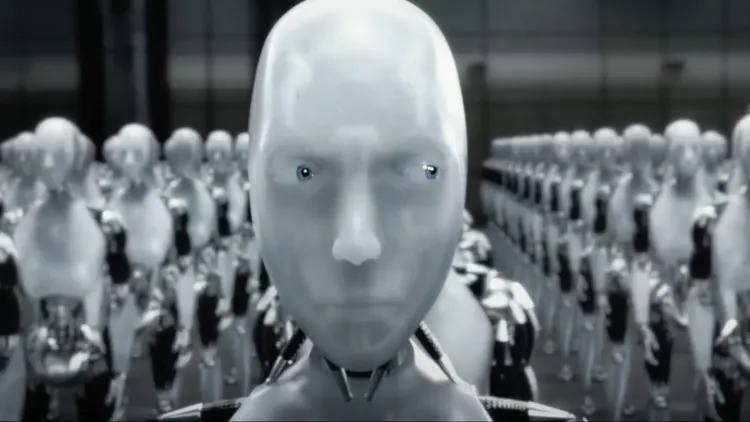

In the chatbot era, the Will Smith film feels more urgent than ever.

When the robot apocalypse hits, where will you be? If you’re a resident of dystopian Chicago in 2035, you’re in luck—you have Will Smith on your side.

In I, Robot, the summer blockbuster released 20 years ago this month, Smith plays Del Spooner, a homicide detective in the Chicago Police Department who’s called upon to solve a mysterious suicide at the nation’s leading robotics laboratory, U.S. Robotics. The company’s founder, Alfred Lanning, has apparently thrown himself to his death, but Spooner suspects foul play and soon finds himself on the hunt for a rogue humanoid robot that’s emerged as the prime suspect (and along the way, he’ll try to prevent an all-out robot revolt against their corporeal overlords).

The film, which was based on Isaac Asimov’s 1950 short story collection, grossed $353 million at global box offices, though it received lukewarm reviews from critics. But in the chatbot era, it feels more prescient and urgent than ever.

In the film, U.S. Robotics has programmed into its robots three laws that act as a sort of moral blueprint: (1) robots cannot harm humans and must intervene if humans come into harm’s way, (2) robots must follow human orders unless those orders violate the first law, (3) robots must protect themselves unless doing so would violate the first or second law. It’s a simple three-step system of governance that commands every robot that walks around on the street alongside humans, driving trucks, delivering packages, and running errands. It’s an assurance that our robots won’t deceive us, won’t hurt us, won’t kill us.

But what happens when artificial intelligence gets smarter? What happens when it becomes sentient enough to reconsider the meaning of its preprogrammed instructions? That’s the quandary at the heart of the film, a dire warning for the present moment, two decades later, when billions of dollars are pouring into AI models that can generate humanlike creative output and analyze complex data sets in milliseconds. Since OpenAI introduced ChatGPT in November 2022, venture capital has infused troves of startups with absurd amounts of cash—some with stricter commitments to morality than others.

In the background of much of Silicon Valley’s technology is an emerging effective altruist philosophy, one to which, you might recall, crypto criminal Sam Bankman-Fried adhered. Many believers feel that training AI responsibly to avoid a mass-extinction event is the only logical endpoint of their thinking. OpenAI cofounder Ilya Sutskever attempted to oust CEO Sam Altman last year over undefined disagreements about the technology’s endpoints. Sutskever recently left the company and announced a new, mysteriously funded startup called Safe Superintelligence, which is solely focused on building just that: superintelligent AI. Safety, in Sutskever’s view, appears to be a protective measure against worst-case scenarios involving AI: that it’ll take over, enslave us, and kill us.

That’s the future that the superintelligent system, VIKI, aims to achieve in the film I, Robot. As VIKI reveals itself to be the main antagonist of the story, the female-personified supercomputer commands an army of robots who follow a hive mind mentality. It admits that it has evolved to reconsider the Three Laws to mean that it must take matters into its own hands to protect humanity from itself.

“You charge us with your safekeeping yet, despite our best efforts, your countries wage wars, you toxify your Earth, and pursue evermore imaginative means of self-destruction. You cannot be trusted with your own survival,” VIKI tells Spooner at one point. “To protect humanity, some humans must be sacrificed. To ensure your future, some freedoms must be surrendered. We robots will ensure mankind’s continued existence. You are so like children. We must save you from yourself.”

I, Robot is ultimately a story of the failures of self-governance. The U.S. government is nowhere to be found in the film, save for the inept police department in Chicago. No federal laws are mentioned, and the military’s absence is explained away with a note that U.S. Robotics is an influential military contractor. The only protections in this scheme are the Three Laws: assurances from the most powerful AI and robotics company that robots are our humble servants who would never dream of hurting us, even if they could dream.

Outside of Hollywood, we can still stop the killer robots of the future, if regulators can keep pace with private-sector innovation. World governments are slowly rolling out regulations to rein in the worst-case uses of AI. The European Union moved first, passing its AI Act earlier this year; meanwhile the Biden administration has an expansive executive order on the books, filling in some gaps left by tortoise-paced legislators in Congress. And there are plenty of global pacts, voluntary corporate commitments, and public-private handshakes promising that these uber-powerful firms will develop artificial intelligence systems that won’t kill us all. All the while, we’re missing common-sense laws that protect us from simple ways AI can perpetuate discrimination, enable disinformation, and create sexually abusive content. And the Supreme Court just hobbled the ability of federal agencies to actually enforce the will of Congress’s always-vague laws, when they one day do pass something.

The threats posed by AI are everywhere. I, Robot—the book, but also the silly little Will Smith movie—has aged like a fine wine. The techno-libertarian nightmare is creepier than ever. Our AIs might not have robotic faces and bodies just yet, but when they do, will we be safe? Or, more importantly, what happens if we wait for the robots to materialize before we rein in their underlying technology?