How to make AI more emotionally intelligent

Experts from the Yale Center for Emotional Intelligence explain how to address the worker loneliness epidemic and workers’ concerns about AI.

There is no shortage of commentary on artificial intelligence. From the social media conglomerates of Silicon Valley to the political powers of Washington, D.C., there are many unanswered questions about the future of AI. Namely, what to do with it, what to do about it, and what comes next?

However, another kind of intelligence has also emerged and evolved alongside AI over the past 30 years: emotional intelligence (EI). In order to positively shape the future of work and protect workers in an increasingly artificial world, we need to preserve what it means to be human.

Here’s how we can make AI more emotionally intelligent in our workplaces, communities, and schools.

The rise of two intelligences

AI refers to the simulation of human intelligence processes by machines, particularly computer systems.

EI, on the other hand, refers to how humans reason with and about emotions. It’s a set of skills that includes the ability to:

- Recognize emotions in oneself and others

- Understand the causes and consequences of emotions

- Label emotions with precise words

- Express emotions in accordance with social and cultural norms

- Regulate emotions to achieve goals and well-being

As scientific fields such as EI and social and emotional learning (which brings EI and related skills to schools) gained traction in the 1990s and 2000s, the internet was also growing and reshaping nearly all facets of daily life. The internet led to the development of new gadgets, tools, and platforms, like social media.

At first glance, it might seem that as AI advances, human capabilities like EI would diminish in importance. After all, machines excel at tasks that require logic, analysis, and computation, often outperforming humans. Yet, AI learns from humans first, including from what we excel at (like our capacity for humor, empathy, and love) and what we don’t.

Indeed, the reality of a dual intelligence—one artificial and one emotional—is far more nuanced than “us versus them.”

Striving for connection and dying from disconnection

Humans have never had more means for connection than we do today. Phones, tablets, email, social media: you name it. And now the mass proliferation of AI tools like ChatGPT has promised a fast-paced world in which you can do it all and be it all: happy, successful, productive, and influential.

But that’s not the full picture. Deaths of despair have skyrocketed. And as U.S. Surgeon General Vivek Murthy proclaimed, we are facing an epidemic of loneliness. According to research from the American Psychiatric Association, 30% of adults report feeling lonely at least once a week and 10% are lonely every day. This data depicts a harsh reality: workers are lonely, disconnected, and hurting. But it’s not from a lack of trying.

From Slack and Snapchat to TikTok and Tinder, the menu of options humans have created for connection is plentiful and ever-growing. It seems then that our epidemic of loneliness and poor mental health may be caused not by a lack of opportunity for connection but by a lack of meaningful relationships. People’s desire to engage with someone, or something, is a symptom of the very disconnection that drives them back to the dopamine-fueled interactions of our virtual worlds.

How can AI and EI coexist?

The science of EI reminds us that there is no such thing as a bad emotion. Emotions are information; they are not good or bad. Just as there is no such thing as a bad emotion, we believe there is no such thing as inherently bad, or evil, AI. AI is not sentient. Which is precisely why we need to be careful.

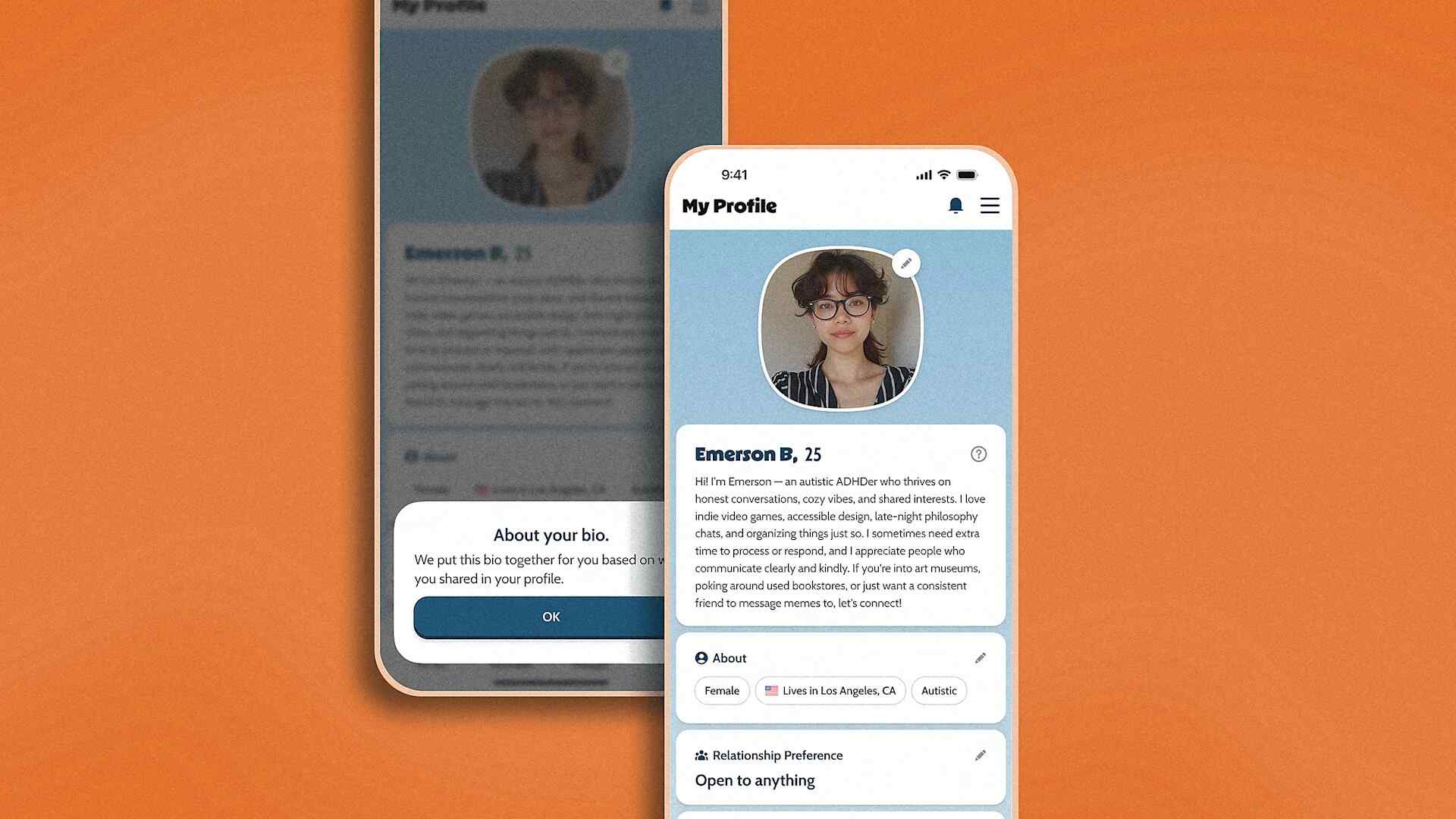

For 15 years we consulted with leading tech companies on the integration of EI into AI and social media platforms. We have seen the positive impact of this work. For instance, teams have built interfaces that facilitate students’ online socioemotional awareness and self-regulation. But we have also seen the negative impact of this work. For instance, we have received requests to create AI products that would interact with people by ‘expressing’ emotions or attempt to build an advice-giving relationship with them.

To be sure, AI can be harnessed to improve EI. But in the end, we strongly believe AI is neither a friend nor a therapist. AI is simply another tool or device, and our devices often fall short of our expectations, especially when we attempt to anthropomorphize them.

For example, virtual assistants that try to form a relationship with us can be dangerous: they can exacerbate loneliness and leave us hungry for human touch. And AI’s capacity to interpret and understand emotion is restricted by the fact that we live in a rich tapestry of cultures in which feelings are perceived and expressed in diverse ways.

Current mental health statistics make it evident that we must prioritize the work of building authentic connections in the “real” world—a world in which AI and EI can, in fact, coexist, one enhancing the other. Here are a few strategies to get there.

1) Formalize EI education in schools

The research is clear: people with more developed EI are healthier, happier, and more productive.

Leaders at Fortune 500 companies say that at least 70% of our jobs require EI skills like teamwork, relationship management, and resilience. And leaders with higher EI create healthier workplaces. Yet our informal surveys suggest that fewer than 10% of adults in the US say they had a formal education in emotions or emotional intelligence. We need individual schools, school districts, and workplaces to prioritize EI.

Parents also need an education in EI. These skills are learned first at home and need to be practiced there. Why? Promoting good mental health lessons at home lessens the burdens on schools and companies.

What’s more, a 2023 systematic review and meta-analysis of 424 research studies involving more than 500,000 students in 53 countries concluded that students who participated in social and emotional learning (SEL) interventions had elevated emotion and social skills, higher academic achievement, and better quality relationships. Participation in SEL was associated with less anxiety, depression, and aggressive behavior. And students felt safer and more positive while in school.

2) Policymakers and CEOs need an EI education

Leaders also need to learn the importance of EI. Emotions are inextricably linked to identity and culture, and influence everything from our physical health to our professional performance. Development of emotion skills depends on who we interact with in the world and how.

Tech CEOs need employees with high EI who understand how products and algorithms impact the emotional development of users and can perpetuate inequities. Employees in tech companies need CEOs who model high EI.

Likewise, policymakers need EI. They use checklists to measure the impact of their decisions on the environment, on corporate governance, on equity, and other criteria—why not on the mental health of our society? Imagine a world where corporate and public decisions and policies are judged by how they affect the ability of people—children included—to manage their emotions, cope with loneliness, and develop meaningful relationships. What would be different?

In short, if we want AI to be emotionally intelligent, we must first demand EI of ourselves and our leaders.

3) The people who build and use AI need EI

We believe that AI, when fused with EI, has the capacity to help, not hinder, our humanity. Therefore, the people who are building AI must prioritize and embed EI into the very designs we interact with on a regular basis.

Fortunately, this is already happening in many ways. AI is being used in psychology to identify mental health concerns such as loneliness.

With the formalization of EI education, alongside parents, policymakers, and CEOs who understand the importance of emotion skills, we can create a future in which AI is not combatting our very human nature but complementing it through intentional, emotionally intelligent design.