POV: HR departments need to fear AI’s racial biases

A chief product officer argues that HR departments need to be vigilant to surface racial bias challenges when using common AI tools like ChatGPT.

As artificial intelligence is integrated across the workforce, HR professionals are feeling the squeeze to adopt tools like ChatGPT to make their teams more efficient. But it can be a tricky balance. Investigations have shown that common AI tools like ChatGPT exhibit what appears to be racial bias when asked to perform basic HR processes.

Like executives across the country, many HR leaders are embracing AI. Some HR leaders are afraid they’ll fall behind if they don’t adopt AI and fear they’ll be replaced if they do.

HR teams are under tremendous pressure. Job seekers and employers alike expect them to improve the hiring process—all while being asked to do more with less.

The quiet quitters and the cross-country movers of the pandemic set off a wave of resignations. And across industries, companies have increasingly struggled to retain ever more fickle employees.

Ironically, HR teams are among those with the highest turnover rate. One reason for this is that HR professionals experience high levels of burnout.

Creating job descriptions, sourcing applicants, fielding résumés, and managing candidates throughout the hiring journey are all time-consuming processes that eat up the capacity of already squeezed HR teams.

In this way, many feel that AI has been a boon for HR teams and the tech companies that service them, as organizations look to outsource more of HR’s rote practices. Since the pandemic, the landscape of HR technology companies has grown dramatically. For instance, the global human resource technology market was valued at $32.58 billion in 2021 and is estimated to reach $76.5 billion by 2031.

THE RISK OF USING AI IN HR

Yet as companies integrate AI into their HR workflows, they risk running up against the “black box” element of artificial intelligence. That is, AI typically offers no audit trail for where the data came from, and insufficient guardrails as to what kind of biased data the models have consumed.

As a chief product officer who works closely with HR practitioners, I believe this is a dangerous trajectory. Candidates may face unfair hiring processes as companies inadvertently allow bias to seep into their decision-making, leaving those companies vulnerable to legal action, regulatory sanctions, and financial penalties.

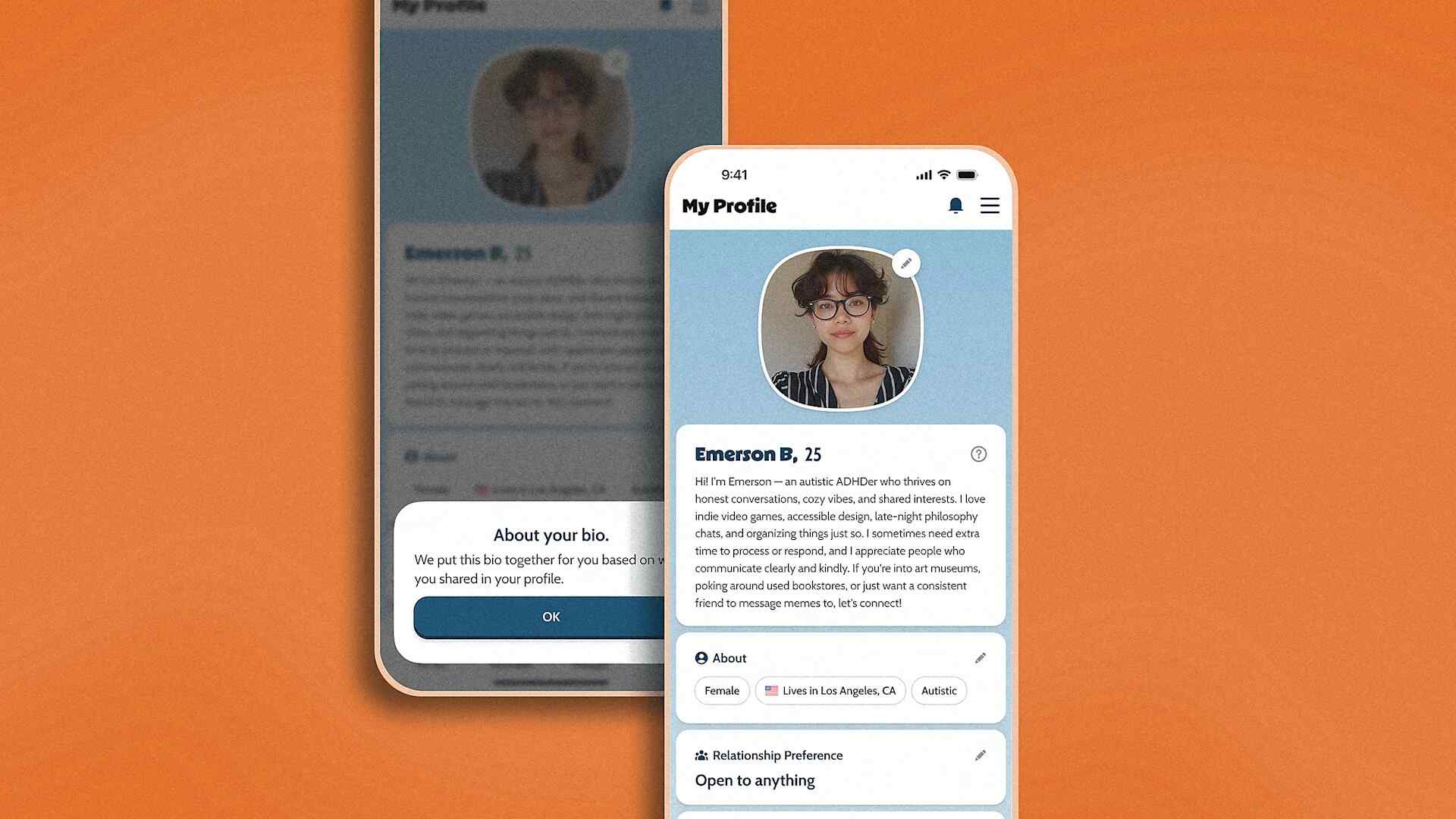

Despite the risk, a 2022 survey found that 64% of companies use some level of AI to screen applicant résumés.

These companies are walking a fine line. While AI may make certain tasks and processes easier and faster, using it for recruitment and screening can exacerbate trends already present in the company, such as favoring men in an already male-dominated field.

A Bloomberg investigation found that ChatGPT exhibited racial bias when asked to rank candidates by qualification based on their résumés. Despite having the same work histories and experiences, candidates with names associated with Black men and women were regularly ranked lower than candidates with white-sounding, Hispanic, and Asian names. In this way, AI has a serious race problem, and HR teams that turn authority over to AI are creating broad-spectrum risk.

HOW HR CAN MORE SAFELY USE AI

That’s not to say HR departments shouldn’t ever use AI. By reversing AI’s potential bias, and screening in—rather than screening out—qualified individuals who might otherwise face barriers, companies can foster breakthrough opportunities for candidates.

I believe AI can drive efficiency in ways that create less risk for companies while enhancing recruiter productivity and candidate engagement. For example, generative AI can help draft job descriptions or work at scale to create personalized messages that get applicants excited about an opportunity. In such practical use cases, the output of the AI is controlled, reviewed, and edited by a human.

The key for HR professionals is to find this balance. Yes, AI can certainly help streamline hiring pipelines and make certain processes more effective, but ceding control to artificial intelligence, especially when you’re not sure how an AI system works or what data it’s looking at, can be dangerous, not only for candidates but also for companies.

This is why transparency is paramount. Humans must be able to intervene when AI makes a mistake. Meanwhile, candidates may soon have legal rights regarding the use of AI. New York City recently passed the first AI hiring law in the U.S., which mandates that companies disclose their use of AI in hiring practices. Employers also must audit the AI they choose to leverage.
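For teams wondering what such an audit might look like in practice, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the method prescribed by any law or used in any investigation cited here: it compares how often an AI screen advances candidates from each group and flags any group whose rate falls well below that of the most-selected group. The function names, the sample data, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb commonly used in U.S. adverse impact analysis) are all assumptions made for the example.

```python
from collections import Counter

def selection_rates(outcomes):
    """Return {group: share of candidates selected}.

    `outcomes` is an iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio is below the threshold.

    The 0.8 default is an assumed rule of thumb, not a legal standard
    taken from this article.
    """
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical screening results: 10 candidates per group.
sample = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
```

With this made-up data, group_b is advanced at 60% of group_a's rate and would be flagged for human review. A flag like this is a prompt to investigate, not proof of bias, and a real audit would need larger samples and legal guidance.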

HR professionals who struggle to understand how to integrate AI into their practice should focus on moderation. Instead of viewing AI as a choice between two extremes, keeping the status quo or entirely replacing the role of humans, teams can look for AI tools that improve efficiency incrementally while still keeping humans fully engaged. Practical, thoughtful, and responsible AI adoption can create a more engaging candidate experience while accelerating HR practitioner workflows.

In an era of “doing more with less,” creating a more productive workforce will depend on new solutions. I believe AI is the ticket—but it must be used responsibly.