
ChatGPT can leak sensitive data. How do companies protect customer data?

With the right mentality, education, and technology, businesses can create a safe environment, say experts.

Last year, when ChatGPT took the world by storm, many flooded OpenAI’s website to test the generative AI. Tech nerds used it to measure our distance from the singularity; students used it to write essays, and teachers fretted. And companies kicked off a frantic race to integrate ChatGPT.

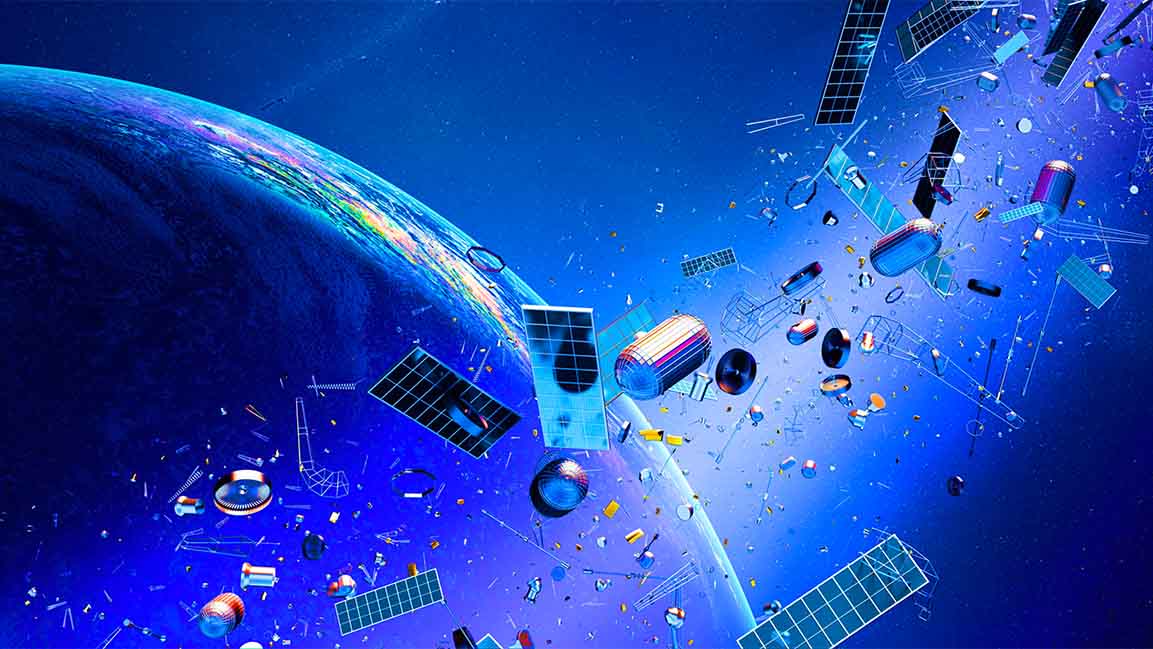

But pushing those models out into the wild led to security lapses and data leaks. And the leaks are what companies consider most damaging: they often result in multimillion-dollar lawsuits or in rivals discovering trade secrets.

In March, OpenAI confirmed that a bug in the chatbot’s source code caused a data leak: chat history, names, email addresses, payment addresses, and the last four digits of credit card numbers were exposed to other users.

Apple restricted employees from using ChatGPT over fears of data leaks. And Samsung banned ChatGPT from its offices after employees uploaded proprietary source code to the public platform.

Apple and Samsung are far from the only companies instituting such a ban. Others include JP Morgan, Verizon, and Amazon.

FACILITATE THE CREATION OF MALICIOUS CODE

“ChatGPT can gather and keep whatever data a user enters, and security issues emerge. Giving access to confidential company information to ChatGPT poses a potential danger of information leakage, privacy infringement, and hacking that could jeopardize the company’s legal standing,” says Maher Yamout, Senior Security Researcher, Global Research & Analysis Team at Kaspersky.

While ChatGPT itself is not dangerous, in the wrong hands it can facilitate the creation of malicious code and of phishing emails that, at first glance, appear well-worded.

“During the introduction of the textbot, the Internet was flooded with examples of ‘malware’ written by the platform; it has since been severely limited if not completely blocked,” says Martin Holste, CTO, Cloud at Trellix.

“A well-experienced attacker understands the footprint and capabilities of his victim, which is not the case with ChatGPT. In several test cases, the malware created by ChatGPT was not functional or was immediately detected by the security solutions such as Trellix, which shows that it lacks uniqueness and creativity, which are necessary for today’s evolving threat landscape,” he adds.

Since ChatGPT is good at writing code snippets, it allows an attacker to code exploits more quickly or in a coding language with which the attacker is not proficient. “ChatGPT is also good at summarizing complex schemata, which attackers can use to analyze configurations to move laterally inside an environment,” says Holste.

As ChatGPT and generative AI grow in popularity in the Middle East, the big question is: Will they be safe for businesses?

“Yes, provided we are doing all the right things to protect sensitive data. It is imperative that organizations actively manage the use of ChatGPT and other generative AI tools,” says Steve Foster, Head of Solutions Engineering, MEA at Netskope.

Threat actors are weaponizing the interest in ChatGPT with malicious browser extensions that masquerade as ChatGPT extensions and crop up faster than they can be taken down.

If you have searched for ChatGPT browser extensions, you will have been hit with lists of “the most useful ChatGPT extensions you have never heard of” or YouTube videos promising to show you which extensions will change your life.

“There’s an increase in plug-ins and software extensions that connect with ChatGPT to leverage the power of AI for many use cases. In the same way that a computer is infected with malware from a phishing website, a breach of privacy can pose a serious threat to the unattended use of add-ons and extensions,” says Yamout.

While ChatGPT’s application programming interfaces (APIs) make it easy for developers to build plug-ins and browser extensions that enhance the browsing experience by augmenting or altering interactions with the web, the extension ecosystem is becoming a “wild west.”

“In essence, the rights of the extension – found in the small print – can expose the user and the user’s organization to browser and device manipulation and data ownership issues. For example, some extensions can access your camera and microphone, while others claim rights over any data inputted within the instructional request,” says Foster.

Focusing on whether these extensions can be taken down as fast as they crop up misses the point. Instead, Foster says, organizations should set up security policies that place general restrictions on which AI-based apps can be accessed, in what way, and using what data. “AI apps are no different to any other cloud app in this regard – if you focus on protecting your data from exfiltration and infrastructure from infiltration, it can prove to secure against all comers,” explains Foster.
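To make Foster's point concrete, the kind of policy he describes (which apps, in what way, with what data) could be sketched roughly as follows. This is an illustrative sketch only, not any vendor's product: the host names, action names, data labels, and the `is_allowed` helper are all assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppPolicy:
    """What users may do with one AI app, and with which classes of data."""
    allowed_actions: frozenset
    allowed_data_labels: frozenset


# Hypothetical policy table; hosts, actions, and labels are assumptions.
POLICIES = {
    "chat.openai.com": AppPolicy(
        allowed_actions=frozenset({"view", "prompt"}),
        allowed_data_labels=frozenset({"public"}),
    ),
    "internal-llm.example.com": AppPolicy(
        allowed_actions=frozenset({"view", "prompt", "upload"}),
        allowed_data_labels=frozenset({"public", "internal"}),
    ),
}


def is_allowed(app_host: str, action: str, data_label: str) -> bool:
    """Return True only if the app, the action, and the data class are all permitted."""
    policy = POLICIES.get(app_host)
    if policy is None:
        # Default deny: an AI app nobody has reviewed is blocked.
        return False
    return (
        action in policy.allowed_actions
        and data_label in policy.allowed_data_labels
    )
```

The default-deny branch is the design choice that matters here: a newly published extension or app is blocked until someone reviews it, so the organization does not have to keep pace with takedowns.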

COUNTERMEASURES FOR ORGANIZATIONS

With the increasing use of ChatGPT, organizations can take measures to safeguard the security of their sensitive data. And this is possible with technologies already in use within organizations today, so AI shouldn’t seem like a fresh challenge.

“Firstly, deploy tech on endpoints that can control what data is put into ChatGPT; get visibility into all important business applications and infrastructure and this can be done by collecting activity and audit logs from devices and SaaS applications and making it useful to security staff and AI, and give your security staff tools that can leverage AI for generating remediation actions,” says Holste.
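As a rough illustration of the first two measures Holste lists, an endpoint control might scrub obviously sensitive strings from a prompt before it leaves the device and emit an audit record for security staff. The sketch below is a simplified assumption, not how Trellix or any other product works: the regular expressions, the `sk-` key format, and the `redact_prompt` and `audit_record` helpers are all hypothetical, and real data loss prevention tools use far more robust detection.

```python
import json
import re

# Hypothetical detection patterns; deliberately simple for illustration.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
}


def redact_prompt(prompt: str):
    """Replace sensitive matches with placeholders; return the text and match counts."""
    findings = {}
    redacted = prompt
    for label, pattern in PATTERNS.items():
        redacted, count = pattern.subn(f"[REDACTED_{label.upper()}]", redacted)
        if count:
            findings[label] = count
    return redacted, findings


def audit_record(user: str, app: str, findings: dict) -> str:
    """Serialize one audit log entry as JSON for a log collector to ingest."""
    return json.dumps({"user": user, "app": app, "findings": findings}, sort_keys=True)


if __name__ == "__main__":
    text = "Contact jane@example.com about card 4111 1111 1111 1111"
    safe_text, found = redact_prompt(text)
    print(safe_text)
    print(audit_record("user-123", "chat.openai.com", found))
```

The audit record stores only counts per category, not the matched values, so the log itself does not become another copy of the sensitive data.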

According to Foster, security teams need visibility, using automated tools that continuously monitor what applications users attempt to access and in what way. “Organizations can use this visibility to understand not just a potentially risky activity but also positive or innovative use cases they may be able to build on securely.”

“With the right mentality, education, and technology, organizations can create a safe environment for users to take advantage of the many benefits of these generative AI applications,” adds Foster.

CAN REGULATION CONTROL MISUSE?

Imposing new laws and regulations on SaaS applications is an approach fraught with challenges, as most nations are reluctant to hastily block technology wholesale, and consumers are well versed in the methods for working around national borders when accessing internet services.

“We are more likely to see existing data protection laws explicitly applied to ChatGPT and other generative AI applications, reiterating the need for these new applications to conform to the GDPR and KSA’s PDPL,” says Foster.

And while regulation can, in theory, help control misuse of tech like ChatGPT and effectively govern what businesses can do with LLM technology, malicious actors are not bound by any of it.

“Most misuses are already covered under existing regulation and libel laws, and we will need to see more explicit regulation around AI misuse. But the bad guys will not play by the rules, so regulation will not affect AI-enhanced cyber-crime,” says Holste.

Although we are unlikely to see many states specifically regulating ChatGPT, it doesn’t mean there isn’t room for better policies enacted by individual organizations. Foster says, “The compliance requirements of existing data protection legislation and regulations will motivate many organizations to act responsibly in applying robust policies and controls.”

ChatGPT has become the fastest-growing consumer application in history, with more than 100 million monthly active users. As discussions about potential accountability mechanisms and regulatory measures for it gain momentum, especially regarding its impact on security, experts warn that it is crucial to find the appropriate balance between free use and regulated use.

“Regulating technology too strictly might impede innovation and keep it from developing to its full potential. On the other hand, a lack of regulation might result in technological misuse,” says Yamout.