
These 3 companies show why the next AI wave won’t revolve around chatbots

Companies like Adept and Navan are racing to launch smart assistants, agents, and visual systems that are even more powerful than classic chatbots.

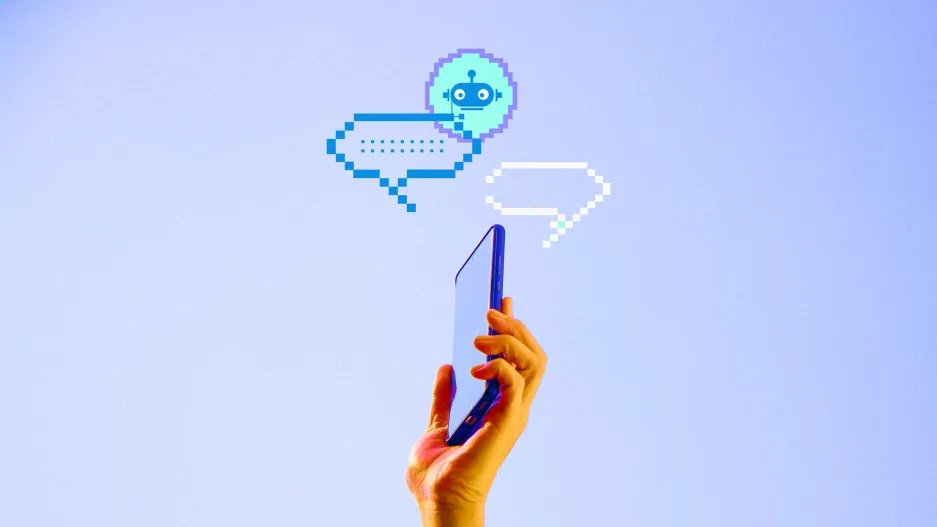

For all the considerable (and, yes, over-the-top) attention that artificial intelligence has received since ChatGPT first launched in 2022, we still mostly interact with this new form of computing in an old-school way: We type, the chatbot answers.

Still, AI chatbots have become so immensely popular—used by something like one-third of Americans, per one survey last summer—that there’s already talk of a bot bubble.

But chatbots may not be the future—and may not even be the AI form with which most of us interact. The AI industry is racing to launch a new round of agents, assistants, and multimodal systems that sit in the background of your daily life and don’t rely on a manual, often frustrating back-and-forth with a bot. The next wave of AI—which both does more and knows more about you than its chatbot forebears—will arguably be less visible in our lives and less eerily human-seeming than a chatbot.

That isn’t to say chatbots will disappear. Chatbots are like a floor for AI’s usefulness, says Amelia Wattenberger, principal research engineer on the GitHub Next team, who wrote a recent essay making the case against chatbots. For most tasks chatbots are just not useful enough, she adds. “They’re generalists not specialists.”

But for assistants or agents to take off—let alone take over tasks for us—we’ll need new ways of imagining and designing software, and a new way of thinking about how it fits into our life. (No one wants an assistant that’s always bugging you.) “Interfaces are going to change very drastically,” says Wattenberger, who’s worked on prototyping new ways of adding AI to web applications. “Designing for them isn’t going to involve existing paradigms.”

Here are three ways the AI world is evolving beyond bots.

AN AI FOR DIGITAL DRUDGERY

Forget artificial general intelligence. If you talk to enough people in the AI world, they’ll tell you that agents are the technology’s next big test. Adept, which last year announced a $350 million Series B round, is building an AI agent with a lofty goal: software that can do anything a human can do on a computer.

Turn on Adept—it can function as a browser extension—and its multimodal AI model will monitor pixels on your computer as you navigate between various software programs. Crucially, it’ll learn from your behavior at work—the specific way you pay invoices, or how you create a sales lead, for example—and can then mimic those tasks automatically. Call it software for your software. Or as Basil Safwat, Adept’s head of design, puts it, “a tool for tools.” (In November, Adept launched a demo version of its tools, and expects to launch more agent products this year.)

Adept has spent months observing the behavior of enterprise software users with the hope of helping to move things just a little bit faster. Many of us could use the help—the average office worker uses 11 different software applications, per Gartner. “When you actually analyze what you need to do to create a spreadsheet or set up a meeting, it’s actually just a ton of clicking,” says Safwat.

Adept users can also customize “workflows” of everyday tasks, a key function given that our computer usage is still highly individualized. For Safwat, that means building an AI agent that can both sit in the background as you work and take the wheel when asked. Safwat’s team has designed Adept to balance generative AI flourishes with more traditional, deterministic software interfaces. He believes AI is moving us toward a more open world of software, where interfaces morph depending on a user’s needs. “One of the company’s core principles is augmentation over automation,” Safwat says. “If we’re doing it right, it should feel like you had an extra super power you didn’t have before.”

AN AI THAT DOES A C-SUITE JOB

In February of 2023, just months after ChatGPT’s public debut, the corporate travel and expense management company Navan launched a chatbot named Ava to help customers book travel, get restaurant recommendations, and determine a trip’s carbon footprint. But Ilan Twig, Navan’s chief technology officer and co-founder, knew the company couldn’t stop there. In his view, AI was progressing far too fast.

He started playing around with the idea of combining two large language models (LLMs), one to gather travel data and the other to analyze company spending. What if those two LLMs had a kind of conversation? And what if he instructed one of those LLMs to act as if it were a CFO who needed to prepare a board presentation on how to save money on corporate travel?

That prompt generated shockingly good analysis. “The level of questions that it asked blew me away,” says Twig.

By May of 2023, Navan had added the ability for Ava to analyze a company’s travel spending and proactively suggest ways to save millions. This went beyond a chatbot; it was a system that could, in minutes, produce the kinds of reports and charts that used to take hours. Twig had come up with a way of doing part of a CFO’s job.

That intimate look at a company’s data is very much a selling point for Navan, which is rumored to be considering an IPO this year. The company says it does not train its models on proprietary customer data; instead, it uses travel agent information and public databases to refine its AI system. But Navan has a unique window into corporate spending and travel industry data that Twig believes will give it an edge. “In the next few years, a product’s moat, or its level of complexity, will directly correlate to the unique data it possesses,” Twig says.

Beyond chatbots, Twig imagines Navan developing AI interfaces that change, or even disappear, based on a corporate traveler’s location or needs, maybe even before they know them. “My goal as the CTO is to keep exploring and keep pushing the boundaries of these technologies,” says Twig.

AN AI THAT SEES THE WORLD

In January of 2023, Mike Buckley cold-called OpenAI in the hope that it could help his users. By his own admission, Buckley, the CEO of Be My Eyes, an app that helps the visually impaired navigate the world, didn’t know anything about AI. And he wasn’t expecting the biggest player in AI to get back to him.

“They not only took the call,” Buckley says, “but they said ‘Hey Mike, can you keep a secret? We’re going to launch a visual interpretation model. Would you want to partner with us?’”

That partnership resulted in “Be My AI,” a service that launched in closed beta in August and that both Buckley and his users rave about. Users can upload pictures of their world and ChatGPT will analyze them. Then a voice describes what’s in the picture. (It can describe a painting in a museum or read a menu, for example.) In one demo, a blind woman used Be My AI to find which treadmills were open at a gym. After she uploaded a series of pictures, an AI voice guided her to an open treadmill.

The feedback Buckley got from his community was shocking. “I hate hyperbole,” he says, “but when you talk to people who are blind, they use phrases like life-changing and transformative. They say ‘I have my independence back.’”

Be My Eyes launched in 2015 as a free mobile app that allowed blind and low-vision people to navigate the world. Users connect their phone camera to a live chat and a volunteer helps them do things like read a birthday card or a grocery list. The company, Buckley says, was “a merger of technology and human kindness.” It now has some 600,000 users and 7.2 million volunteers, and operates in 150 countries.

Buckley was convinced AI could help even more visually impaired people. During the early phase of their partnership, Buckley says his company supplied OpenAI with 19,000 beta testers who gave the company something it couldn’t get elsewhere: real feedback from the blind and low-vision community. Hallucinations happened, particularly in reading buttons, Buckley says, but they’ve largely been resolved since then.

In September, OpenAI announced expanded image-analysis capabilities. It’s one of a group of big AI companies that are racing to improve their image analysis features, which in time may prove to be more impactful than image generation. Buckley says he was happy to help OpenAI train its model and credits the company for making blind and low-vision people central to the design process of Be My Eyes.

There are now over 1.1 million Be My AI sessions per month, Buckley says, and the company is seeing strong growth in Brazil, India, Pakistan, Nigeria, and Morocco.

But for Buckley, the real promise of AI will be in the way that it might someday connect directly to the real world in something like real time. At a minimum, that would transform the lives of millions of visually impaired people, he says. “Think about the next iteration of this: interpreting live video,” he adds.

In this version of the industry’s future, whether in apps like Be My AI or in the nascent wearables category, AI would act as an interpretive layer for the world. And it would put chatbots out of a job.