
Can AI select better leaders? It’s complicated

Algorithms have the potential to do what humans cannot. But we must proceed with caution.

Leadership matters. Everything humanity has ever accomplished is the result of people working together, which is only possible if someone is in charge of coordinating collective human activity.

Throughout our evolutionary history, it was relatively straightforward to pick the right leaders. For example, our hunter-gatherer ancestors lived in small groups, where everybody knew each other very well. Leadership talent was simply determined by a relatively small set of observable skills, such as physical strength, speed, dexterity, and courage. And while integrity mattered as much as today, it was far harder to fake. If you were a dishonest crook, everybody would know, so the chances of your leading a group were extremely slim.

Fast forward to the present, and the following challenge arises: Although leadership abilities are far more varied, complex, and hard to observe, we still play it by ear. We evolved to rely on our instincts and to decide whether we can trust someone based on gut feeling and observable behaviors.

Now that leadership potential encompasses a wide range of non-observable intellectual skills, such as curiosity, empathy, emotional intelligence, and learning ability, not to mention advanced technical expertise, we must rely on tools and data to measure people’s leadership potential.

This is where algorithms have tremendous potential. Fueled with large datasets on what leaders have done and achieved in the past, they can help us make better inferences about candidates’ potential. If this sounds too vague or opaque, think of it this way: Algorithms are essentially recipes, which are partly created by computers.

Imagine we have a dataset comprising 1,000 leaders, who are ranked or rated according to their actual performance. Imagine that we also have tons of data on who they are, what their values, skills, and personalities are, as well as hundreds of other variables. Artificial intelligence (AI) can be used to learn why some leaders are more effective than others, and in turn create algorithms that reverse-engineer the formula for competent leadership.
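To make the idea of "reverse-engineering the formula" a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the 1,000 leaders are synthetic, the three traits (`curiosity`, `empathy`, `height`) are hypothetical, and the performance rating is deliberately generated from the first two traits only. The code then measures how strongly each trait correlates with the rating, which is the crudest possible version of learning what separates more effective leaders from less effective ones.

```python
import math
import random

# Hypothetical trait names; a real dataset would have hundreds of variables.
TRAITS = ["curiosity", "empathy", "height"]


def make_synthetic_leaders(n=1000, seed=0):
    """Build a toy dataset. The data is invented, not real leader data."""
    rng = random.Random(seed)
    leaders = []
    for _ in range(n):
        traits = {name: rng.gauss(0, 1) for name in TRAITS}
        # Assumption: performance depends on curiosity and empathy, not height.
        performance = (0.6 * traits["curiosity"]
                       + 0.4 * traits["empathy"]
                       + rng.gauss(0, 0.5))
        leaders.append((traits, performance))
    return leaders


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def trait_correlations(leaders):
    """Correlate every trait with the performance rating."""
    ratings = [performance for _, performance in leaders]
    return {
        name: pearson([traits[name] for traits, _ in leaders], ratings)
        for name in TRAITS
    }


leaders = make_synthetic_leaders()
correlations = trait_correlations(leaders)
for name, r in sorted(correlations.items(), key=lambda kv: -kv[1]):
    print(f"{name:10s} r = {r:+.2f}")
```

In this toy setup the irrelevant trait ends up with a correlation near zero, while the two traits that drive the rating stand out. Real systems use far richer models, but the logic is similar: the "formula" is only as good as the ratings it is learned from.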

The next step is to evaluate whether candidates resemble successful leaders, which is no different from how Netflix or Spotify suggests new movies or songs based on whether they resemble our past choices.
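The resemblance step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each person is reduced to a short list of hypothetical trait scores, the "profile" of success is simply the average of a handful of invented successful leaders, and resemblance is measured with cosine similarity, one of several measures a recommender might use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two trait vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def centroid(vectors):
    """Average trait vector of a group of people."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Invented scores for three hypothetical traits, e.g. curiosity, empathy, caution.
successful_leaders = [
    [0.9, 0.8, 0.2],
    [0.8, 0.9, 0.3],
    [1.0, 0.7, 0.1],
]
profile = centroid(successful_leaders)

# Hypothetical candidates described with the same three traits.
candidates = {
    "candidate_a": [0.9, 0.8, 0.2],
    "candidate_b": [0.1, 0.2, 0.9],
}

scores = {name: cosine_similarity(vector, profile)
          for name, vector in candidates.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

The candidate whose trait pattern points in the same direction as the average successful leader is ranked first, just as a song that resembles your listening history is suggested first.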

However, there are important differences between the two that ought to be acknowledged in order to avoid inaccuracies and unfairness.

HOW RELIABLE IS THE HISTORICAL DATA ON LEADERS’ PERFORMANCE?

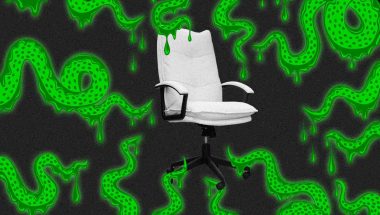

Since leaders’ performance is rarely evaluated objectively, there is always the risk that their evaluations are contaminated with subjective opinions, including prejudice and politics. It is one thing to have humans teach algorithms that an object on the road is a tree or traffic light, and another to teach them that someone is a good or bad leader.

And yet, algorithms will treat any classification as fact or truth, which risks not just replicating, but also augmenting biases. For example, if people are more likely to be designated as high-performing leaders when they are male, White, and middle-aged, algorithms will recommend that middle-aged White males be selected and promoted into leadership roles. But will this increase or decrease the performance of the organization?
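One simple check, before any algorithm is trained, is to look at how the historical "high performer" label is distributed across groups. The Python sketch below assumes an invented set of records with two hypothetical groups, `group_a` and `group_b`; it computes the share of each group that received the label and the ratio between the lowest and highest share. A ratio far below 1 does not prove bias on its own, but it is a signal that a model trained on these labels would likely reproduce the gap.

```python
from collections import defaultdict


def label_rates(records):
    """Share of people in each group who were labeled as high performers."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, is_high_performer in records:
        totals[group] += 1
        positives[group] += int(is_high_performer)
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    return min(rates.values()) / max(rates.values())


# Invented historical evaluations: (group, labeled high performer?).
historical_labels = (
    [("group_a", True)] * 30 + [("group_a", False)] * 20
    + [("group_b", True)] * 10 + [("group_b", False)] * 40
)

rates = label_rates(historical_labels)
ratio = rate_ratio(rates)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} labeled high performing")
print(f"ratio of lowest to highest rate: {ratio:.2f}")
```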

HOW WOULD THE ALGORITHM IMPACT DIVERSITY AND INCLUSION INITIATIVES?

If we train algorithms to predict someone’s likelihood of becoming a leader, they will give us more of what we have, and optimize for “sameness” rather than diversity. This is true of most selection tools. If there is a formula for “what good looks like,” it is logical that selected candidates become more homogeneous.

This issue can be addressed by focusing on teams rather than individuals. Great teams are always a mix of people who differ both intellectually and from a mindset perspective, just as they differ in expertise or hard skills. In this way, algorithms could be trained to detect existing gaps in a team, and hire for diversity.
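As a rough sketch of what "detecting gaps in a team" could look like, the Python example below treats each person as a set of attributes and looks for the candidate who covers the most attributes the team currently lacks. The attribute names, team members, and candidates are all hypothetical, and real differences in mindset are much harder to capture than a set of labels suggests.

```python
def team_gaps(team, required):
    """Attributes the team needs but that no current member brings."""
    covered = set().union(*team.values())
    return required - covered


def best_gap_filler(candidates, gaps):
    """Candidate who covers the largest number of missing attributes."""
    return max(candidates, key=lambda name: len(candidates[name] & gaps))


# Hypothetical attributes the team is supposed to cover.
required = {"strategy", "finance", "design", "data"}

# Hypothetical current team members and what each brings.
team = {
    "ana": {"strategy", "data"},
    "ben": {"strategy"},
}

# Hypothetical candidates.
candidates = {
    "cara": {"strategy", "data"},   # resembles the existing team
    "dev": {"finance", "design"},   # covers both gaps
    "eli": {"finance", "strategy"}, # covers one gap
}

gaps = team_gaps(team, required)
choice = best_gap_filler(candidates, gaps)
print(sorted(gaps), choice)
```

Note the design choice: the candidate who most resembles the current team scores lowest here, which is the opposite of what a pure "resemble past leaders" formula would reward.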

EVEN IF WE CAN PREDICT FUTURE LEADERSHIP PERFORMANCE, CAN WE ALSO EXPLAIN IT?

One of the key foundations for ethical AI and algorithmic responsibility is to understand why a prediction occurs. It is not sufficient to automate decisions, even if they increase the accuracy or success rate vis-à-vis human decisions. It is also necessary to explain why an algorithm selected someone (or not).
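For the simplest kind of model, a weighted sum of traits, explaining a prediction is straightforward: each trait's contribution is its weight multiplied by the candidate's score. The Python sketch below assumes hypothetical weights and an invented candidate; more complex models need dedicated explanation techniques, so this should be read as an illustration of the principle rather than a general solution.

```python
# Hypothetical weights, as if learned from past leaders' performance data.
WEIGHTS = {"curiosity": 0.5, "empathy": 0.3, "technical_expertise": 0.2}


def explain_score(candidate):
    """Return the total score and each trait's contribution to it."""
    contributions = {
        trait: WEIGHTS[trait] * candidate[trait] for trait in WEIGHTS
    }
    return sum(contributions.values()), contributions


# Invented candidate with trait scores between 0 and 1.
candidate = {"curiosity": 0.8, "empathy": 0.4, "technical_expertise": 0.9}

total, contributions = explain_score(candidate)
print(f"score = {total:.2f}")
for trait, value in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"  {trait}: {value:+.2f}")
```

A breakdown like this can be shown to a rejected candidate or an auditor, which is something a hiring manager's gut feeling cannot offer.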

This is actually one of the big advantages that computer algorithms could have over humans. When humans make decisions, it is never quite possible to reverse engineer them, to understand the why. No matter what people tell you, we never know why they liked or disliked a candidate. The main reason is that humans lie to themselves all the time. For example, nobody wants to think of themselves as racist or sexist, so people will go to great lengths to explain that their views on someone were not based on their race or sex.

WHAT IS THE BEST WAY TO LEVERAGE THE COMBINED POWER OF COMPUTER ALGORITHMS AND HUMAN EXPERTISE?

Until algorithms achieve near-perfect degrees of accuracy (and explainability), it is wise to keep humans in the loop. It is likely that in the near future we will continue to see what we see today, namely humans making better decisions when supported by data-driven algorithms, and vice versa. Algorithms will be more valuable if they can be augmented by human expertise.

In a way, this has always been the case. Experts can make intuitive decisions because their expertise and experience have made their intuition data-driven. This also puts pressure on humans to add value beyond what AI and algorithms can do. For instance, when algorithms are able to detect 80% of the qualities that make leaders more effective, the remaining 20%, which may focus on specific cultural or contextual matters, as well as what key stakeholders want and need, may be the remit of humans.

DO WE HAVE A PLAN TO TEST WHETHER ALGORITHMS HAVE IMPROVED THINGS?

All innovation is hit-and-miss. If you know it’s going to work, then it’s not innovation. The development of algorithms that improve our decision-making is no exception. The key to this development is not to get it right, but to find better ways of being wrong in order to improve the status quo. This requires an experimental mindset, as well as a great deal of curiosity, humility, and the integrity to accept when things have gone wrong and to retract algorithms when they aren’t working.
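An experimental mindset can be as simple as comparing outcomes for leaders chosen with and without algorithmic support and asking whether the difference could be due to chance. The Python sketch below uses a one-sided permutation test for that purpose. The two lists of performance ratings are invented, the group names are hypothetical, and a real evaluation would also need comparable roles, larger samples, and ratings that are themselves trustworthy.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(control, treatment, n_permutations=5000, seed=0):
    """One-sided permutation test for mean(treatment) > mean(control).

    Returns the observed difference in means and the share of random
    relabelings that produce a difference at least as large.
    """
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(control) + list(treatment)
    n_control = len(control)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[n_control:]) - mean(pooled[:n_control])
        if diff >= observed:
            at_least_as_large += 1
    return observed, at_least_as_large / n_permutations


# Invented later performance ratings of leaders selected each way.
human_only = [3.1, 2.8, 3.4, 3.0, 2.9, 3.2, 3.3, 2.7]
algorithm_assisted = [3.3, 3.0, 3.6, 3.1, 3.4, 3.2, 3.5, 2.9]

observed, p_value = permutation_test(human_only, algorithm_assisted)
print(f"observed improvement: {observed:+.2f}")
print(f"share of shuffles at least as large: {p_value:.3f}")
```

If a large share of random shuffles produces an improvement as big as the observed one, the honest conclusion is that the algorithm has not yet been shown to help.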

Ultimately, algorithms are a human invention. They are the product of our ingenuity, and another example of our ability to create tools that let us do more with less and improve an area of life. Since selecting the right leaders is a fundamental requirement for creating better teams, organizations, and societies, one would hope that we can be open-minded enough to experiment in this area, not least in light of our dismal record for selecting and electing leaders.