
Google is teaching robots to think for themselves

Giving a robotic assistant a broad-based understanding of how to be helpful at home or work isn’t easy. But Google researchers are making progress.

I’m standing in a kitchenette at a Google office in Mountain View, California, observing a robot at work. It’s staring at items on a counter: bubbly water, a bag of whole-grain chips, an energy drink, a protein bar. After what seems like forever, it extends its arm, grabs the chips, rolls a few yards away, and drops the bag in front of a Google employee. “I am done,” it declares.

This snack delivery, which the bot performed during a recent press briefing, might not seem like a particularly amazing feat of robotics, but it’s an example of the progress Google is making in teaching robots how to be helpful—not by programming them to perform a set of well-defined tasks, but by giving them a broader understanding of what humans might ask for and how to respond. That’s a far more demanding AI challenge than a smartphone assistant such as the Google Assistant responding to a limited, carefully worded set of commands.

The robot in question has a tubular white body, a grasping mechanism on the end of its single arm, and wheels. The fact that it’s got cameras where we have eyes gives it a certain anthropomorphism, but mostly, it seems engineered for practical functionality. It was created by Everyday Robots, a unit of Google’s parent company Alphabet. Google has been collaborating with its robot-centric corporate sibling on the software side of the challenge of making robots useful. This research is still early and experimental; along with tasks such as finding and fetching items, it also includes training bots to play ping-pong and catch racquetballs.

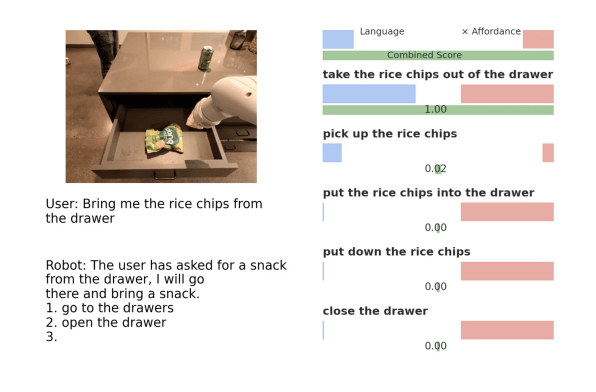

And now Google is sharing news about its latest milestone in robot software research, a new language model called PaLM-SayCan. (The “PaLM” stands for “Pathways Language Model.”) Developed in collaboration with Everyday Robots, this software provides the company’s robots with a broader understanding of the world that helps them respond to human requests such as, “Bring me a snack and something to wash it down with” and, “I spilled my drink, can you help?” That requires understanding spoken or typed statements, teasing out the ultimate goal and breaking it into steps, and accomplishing them using whatever skills a particular robot might have.
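For readers who think in code, here is a toy Python sketch of one way a robot might pick its next step. It is not Google's implementation, and the article does not describe PaLM-SayCan's internals. The sketch assumes the idea suggested by the name: weigh what a language model "says" would be useful against what the robot "can" do right now. The function name `choose_next_skill`, the skill names, and every score are hypothetical.

```python
# Toy sketch only: the skills and scores below are made-up illustrations,
# not output from PaLM-SayCan or any real robot.

def choose_next_skill(relevance, feasibility):
    """Pick the skill with the highest combined score.

    relevance:   how useful each skill looks for the request ("say").
    feasibility: how likely the robot is to succeed at it right now ("can").
    """
    combined = {skill: relevance[skill] * feasibility[skill] for skill in relevance}
    return max(combined, key=combined.get)


# Hypothetical scores for the request "Bring me a snack".
relevance = {"find chips": 0.6, "find sponge": 0.1, "pick up chips": 0.3}
# Picking up the chips scores low because the robot has not located them yet.
feasibility = {"find chips": 0.9, "find sponge": 0.9, "pick up chips": 0.1}

next_skill = choose_next_skill(relevance, feasibility)
print(next_skill)  # find chips
```

Repeating this choice after each completed step, with updated scores, would yield a step-by-step plan; how the real system computes its scores is beyond what the sketch assumes.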

According to Google, its current PaLM-SayCan research marks the first time that robots have had access to a large-scale language model. Compared to previous software, the company says, PaLM-SayCan makes robots 14% better at planning jobs and 13% better at successfully completing them. Google has also seen a 26% improvement in robots’ ability to plan tasks involving eight or more steps, such as responding to “I left out a soda, an apple, and water. Can you throw them away and then bring me a sponge to wipe the table?”

NOT COMING SOON TO A HOME NEAR YOU

Though Everyday Robots’s bots have been performing useful work such as sorting trash at Google offices for a while now, the whole effort is still about learning how to teach the bots to teach themselves. In the demos we saw at the recent press briefing, the robot performed its snack-retrieval duties so slowly and methodically that you could practically see the wheels whirring in its head as it figured out the job step by step. As for the ping-pong and racquetball research, it’s not that Google sees a market for athletic robots, but these activities require both speed and precision, making them good proxies for all kinds of actions that robots will need to learn how to handle.

Google’s emphasis on robotic ambition over getting something on the market right away stands in contrast to the strategy followed by Amazon, which is already selling Astro, a $999 home robot, on an invite-only basis. In its current form, Astro only does a few things and doesn’t amount to that much more than an Alexa/security camera gadget on wheels; when my colleague Jared Newman tried one at home, he struggled to find uses for it.

Google Research robotics lead Vincent Vanhoucke told me that the company isn’t yet at the point where it’s trying to develop a robot for commercial release. “Google tries to be a company that focuses on providing access to information, helping people task in their daily lives,” he says. “You could imagine a ton of overlap between Google’s overarching mission and what we’re doing in terms of more concrete goals. I think we’re really at the level of providing capabilities, and trying to understand what capabilities we can provide. It’s still a quest of ‘what are the things that the robot can do? And can we broaden our imagination about what’s possible?’”

In other words, don’t assume you’ll be able to buy a Google robot anytime soon—but stay tuned.