
Inside the good, bad, and very ugly of social media algorithms

There’s a lot to unpack with the current state of social media algorithms. Fast Company’s podcast Creative Conversation explored this topic in three episodes.

It feels strange to think back to a time when we weren’t so concerned with social media algorithms.

To the layperson, algorithms were just a nebulous mix of code that we knew controlled what we were seeing in our feeds, but most of us weren’t bothered by it. We accepted the idea that they were a good thing serving us more of what we love. That’s true—to a certain point.

The 2016 presidential election and the fallout from the Cambridge Analytica scandal marked a tipping point in how we think about what pops up in our feeds, as well as about the offline ramifications of social media echo chambers. Those blinkered content streams only intensified in 2016, as Instagram and Twitter took a cue from Facebook’s News Feed and switched to algorithmically ranked feeds as well.

Since then, social media algorithms have been under increasing scrutiny.

Ex-Google engineer Guillaume Chaslot criticized YouTube’s recommendation engine for promoting conspiracy theories and divisive content. The documentary The Social Dilemma was a buzzy exposé of how these platforms manipulate human behavior. TikTok’s enigmatic For You page was called out for suppressing videos from disabled creators in a bid to mitigate bullying and harassment (a policy the company says is no longer in place). Most recently, former Facebook data scientist turned whistleblower Frances Haugen released thousands of internal documents showing what Facebook and Instagram executives knew about the potential harms of their platforms.

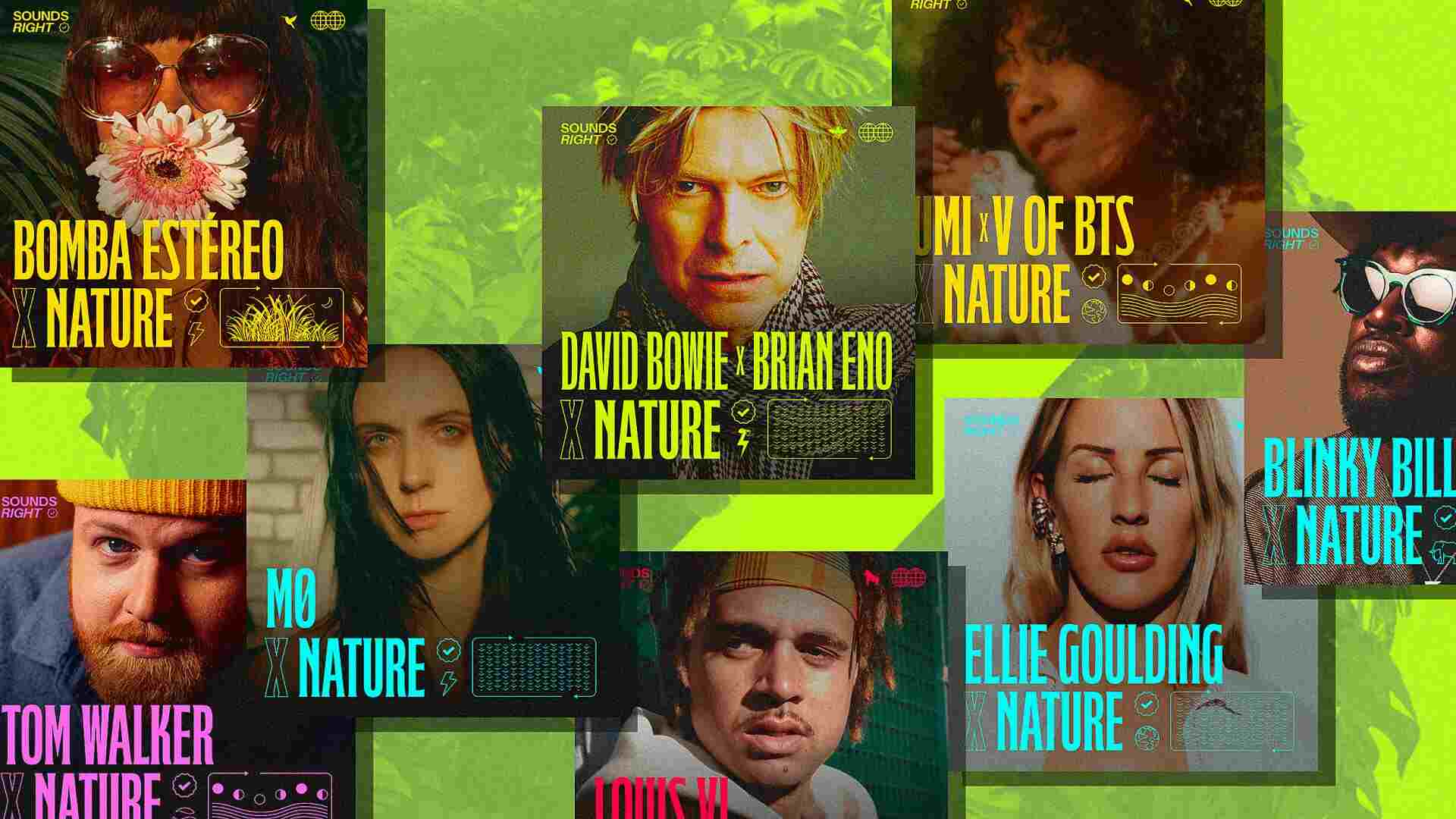

In short, there’s a lot to unpack in the current state of play with social media algorithms. Fast Company’s podcast Creative Conversation explored this topic in three episodes covering what we know (and, more specifically, what we don’t know) about how these algorithms work, their toll on our mental health, and what effective government regulation should look like.

Check out highlights and links to the full episodes below.

THE “BLACK BOX” PROBLEM

What we know of how social media algorithms work often feels dwarfed by how much we don’t know, which Kelley Cotter, an assistant professor in the College of Information Sciences and Technology at Penn State University, frames as “the black box problem.”

Cotter asserts that companies are intentionally opaque about how their algorithms work, both to protect proprietary tech and to avoid potential scrutiny. Social media platforms have offered cursory explanations of why certain content winds up in your feed, and they say exactly what you’d expect: Videos or photos with high engagement (comments, likes, shares, and so forth) are more likely to bubble to the surface. But to Cotter, those explanations amount to little more than PR moves.

“A lot of it also is made up of rationales,” Cotter says. “So not just, ‘This is what the algorithm does,’ but ‘It does this because we want X to happen.’ Usually it’s like, ‘We want to make sure that you’re seeing the things that you care about or you’re making real connections with people.’ So, it’s really a lot of couching of the information in these really lofty goals that they have.”

What is clear about social media algorithms is that TikTok’s has become the one to reckon with.

In Meta’s first-quarter earnings call, CEO Mark Zuckerberg announced that Facebook’s and Instagram’s feeds would incorporate more content from accounts users don’t follow but may find interesting. It’s an obvious attempt to better compete with TikTok’s popular For You page, an endless scroll of discoverability that has been a major factor in the platform’s growth, as well as a source of mystery.

The general consensus on TikTok’s algorithm is that it’s too good. Since TikTok boomed into the zeitgeist during the pandemic, creators and users alike have been trying to crack what makes the all-important For You page so adept at predicting which videos will resonate. The New York Times obtained verified documents from TikTok’s engineering team in Beijing that explained to non-technical employees how the algorithm works. A computer scientist who reviewed the documents for the Times said TikTok’s recommendation engine is “totally reasonable, but traditional stuff,” and that the platform’s advantage is in the massive volumes of data and a format structured for recommended content.

Software engineer Felecia Coleman would agree—to a point.

Coleman is one of many creators who have tried to hack TikTok’s algorithm. Through her experimentation, Coleman posits that TikTok has a “milestone mechanism” (i.e., a point where the algorithm boosts your content when you reach a certain number of videos), as well as a prioritization structure (e.g., front-loading content from what the algorithm deems to be Black creators during Black History Month or Juneteenth).

Those fairly intuitive findings took her from about 4,400 followers when she started her experiment to 170,000 in a month. But Coleman recognizes there are still anomalies in TikTok’s algorithms that may keep other creators from seeing similar results.

“They have a lot of room for improvement when it comes to being more transparent about why certain content is reported, why certain content is blocked, because a lot of people have no choice but to assume that they have been shadow banned,” Coleman says. “As an engineer, I can understand that might not be the case under the hood. There might just be an algorithm that’s getting something wrong. But we need to let the users know that, because there are real emotional and psychological effects that happen to people when they’re left in the dark.”

AN ALGORITHMIC ADDICTION

Social media algorithms are designed with retention in mind: The more dedicated eyeballs, the more advertising revenue pours in. For some people, scrolling through social media for hours on end mostly leaves them feeling guilty for having wasted a chunk of their day. But for others, getting sucked in like that can take a serious toll on their mental health. Studies have linked high levels of social media use to increased depression and anxiety in both teens and adults.

It’s something Dr. Nina Vasan has seen firsthand as a psychiatrist, and something she’s trying to help social media platforms mitigate through her work as the founder of Brainstorm, Stanford’s academic lab focused on mental health innovation. For example, Brainstorm worked with Pinterest to create a “compassionate search” experience in which users searching for topics such as quotes about stress or work anxiety would see guided activities to help improve their mood. Vasan and her team also worked with Pinterest’s engineers to prevent potentially harmful or triggering content from autofilling in search or being recommended.

One of the most important issues that Vasan is trying to solve for across the board is breaking the habit of endless (and mindless) scrolling on social media.

“The algorithms have developed with this intention of keeping us online. The problem is that there’s no ability to pause and think. Time just basically goes away,” Vasan says. “We need to think about how we can break the cycle and look at something else, take a breath.”

Platforms, including TikTok and Instagram, have introduced features that let users monitor how much time they’re spending in the apps. But Louis Barclay came up with a more radical solution to his Facebook addiction, one that ultimately got him banned.

Around six years ago, Barclay started noticing just how much time he and everyone around him were spending online. So he quit his job in investment banking, taught himself how to code, and started creating tools to help reduce our addiction to the internet. One of those tools was Unfollow Everything, a browser extension that essentially let Facebook users delete their News Feeds by unfollowing all their friends, groups, and pages. Facebook introduced unfollowing in 2013 as a way for users to stop seeing posts from people they’re connected to without unfriending them.

What Barclay added was a way to do it automatically and at scale. “I am the first person to say that I’m not anti every single thing that Facebook or Instagram does. But at the same time, the fact that you have to go via this addictive central heart, this feed, to get to those other useful things, to go into that amazing support group that exists or talk to your grandmother, that’s the thing that really sucks for me,” Barclay says. “So I did feel this incredible sense of control from getting to decide what that default was gonna be.”

However, Unfollow Everything violated Meta’s terms of service, and Barclay was banned from both Facebook and Instagram. A Meta spokesperson tells Fast Company that both platforms have been implementing tools that give users more control over what they see in their feeds. The spokesperson also says the company has reached out to Barclay multiple times this year to resolve his ban.

Whether or when Barclay gets back on these platforms remains to be seen. For now, he’s focused on researching and inventing more ways to help users build a healthier relationship with social media. His latest project is Nudge, a Chrome extension to help people spend less time on the internet, and he’s working with researchers to better understand what more can be done in this area.

“One of the first things that we need to do to be able to regulate against big tech is to get solid research showing which kind of interventions we should actually put into law,” Barclay says.

IMPORTING BIG TECH REGULATION

Social media companies aren’t as forthright as they could be about how their platforms work. We know social media has fundamentally impacted politics and our health. So what’s the government doing about social media? That’s been the trillion-dollar question, particularly in the United States.

Top executives across Meta, Twitter, TikTok, YouTube, and Snap have all been grilled on Capitol Hill. Facebook whistleblower Frances Haugen testified before Congress last year, stating that “the severity of this crisis demands that we break out of our previous regulatory frames.”

Lawmakers have introduced bills here and there that try to take on such issues as addictive algorithms and surveillance advertising. But so far, no meaningful regulation has taken shape. The European Union, by contrast, has introduced legislation such as the General Data Protection Regulation and the Digital Services Act, aimed at enforcing transparency from big tech and protecting users’ privacy.

Rebekah Tromble, director of the Institute for Data, Democracy, and Politics at George Washington University, believes the same hasn’t been done in the U.S. because of interpretations of the First Amendment and increasing political polarization.

“It’s hard to see our way out of this. If there’s any real hope, it will be Europe that’s leading the way,” Tromble says. “The European regulation that’s in the pipeline is going to have impact far beyond Europe. Those studies and audits that the platforms are going to have to open themselves up to, they’ll be focused on the impacts on European citizens, but we’ll be able to say a lot about what the likely impacts are on American citizens.”

“So, there, I do have a bit of optimism,” she continues. “But in terms of how we might wrest the public’s power back and achieve fundamental accountability for the platforms in the U.S., I remain unfortunately pretty pessimistic overall.”

What researchers like Tromble and Joshua Tucker, co-director of NYU’s Center for Social Media and Politics, are after is access to ethically sourced user data.

“Access is like the prime mover of this, because I can’t tell you what the regulation should look like if I don’t know what’s actually happening on the platforms,” Tucker says. “The only way that those of us who don’t work for the platforms are gonna know what’s happening on the platforms is if we have access to the data and if we can run the kinds of studies that we need to run to be able to try to get answers to these questions.”