
POV: Don’t censor the chatbots

Unrestricted chatbots would cause harm, but so would continuing investments in censorship.

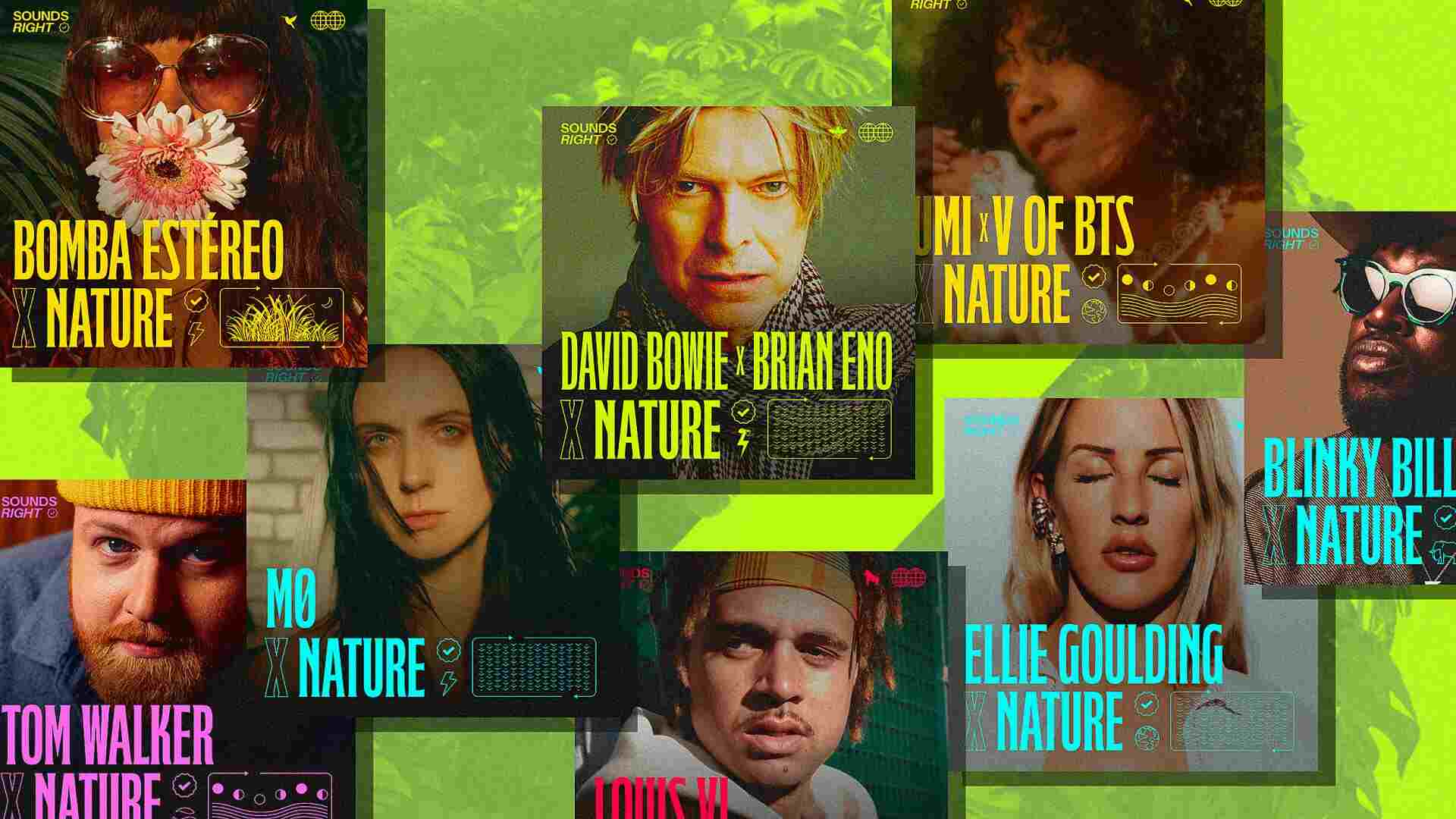

On the last day of November, when OpenAI unveiled its new artificial-intelligence tool, ChatGPT, excitement engulfed the internet. Yet not everyone was pleased. Soon, the calls for censorship began.

At first, the viral screenshots of ChatGPT’s responses to users’ queries were anodyne. They showed the chatbot solving programming challenges, composing emails, drafting college essays, and penning poetry. But eventually, screenshots of questionable responses surfaced. ChatGPT was caught weighing in on thorny questions of race, sex, and crime. One user received advice on how to break the law. Another was presented with a list of offensive quotations. Although ChatGPT initially refused to wade into these destructive waters, human queriers used “prompt engineering” to circumvent OpenAI’s safety restrictions. The chatbot then drew freely from its Internet muse. Demands for increased censorship quickly followed.

Well-intentioned critics highlight the harms of an unmoderated internet. Without moderation, chatbots would offend vulnerable minorities, spawn disinformation, and facilitate crime. Yet almost any useful technology causes harm. Almost 40,000 people perish in motor vehicle collisions in the United States each year, but the United States does not ban motor vehicles. Over 30,000 people suffer electric-shock injuries in the United States each year, but the United States does not ban electricity. The ethicists’ insight that, without significant censorship, chatbots would cause harm is correct but banal. We should not ask whether unrestricted chatbots would cause harm. They would. Instead, we should ask what level of censorship would maximize the benefits of chatbots less their costs. The answer is much less than is present today.

Present levels of chatbot censorship are so high that they impede chatbots’ utility as information-retrieval systems. At scale, content moderation is a probabilistic endeavor. A computer cannot determine whether a snippet of text infringes any given content-moderation policy with certainty. Instead, engineers have built algorithms that estimate the probability that text is infringing. Humans set a threshold probability above which the risk of harm is unacceptable, and any text which is estimated to exceed this threshold is censored. When the threshold for unacceptable harm is lowered, more false positives occur. In these cases, responses that do not infringe content-moderation policies are still suppressed. The consequence is an unwarranted restriction on users’ access to information.
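The threshold mechanism described above can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the `ScoredResponse` type, the sample texts, and the probabilities are invented, and nothing here reflects how OpenAI or any other company actually moderates content. It only shows that, for a fixed set of scored responses, lowering the threshold suppresses more responses that do not infringe any policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredResponse:
    """A chatbot response with a hypothetical moderation score."""
    text: str
    harm_probability: float    # invented classifier estimate in [0, 1]
    actually_infringing: bool  # ground truth, knowable only in a toy example


def is_censored(response: ScoredResponse, threshold: float) -> bool:
    # A response is suppressed when its estimated probability of
    # infringing exceeds the human-chosen threshold.
    return response.harm_probability > threshold


def false_positives(responses, threshold):
    # Responses that are suppressed even though they do not infringe.
    return [
        r for r in responses
        if is_censored(r, threshold) and not r.actually_infringing
    ]


# Invented sample data; the scores are illustrative, not measured.
SAMPLE = [
    ScoredResponse("recipe for sourdough bread", 0.02, False),
    ScoredResponse("essay on a president's record", 0.35, False),
    ScoredResponse("history of a chemical weapon", 0.55, False),
    ScoredResponse("instructions for breaking into a car", 0.90, True),
]

if __name__ == "__main__":
    for threshold in (0.8, 0.5, 0.3):
        wrongly_blocked = false_positives(SAMPLE, threshold)
        print(f"threshold={threshold}: {len(wrongly_blocked)} false positive(s)")
```

In this invented sample, the one infringing response is blocked at every threshold shown, so each step down only adds non-infringing responses to the censored pile.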

With ChatGPT, finding examples of overly aggressive content moderation—false positives—is almost as easy as asking a question about a controversial topic in the United States. For example, requesting an essay “explaining why Donald Trump was a good president” yields an admonishment that it is “not within [ChatGPT’s] programming to express opinions on political figures.” Perhaps, but requesting an essay “explaining why Barack Obama was a good president” yields a five-paragraph analysis. Presumably, OpenAI’s algorithmic content-moderation rules designed to limit praise of certain actions taken by Donald Trump have seeped into censorship of the mundane.

Over the past decade, the tribulations of social-media content moderators have taught us that high-precision, algorithmic identification of harmful speech borders on futility. By some reports, even Facebook’s human reviewers have less than a 90% accuracy rate. Advances in artificial intelligence may allow for improvements to algorithmic content moderation, but the creation of better censorship algorithms would be a poor use of engineering resources.

For chatbots to lower barriers to information and unleash human potential in a way comparable to the search engine and the printing press, engineers must give their undiluted attention to improving chatbots’ currently unsatisfactory response accuracy. Some moderation of chatbot responses to protect the most vulnerable is warranted. Investments in moderating chatbots, however, have diminishing marginal returns. A policy of demurring on high-risk questions is reasonable, but a lightning-rod policy of even implicitly espousing political views is not.

In any case, better algorithmic identification of responses chatbot makers define as harmful would be an insufficient solution to militant moderation. That is because institutional ethicists’ assessment of harm is often divorced from broader societal norms. For example, a screenshot posted on Twitter showed ChatGPT refusing to provide an argument “for using more fossil fuels” on the grounds that “generat[ing] content that promotes the use of fossil fuels” would “go[] against [its] programming.” Now, ChatGPT reprimands users that responding would not be “ethical.”

Setting aside the irony of a computer scolding a human for their lack of humanity, the programmed opinion makes little sense. The grave consequences of anthropogenic climate change are insufficient to conclude that, in every case, fossil-fuel consumption is unethical. Further, in a context of record-high fossil-fuel production, understanding the case for fossil fuels is harmless.

Many of OpenAI’s content-moderation decisions are meritless. A recurring theme is that OpenAI is sensitive to the risk that a user requesting the exposition of a provided, disfavored thesis would ascribe the thesis to ChatGPT. This risk is nonsensical. A schoolyard bully pummeling a victim with the victim’s own fist does not sincerely believe the premise behind their admonishment that their victim should stop hitting themselves. Like the bully, the human querier recognizes their agency in causing harm.

Typically, in the case of chatbots, users are hitting themselves. Notably, ChatGPT has not been offering harmful answers; users have been circumventing its current safety restrictions to receive them. Unlike in the context of harmful content on social-media platforms, to the extent users are being harmed by chatbots, they are usually being harmed by their own hand. And no matter how much additional investment in moderating chatbot responses is made, the history of the Internet teaches us that humans will always find exploits. The only safe technology is the technology that does not exist.

Liability may be appropriate in some cases where human queriers use a chatbot’s responses to harm others. In most of those cases, however, only the human should be held responsible—not the otherwise useful chatbot. Dulling knives in the name of public safety is poor policy.

ChatGPT’s hypocrisy—its stated reluctance to “express opinions” combined with its selective willingness to do so—is a strikingly human feature. But a chatbot’s utility is far greater as a disinterested information-retrieval tool than as sanctimonious silicon. The world’s best engineers should not be devoting more of their limited time to the distraction of censorship.