
Meet Google’s new AI Search. And its feisty alter ego

Google is taking a split personality approach to its AI search: business in the front, party in the back.

This week at Google’s annual I/O conference, the company has something big to prove: that it can still rule search in the AI age. Over hours of demos and interviews with members of Google’s Search team, the company laid out its strategy for doing so.

Search was once a single product: that gleaming white search bar that lives in the browser. Now searching with Google has been split into two separate AI experiences that balance Google’s impossible scale with newly necessary innovation.

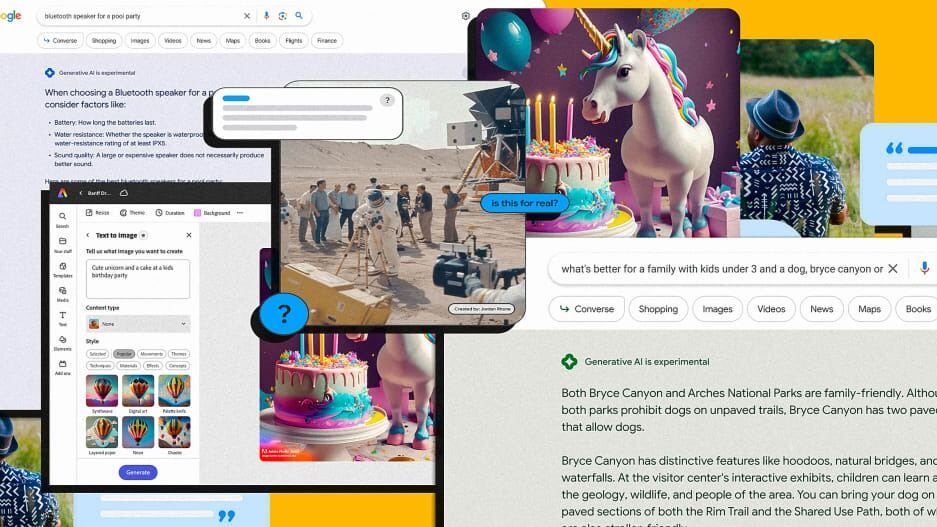

The first is classic Google search itself. Starting this month, people in the U.S. will be able to opt into a new AI search experience via Google Labs, the company’s idea incubator (much as Gmail has long offered beta tools in its settings).

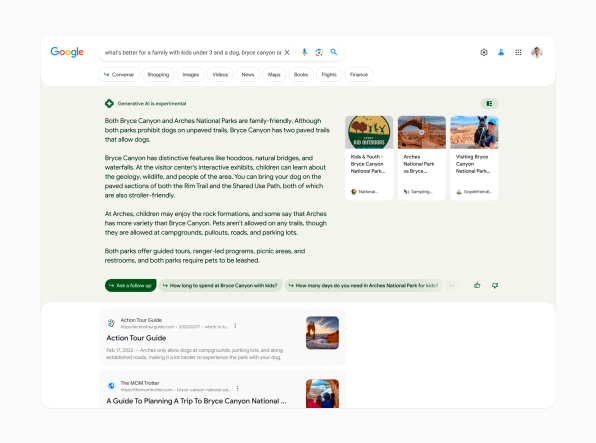

This new search will summarize answers for you, without making you click a link or pull information from Wikipedia. The latest AI models will also offer product comparisons and even AI-generated shopping pages. But Google is keeping AI in its flagship product on a tight leash. It avoids summarizing breaking news, for instance, and every fact its AI presents is double-checked by Google before you see it in the Chrome desktop browser or the Google mobile app, where the product will debut.

The second AI experience is Bard. Introduced earlier this year, Bard is more conversational. It will generate sitcom scripts for you, caption images you upload, and even integrate with select third-party AI tools. For instance, Adobe is bringing its Firefly image generation tool to Bard. Bard is given more license to experiment because it doesn’t put Google’s main search product at risk.

Unlike Microsoft, which had nothing to lose when it built OpenAI’s ChatGPT technology into Bing (even as the AI ran amok), Google has to worry about deploying AI across the billions of queries its search engine handles every day. So Google search is betting on trust, while Bard is positioned to compete on novelty.

Across Google’s services, the result is something of a split personality in the company’s approach to AI. If Google search is Dr. Jekyll, then Bard is the wilder Mr. Hyde. Call the approach conservative, or call it measured. Either way, Google’s AI search will still feel distinctly like googling.

GOOGLE SEARCH IS BUILT TO BE RIGHT, EVEN IF DULL

To understand how the new Google search will work, you have to first understand a bit about the newest AI models.

The latest wave of conversational and image-generating AI doesn’t actually understand what it’s presenting. Instead, the system essentially dreams up/hallucinates/bullshits something on command (choose your favorite metaphor). It places pixels or words where it predicts they should go, based on patterns learned from other material. These systems appear to give perfect results until you realize that, no, ChatGPT didn’t actually do the math correctly because it doesn’t understand algebra, or that Midjourney might still put six fingers on a human hand because it doesn’t know how many fingers human DNA mandates.

“The models sound extremely confident no matter the quality of information, and you can push the model to do more of that, or push it to do less,” explains Liz Reid, VP of search at Google. “With search, we’ve chosen to focus on a place with more factuality . . . then over time, build up fluidity, or how natural it sounds.”

In other words, Google does not want its core search product to be convincing but wrong. So it’s tuning its AI results to be less “improvisational,” as Reid puts it. That’s a big reason why Google search won’t generate a script for The Office, while Bard will. Instead, Search’s text responses stick to drier, encyclopedic-style descriptions or simple bulleted lists.

The key word Reid uses is “corroboration.” After Google’s AI generates a response for Search, the system essentially googles itself, looking for sources that support its claims. Within the search UI, you can even expand the AI-written summary to reveal, line by line, where each item was corroborated.

“If it can’t back up things in search, it won’t show them,” says Reid.

This line in the sand creates edge cases for Google’s AI search. Medical and financial advice, in particular, scare the Search team, as does politically charged breaking news. When my colleague Mark Sullivan challenged the system to explain what had happened in the shooting in Allen, TX, the AI generated no response at all; the page showed only standard web results. Frankly, it isn’t clear to the user what happened, and Google offers no explanation. That lack of feedback is a problem for a public learning to use these tools for the first time, and it amounts to something akin to gaslighting through UX.

“We have to figure out both, how do we teach people what’s possible, and then, the technology is quickly changing . . . what you can do in six months might be different from today,” says Reid. “That’s an interesting challenge in this space. You’re trying to teach people they can do more than they used to do, but they can’t necessarily do [everything they imagine].”

Indeed, much of the UI is about teaching people to do more and dig deeper with Google search than they could before, modifying a query or adding details to get better results. One tool along these lines is “quick converse chips”: speech-bubble buttons you can tap to ask a more specific follow-up question about the results AI search generated.

It’s a simple tool, similar to Google’s existing autocomplete offerings (and Bing has a similar feature, too). But as Rhiannon Bell, VP of user experience for Search, explains, the chips teach people how to refine their searches. These tools will be particularly salient in shopping searches, where Google promises you can clarify what you’re looking for and get real-time product results (which Google says it updates more than a billion times a day).

Meanwhile, other parts of the search UI are powered by AI, but you might never realize it. For instance, a new product comparison page will quickly summarize the differences between two items, then present a side-by-side spec comparison. I’ve seen side-by-side UI elements like these in Google search before, somewhere! Yet now they’re powered by the latest generative AI models.

So how does generative AI make them different from the old results? For one, a summary up top compares the products head-to-head like a mini product review. But truthfully, there may be more going on under the hood. As the Google team explains, it uses the new AI models not only to generate results but also to better sort and present information on the search page itself.

SUPERGLUING THE SEARCH INTERFACE

Binding Google search together is a design system dubbed “Superglue,” which has been evolving since 2021 for this moment.

To disclose which parts of search are generated by AI, Google is using a new sparkle icon. AI results are also wrapped in bright colors lifted from images within the search results. This trick is an extension of Google’s Material You design system, which you may know from Android.

This front-end design is doing two important jobs. First, it distinguishes AI-generated content from conventionally indexed information. We’re in a strange AI limbo period in which we’re all trying to separate fact from fiction from the murk in between, and Superglue is meant to help make that possible.

Second, Superglue is a shrewd business play: Google is using the search UI to essentially brand its approach to AI. Beyond color, the team is switching from Google’s Roboto font (which is freely available under an open-source license) to its proprietary Google Sans.

“That gives us this opportunity for a more proprietary expression,” says Bell. In other words, Google wants you to know that your results come from Google, an issue sure to get murkier as generative AI evolves to produce richer and more complex media on demand. For instance, Google is also announcing a new (non-AI) “Perspectives” search that surfaces videos of people on TikTok and Instagram. These results come from microinfluencers rather than news outlets. I point out that it’s not hard to imagine Google simply generating a video of an imaginary person to deliver results the same way. Five years ago, suggesting such an idea would have drawn a few awkward giggles in the room. Now? No one batted an eye. They agreed.

THE TWO-HEADED STRATEGY

If you had to bet on the future of one American technology company over the next 20 years, Google, with its mix of expertise in search, ads, Android, AI, and hardware, seemed a pretty safe choice.

That was until late 2022, when OpenAI rocked the world with its conversational ChatGPT, and Microsoft soon brought the technology into Bing (a development Google is still countering). Google leadership went into a reasonable panic as OpenAI wooed away its senior coders. Then last week, an internal memo leaked out of Google detailing the real threat to the company’s supremacy in AI: the world of open-source AI development, where community members are training models far more cheaply and quickly than even Google’s best work can match. (Where did the open-source community’s magic AI tech come from? It actually leaked out of Meta!)

At last, we’ve seen Google’s response. It will continue to position its main search tool as reliable above all else, built for usefulness and ease of use. And it will use Bard as an experiment probing what AI tools can bring not just to search but to the vast field of human-computer creativity. Bard is essentially a massive consumer research program in which Google is trying to answer one question: Just what do you want to do with generative AI, anyway?

It may seem inevitable that these two platforms will eventually merge into what we consider Google: an always-on answer for any question in the back of our minds. But perhaps they won’t need to. Google doesn’t have the benefit of being a startup anymore. It cannot be charmingly wrong. And that’s a major disadvantage as it attempts to integrate the most cutting-edge AI research of our day. Search can’t be wrong. But Bard? Bard can get it wrong. Bard can be the bad guy.