
These wild AI-powered glasses can read your own lips

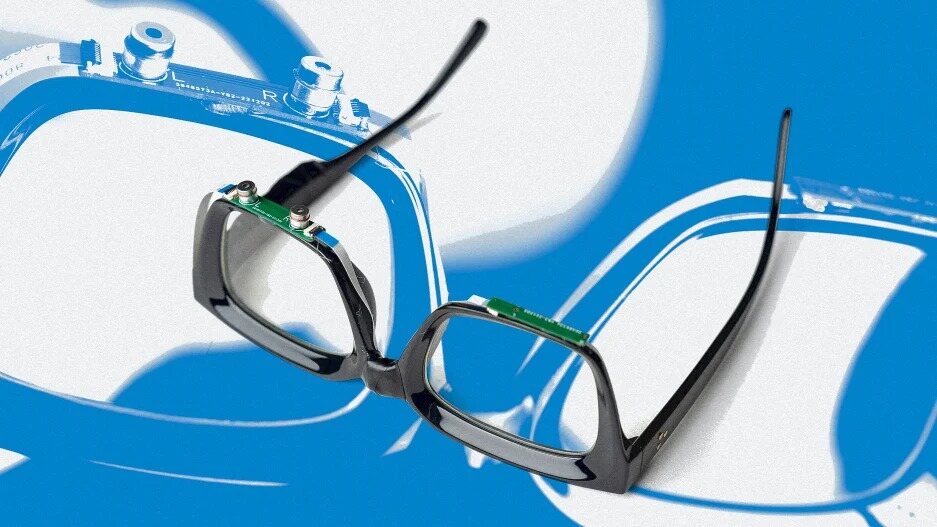

A team of scientists at Cornell’s SciFi Lab has developed a pair of glasses that can read your very own lips, without your having to utter a single sound.

In 1993, Seinfeld based an entire episode on the perils of lipreading, culminating in George mistaking “sweeping together” for “sleeping together.” Outside of pop culture, the art of lipreading has fascinated psychologists, computer scientists, and forensic experts alike. In most cases, experiments have involved someone reading someone else’s lips or, in the case of lipreading programs like LipNet or Liopa, AI reading a human’s lips through a phone app. But a different kind of experiment is currently unfolding at Cornell’s Smart Computer Interfaces for Future Interactions (SciFi) Lab.

There, a team of scientists has devised a speech-recognition system that can identify up to 31 words in English. But EchoSpeech, as the system is called, isn’t an app; it’s a seemingly standard pair of eyeglasses. As outlined in a new white paper, the glasses (purchased off the shelf) can read the user’s very own lips and help those who can’t speak perform basic tasks, like unlocking their phone or asking Siri to crank up the TV volume, without having to utter a single sound. It all looks like telekinesis, but the glasses, which are kitted out with two microphones, two speakers, and a microcontroller so small they practically blend in, actually rely on sonar.

Over a thousand species use sonar to hunt and survive. Perhaps the best known among them is the whale, which sends out pulses of sound that bounce off objects in the water and return, so the mammal can process those echoes and build a mental picture of its environment, including the size and distance of objects around it.

EchoSpeech works in a similar way, except the system doesn’t focus on distance. Instead, it tracks how sound waves (inaudible to the human ear) travel across your face and how they hit various moving parts of it. The process can be summed up in four key steps. First, the little speakers (located on one side of the glasses) emit sound waves. Second, as the wearer mouths various words, the sound waves travel across their face and hit various “articulators” like the lips, jaw, and cheeks. Third, the mics (located on the other side of the glasses) collect those sound waves. Finally, the microcontroller, working with whatever device the glasses are paired with, processes them.
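To make the echo idea concrete, here is a minimal Python sketch of those four steps. It is an illustration under stated assumptions, not the team’s implementation: the probe signal, the delays, the gains, and every function name are made up, and cross-correlation is simply one common way to pick echoes out of a recording. A mouth movement is modeled as a reflection path whose delay and strength change.

```python
# Hypothetical sketch: all names and numbers are illustrative assumptions,
# not details from the EchoSpeech paper.

def simulate_received(pulse, echoes, length):
    """Steps 1-3: the mic hears delayed, attenuated copies of the emitted pulse."""
    signal = [0.0] * length
    for delay, gain in echoes:
        for i, sample in enumerate(pulse):
            signal[delay + i] += gain * sample
    return signal

def cross_correlate(received, pulse):
    """Step 4: slide the known pulse along the recording; peaks mark echoes."""
    lags = len(received) - len(pulse) + 1
    return [
        sum(received[lag + i] * pulse[i] for i in range(len(pulse)))
        for lag in range(lags)
    ]

def echo_delays(profile, threshold=1.0):
    """Return the lags (in samples) where the profile rises above a threshold."""
    return [lag for lag, value in enumerate(profile) if value > threshold]

# Short probe code with small correlation sidelobes (a length-5 Barker sequence,
# chosen for the demo only).
pulse = [1.0, 1.0, 1.0, -1.0, 1.0]

# Two made-up mouth shapes: one fixed reflection plus one that shifts and strengthens.
closed_mouth = simulate_received(pulse, [(3, 0.8), (10, 0.3)], length=24)
open_mouth = simulate_received(pulse, [(3, 0.8), (13, 0.5)], length=24)

profile_closed = cross_correlate(closed_mouth, pulse)
profile_open = cross_correlate(open_mouth, pulse)

print("closed:", echo_delays(profile_closed))
print("open:  ", echo_delays(profile_open))
```

The two printed lists differ only in the second echo, which is the kind of change a recognizer could learn to associate with a specific movement of the lips or jaw.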

But how does the system know to assign a particular word to a particular facial movement? Here, the researchers used a form of artificial intelligence known as a deep-learning algorithm, which teaches computers to process data in a way loosely inspired by the human brain. “Humans are smart. If you train yourself enough, you can look at somebody’s mouth only, without hearing any sound, and you can infer content from their speech,” says the study’s lead author, Ruidong Zhang.

The team used a similar approach, except instead of another human inferring content from your speech, the team used an AI model previously trained to recognize certain words and match them to a corresponding “echo profile” of a person’s face. To train the AI, the team asked 24 people to repeat a set of words while wearing the glasses. They had to repeat the words several times but not consecutively.
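The matching step can be pictured with a much simpler stand-in than deep learning. The Python sketch below is an assumption-laden toy, not the researchers’ model: it averages the repeated recordings of each word into one reference “echo profile” and labels a new recording with the closest reference. The feature vectors and the `train` and `predict` helpers are hypothetical.

```python
import math

# Hypothetical sketch: a nearest-centroid classifier standing in for the
# deep-learning model described in the article. All data is made up.

def centroid(vectors):
    """Average several recordings of one word into a single reference profile."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def train(samples):
    """samples maps each word to the feature vectors from its repetitions."""
    return {word: centroid(vectors) for word, vectors in samples.items()}

def predict(model, features):
    """Pick the word whose reference profile is closest to the new recording."""
    return min(model, key=lambda word: math.dist(model[word], features))

# Each word is repeated a few times by the same wearer (invented numbers).
samples = {
    "up": [[0.9, 0.1, 0.2], [1.0, 0.2, 0.1]],
    "down": [[0.1, 0.8, 0.3], [0.2, 0.9, 0.2]],
}

model = train(samples)
print(predict(model, [0.95, 0.15, 0.1]))
print(predict(model, [0.1, 0.9, 0.3]))
```

Because the reference profiles here come from a single wearer’s recordings, a new wearer would need new training data, which is a simplified picture of why a system like this may need per-user calibration.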

For now, EchoSpeech has the vocabulary of a toddler. It can recognize all 10 digits. It can capture directions like “up,” “down,” “left,” and “right,” which Zhang says could be used to draw lines in computer-aided design software. And it can activate voice assistants like Alexa, Google, or Siri, or link to other Bluetooth-enabled devices.

A recent test in which the team paired the system with an iPad achieved 95% accuracy, but there’s still work to be done on improving usability. Currently, EchoSpeech has to be trained every time someone new puts on the glasses, which could significantly hinder progress as the system is scaled up. Still, the team believes that with a large enough user base, the computer model could eventually gather more data, learn more speech patterns, and apply them to everyone.

Zhang says that growing the system’s vocabulary to 100 or 200 words shouldn’t pose any particular challenges with the current AI, but anything larger than that would require a more advanced AI model, which would piggyback on existing research on speech recognition. This is an important step, considering the team wants to eventually pair the system with a speech synthesizer and help people who can’t speak vocalize sound more naturally and more efficiently.

For now, EchoSpeech is an intriguing proof of concept with tremendous potential for disabled people, but the team doesn’t expect it to be usable for another five years. And that’s just for the English language. “The difficulty is that each language has different sounds,” says Francois Guimbretière, a coauthor of the study, who is French. And different sounds can mean different facial movements. But it also depends on the kinds of languages the AI model is trained on. “There is a push to take care of [other] languages so that not all the technology is focused on English,” says Guimbretière. “Otherwise, all these [other] languages will die.”