
Could the AI revolution crash our data centers?

Experts warn that the new gold rush of artificial intelligence could create a capacity shortage at data centers worldwide.

The hundreds of AI tools now flooding the market share at least one thing in common: They all require massive amounts of data to operate. And as usage of those tools increases, the draw on data centers will become ever greater—potentially throwing a hitch into the generative AI revolution.

Beyond that, the surge in demand driven by generative AI tools could also have knock-on effects on existing energy infrastructure, pushing electricity grids to the brink and causing a supply crunch that could lead to data center shortages worldwide.

Things are already tightening: Demand for data center capacity reached record highs in Europe last year, while net absorption—the sum of space occupied, minus the sum of space that became vacant, over a set period—doubled in North America, from 1.74 gigawatts to 3.45 gigawatts.
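As a rough illustration of the net absorption definition above, the calculation can be sketched in a few lines of Python. Only the two North American totals (1.74 and 3.45 gigawatts) come from the reporting. The function name and the occupied/vacated split in the example are hypothetical, chosen purely to show how the figure is derived.

```python
def net_absorption(occupied_gw: float, vacated_gw: float) -> float:
    """Capacity newly occupied minus capacity that became vacant, over one period."""
    return occupied_gw - vacated_gw


# Net totals quoted in the article for North America, in gigawatts.
previous_net_gw = 1.74
latest_net_gw = 3.45

# How many times larger the latest figure is than the previous one.
growth_factor = latest_net_gw / previous_net_gw
print(f"Growth factor: {growth_factor:.2f}x")

# Hypothetical inputs for illustration only; this split is NOT from the article.
example_net_gw = net_absorption(occupied_gw=4.0, vacated_gw=0.55)
print(f"Example net absorption: {example_net_gw:.2f} GW")
```

The growth factor comes out just under 2, which is consistent with the description of net absorption having roughly doubled.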

“Consumers and businesses are expected to generate twice as much data in the next five years than all the data created over the past decade, and as such we are already seeing demand for storage capacity skyrocket,” says Daniel Thorpe, a data center researcher at the firm JLL.

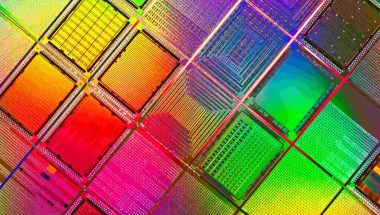

It’s not just that the scale of interest in artificial intelligence is going to stretch data center capacity to the breaking point. The tech used to power the AI systems will also be far more demanding than what data centers are accustomed to. The International Energy Agency’s recent forecast said electricity consumption from the AI, data center, and cryptocurrency sectors could equal all of Japan’s by 2026—and that analysis came before some of the biggest names in the AI chip sector announced their updated technology.

“Nvidia’s latest announcement, that Blackwell-based AI clusters will require 100 kilowatts per rack, a figure 400% to 500% higher than current energy usage levels, only compounds this challenge,” says Alex McMullan, CTO at the storage equipment-maker Pure Storage. “New power-hungry GPUs, housed in newer and larger data centers, will place enormous burdens on existing power supply networks.”
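To put McMullan's percentages in concrete terms, the arithmetic can be run backward to estimate the per-rack power he is comparing against. This is a back-of-the-envelope sketch, not a figure from Nvidia or Pure Storage. It assumes "400% to 500% higher" is meant literally, as an increase of that size over today's level, and the function name is hypothetical.

```python
def implied_baseline_kw(new_kw: float, percent_higher: float) -> float:
    """Baseline that, raised by percent_higher percent, gives new_kw."""
    return new_kw / (1 + percent_higher / 100)


# Per-rack figure quoted in the article for Blackwell-based clusters.
blackwell_rack_kw = 100.0

# Assumption: "X% higher" means baseline * (1 + X/100).
low_kw = implied_baseline_kw(blackwell_rack_kw, 500)
high_kw = implied_baseline_kw(blackwell_rack_kw, 400)
print(f"Implied current rack power: {low_kw:.1f} to {high_kw:.1f} kW")
```

Under that reading, the comparison implies that current racks draw somewhere around 17 to 20 kilowatts. If the percentages were meant more loosely, as "four to five times" current levels, the implied range would be 20 to 25 kilowatts instead.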

Nvidia in particular is pressing data center capacity to its limit, says Ivo Ivanov, CEO of DE-CIX, one of the world’s largest operators of internet exchanges and data centers. Because Nvidia is projected to manufacture so many AI chips and units, much of the expected rise in demand for data center capacity will stem from the company itself. “We calculate that for the U.S. alone, around 50% additional data center capacity will be needed compared to 2020 levels, and that is just to cater for the server unit shipments projected for 2027,” Ivanov says, adding that the situation is causing “a pressing imperative to mitigate the risks of capacity shortages.”

In part that’s because not only are more data centers needed, but the supporting infrastructure that helps run those data centers needs to be improved to meet AI’s requirements. Existing power connections, cooling infrastructure, and backup generators are designed to work with previous generations of technologies, rather than the AI-ready servers and chips increasingly being deployed, says Matthew Cantwell, director of product development at Colt Data Center Services. “Facilities are now being built and retrofitted to cater to this new power demand; however, AI is developing at such speeds that data center supply is likely to become increasingly constrained.”

JLL’s Thorpe says that the design of new AI chips, and the speed at which they run, will cause problems for older data centers. Those chips run significantly hotter than the conventional air-cooling systems used in some data centers can handle. As a result, liquid cooling, in which liquid rather than air passes by data center racks to reduce heat in a facility, will be required to keep temperatures down. Although liquid cooling is not as common as air cooling, the industry is moving toward it anyway because of its efficiency benefits: It can reduce the power needed for a data center by as much as 90%.

Nevertheless, hope remains for the future. “There are still efficiencies that could be made in existing capacity in data centers today,” Cantwell says. “Understanding how much energy the existing compute consumes is key to boosting energy efficiency and maximizing the use of available infrastructure to support new AI demands.”

But bizarrely, the one thing that is pushing data centers to the brink could also help alleviate the AI data center crisis. Artificial intelligence is already being used to manage energy use more efficiently, and it’s no different in the world of data centers. “The immediate solution lies with efficient data center technologies that are capable of vastly reducing the space, power, and cooling needs of AI and other data storage requirements,” says Pure Storage’s McMullan. And that could include insights from AI itself.