
Researchers explain why commanding employees doesn’t always make them more productive

Leader control has been gradually eroding for centuries, starting with the Industrial Revolution.

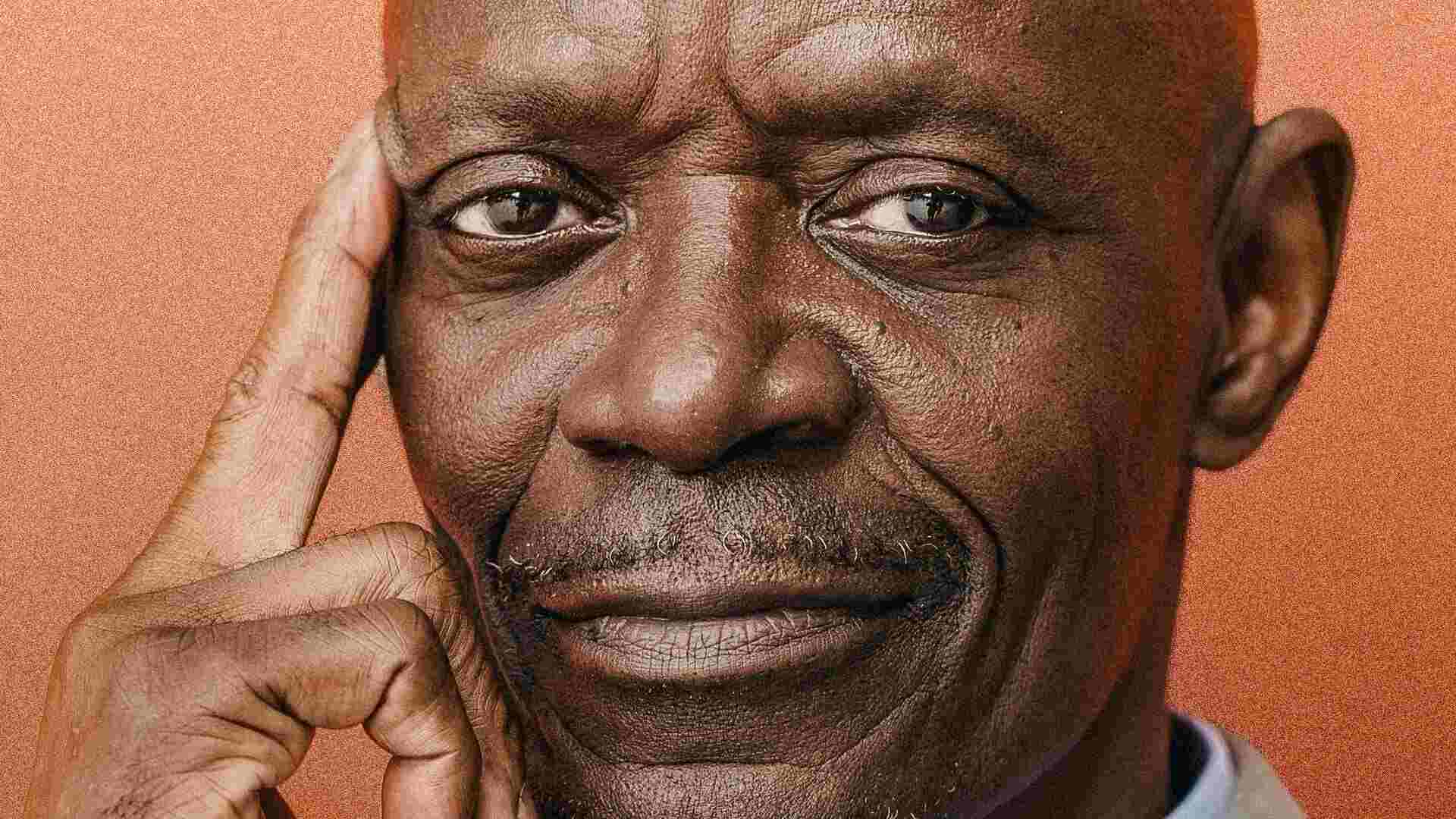

“Why is it that we often design organizations as if people naturally shirk responsibility, do only what is required, resist learning, and can’t be trusted to do the right thing?”—Abraham Maslow

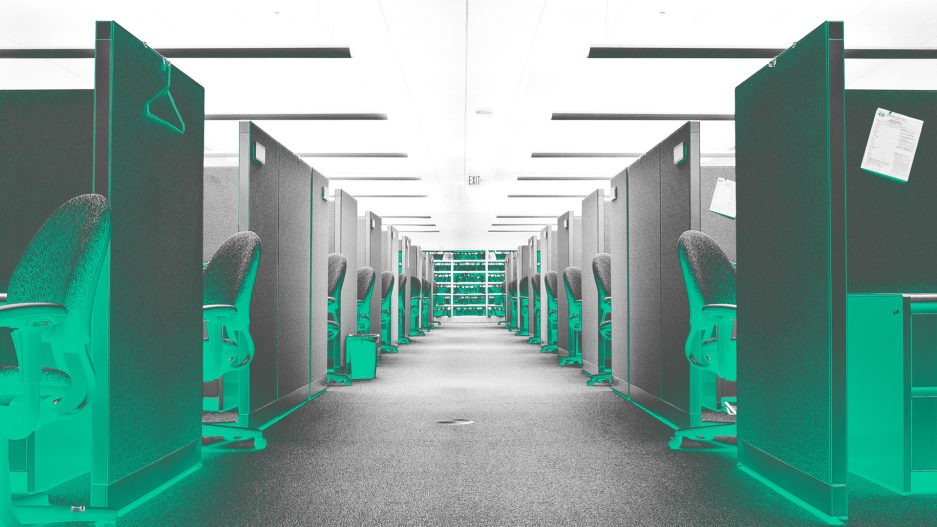

We don’t know if Maslow’s question decades ago was rhetorical. But we do know that it remains as relevant as ever. With the recent increase in remote working, organizational leaders have started asking a closely related question: How do we know if our employees are working and productive? The question assumes that the only way leaders can know whether people are productive is to see them physically in the office.

Consider the temptation to exert control these past few years, as hybrid and fully remote work surged and leaders stopped seeing employees on a regular basis. The number of people in remote-ready jobs working in either hybrid or fully remote arrangements essentially doubled.

Leader control has been gradually eroding for centuries, starting with the Industrial Revolution. But with increases in quit rates in 2021 and 2022, leader control hit a new low. New freedom, low unemployment, and more job openings led employees to be more selective about where and how they work.

THE EVOLUTION OF PEOPLE MANAGEMENT

Prior to the 19th century, most businesses were relatively small. A business owner might manage some family members and a few other employees in a specialized craft. The Industrial Revolution changed that. Larger organizations were formed to mass produce goods in manufacturing and industrial plants. These large organizations needed structures so business owners could manage the large number of people.

In the late 1800s and early 1900s, the discipline of management started appearing in higher education. Later in the 20th century, “scientific management” became more prominent through methods such as reengineering and Six Sigma. Psychologists and sociologists entered the mix and began developing new theories for managing people.

In practice, people management was mostly command and control, even though some scholars were theorizing that a “participative” approach to managing might work best. With the growth of the information age, people accumulated knowledge and expertise—and they would take that expertise with them when they changed companies.

Different theories for how to manage people emerged.

In the 1950s and 1960s, Douglas McGregor, a professor at MIT and one of Maslow’s collaborators, created a theoretical fork in the road when he described two approaches to management: Theory X and Theory Y. Theory X posits that the average employee is not ambitious or highly responsible and is mainly motivated by rewards and punishments—people are extrinsically motivated, the theory goes. The management style for those who subscribe to Theory X is command and control, or micromanagement.

Theory Y takes the view that the average employee is self-motivated, responsible and fulfilled by the work itself—they are intrinsically motivated. The management style for those who endorse Theory Y is to involve employees and encourage them to take ownership of their work. These managers tend to get to know employees on a more personal level than Theory X managers.

Which theory is right?

Meta-analyses published in the management literature show that both styles can get results, but different results. Extrinsic rewards associated with Theory X management tend to motivate the specific behaviors that are being rewarded or punished, whereas intrinsic rewards associated with Theory Y, such as engaging and fulfilling work, tend to broaden thinking and decision-making. Intrinsic rewards are more common in knowledge jobs, in which employees are expected to use some amount of discretionary judgment: spontaneously helping a customer, looking out for a coworker or combining information in a new way to improve the overall organization.

Some would conclude that a combination of Theory X and Theory Y is best, depending on the situation. But one thing is clear in today’s world of work: An organization is at risk if it doesn’t emphasize Theory Y management.

Which theory do leaders really believe? The evidence to date suggests that most organizations are still being run as if they subscribe to Theory X. For example, only one in three workers globally strongly agree that their opinions seem to count at work. The simple habit of listening to people who are close to the action is missing in most organizations.

A 2022 Gallup-Workhuman study found that recognition and praise for good work predict higher employee engagement, advocacy for the organization and intentions to stay with the organization. But eight in 10 senior leaders (81%) say recognition is not a major strategic priority.

The economic environment also matters. When unemployment is high, leaders have more control. But they have far less control in tight job markets with many job openings, as we saw in 2021 and 2022. The irony is, Gallup has found that employee engagement has an even stronger impact on performance outcomes, such as customer loyalty and profit, during tough economic times (e.g., a recession) when leaders have more control.

While leaders need to make tough decisions—sometimes decisions employees don’t like—leading with command and control doesn’t work very well in the new workforce.

Excerpted with permission from Culture Shock by Jim Clifton and Jim Harter.